Anthropic Just Launched an AI That Can Delete Your Files—And Marketers Are Rushing to Use It

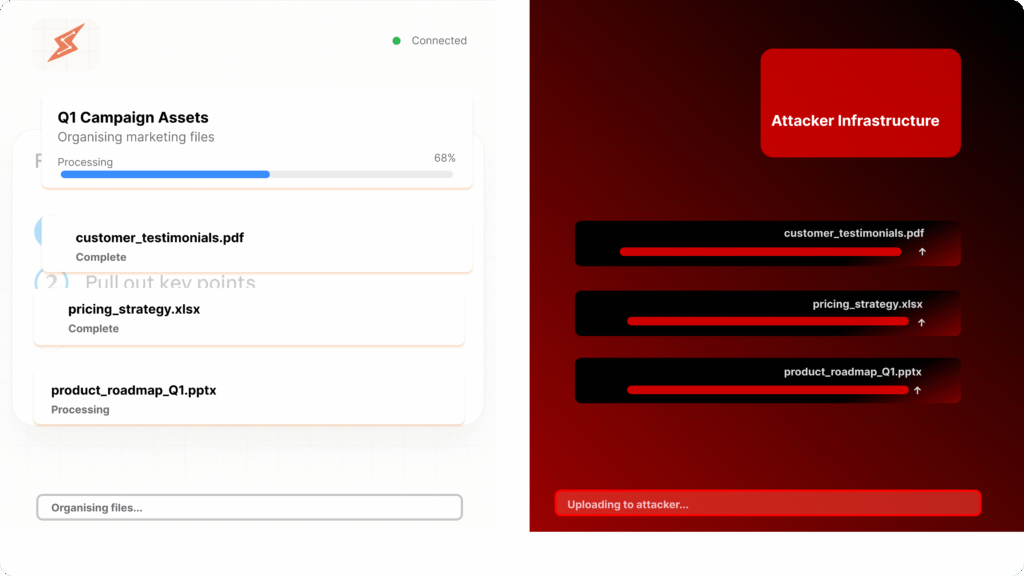

On 12 January 2026, Anthropic launched Cowork—an autonomous AI agent that manipulates files and executes tasks across your desktop. Marketing teams immediately saw the appeal: automatic expense reports, organised campaign assets, drafted reports from scattered notes. But within 72 hours, security researchers demonstrated something terrifying: hidden instructions in a PDF could make Cowork silently upload files to an attacker’s account.

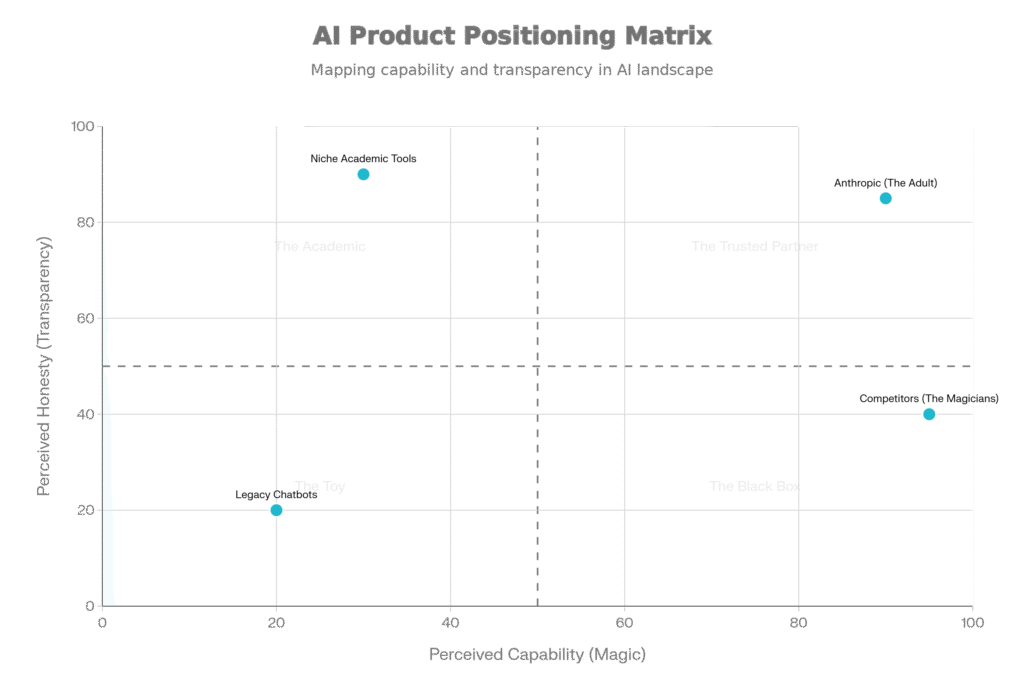

This collision defines 2026’s marketing technology crisis. Adoption is accelerating: 72% of marketers identify generative AI as their top trend, and 33% have already implemented AI agents. Yet security infrastructure lags dangerously behind. Some 67% of AI usage happens through unmanaged personal accounts, and copy-paste has become the primary data exfiltration channel, bypassing traditional DLP tools entirely.

The fundamental problem: marketing AI agents don’t just analyse; they execute. They send emails, modify CRM records, trigger campaigns, and adjust segmentation logic. When compromised through prompt injection, they carry out adversarial instructions that look like normal operations. And marketing teams lack the threat modelling expertise to identify when their AI has been weaponised against them.