In what might be Silicon Valley’s most intriguing—and expensive—collaboration in years, OpenAI CEO Sam Altman and legendary Apple designer Jony Ive have officially joined forces to design the next era of human-AI interaction. Their partnership, made official with OpenAI’s $6.5 billion acquisition of Ive’s stealth hardware startup io, promises something beyond a shiny new gadget. It’s a bold attempt to rethink how we live with machines.

Altman and Ive aren’t just making another voice assistant or smartwatch. They’re envisioning a family of AI-native devices that blend seamlessly into our environment—ambient tools that know us, anticipate us, and maybe even understand us. Forget keyboards and screens. If they succeed, these devices will make our phones feel as quaint as rotary dials.

A Quiet Beginning With Big Intentions

The collaboration started quietly back in 2023, built on mutual curiosity and a shared dissatisfaction with today’s tech interfaces. Ive, still riding the halo of his Apple years, had formed LoveFrom to work on design projects with moral purpose. Altman, meanwhile, was leading OpenAI into a new phase of public dominance. Together, they saw a missed opportunity: AI was getting smarter, but the devices we used to interact with it were stuck in the past.

“Computers are now seeing, thinking, and understanding,” the duo noted. “Despite this unprecedented capability, our experience remains shaped by traditional products and interfaces.” Translation: Siri and Alexa aren’t it. We need new forms, new gestures—maybe even new instincts.

For Ive, this moment feels personal. “This is the first time since Steve that I’ve felt this kind of creative alignment,” he said, evoking his long and storied collaboration with Steve Jobs. No pressure.

A Billion-Dollar Bet on Design

In 2024, Ive co-founded io, a startup engineered specifically for this partnership. Now under OpenAI’s wing, the company brings together a reunion cast of Apple veterans: Scott Cannon, Evans Hankey, Tang Tan, and other design heavyweights. It’s not just nostalgia; it’s a calculated concentration of institutional memory and industrial design firepower.

With a 55-person team of engineers, physicists, and designers, io operates as an internal hardware division within OpenAI, while LoveFrom remains independent, taking charge of design direction. The structure preserves Ive’s creative autonomy while embedding his aesthetic into the core of OpenAI’s future.

The Vision: Not Wearable, Not Replaceable—Ambient

Altman and Ive aren’t building a smartphone killer. Instead, their devices are described as ambient companions—AI-powered tools that respond to voice, touch, gesture, and possibly even emotion. Think less “Hey Siri” and more “subconscious co-pilot.”

Altman described the concept as moving from “AI as a tool you start, to AI as a presence in your life.” The goal is seamless integration, not attention-hogging screens. These devices will work with you, not for you—an idea that sounds charming until you remember that Clippy also wanted to help.

The product design borrows heavily from Ive’s aesthetic minimalism. Some prototypes reportedly resemble polished metal pendants or sleek amulets—objects designed to be beautiful, non-intrusive, and slightly mysterious, as if HAL 9000 had joined Goop.

Prototypes, Promises, and Production

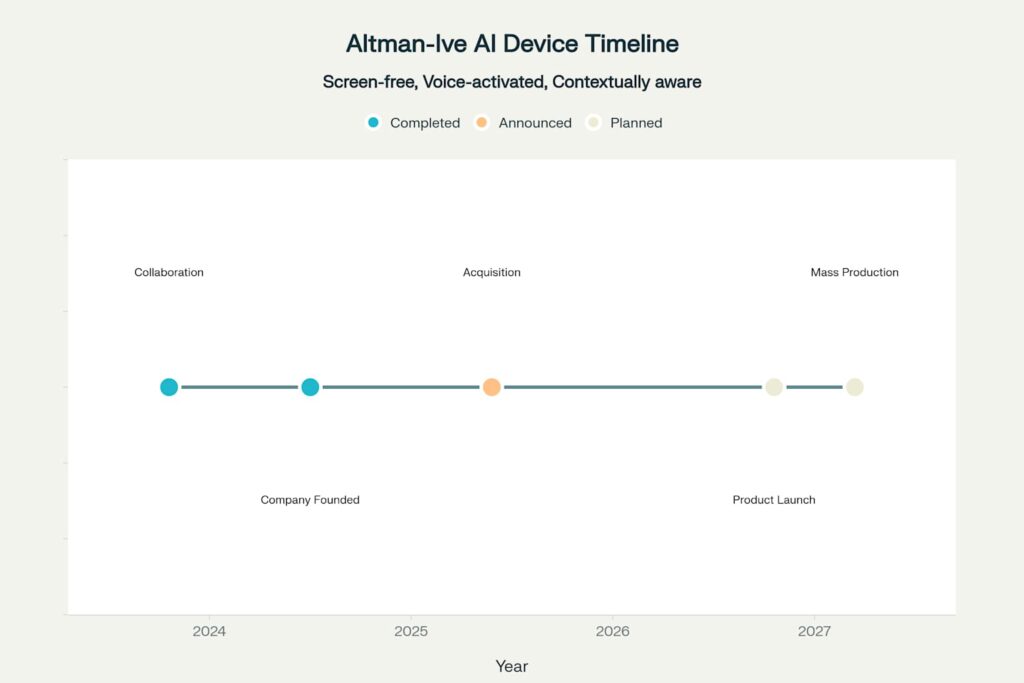

Timeline of key milestones in the Altman-Ive AI hardware initiative, from early collaboration to planned mass production in 2027. Source: OpenAI projections.

Altman has already taken one of the prototypes home. “It’s the coolest piece of technology that the world will have ever seen,” he gushed, like a proud dad who thinks his AI device just won the science fair.

Launch is expected in late 2025 or early 2026, with an ambitious goal of delivering 100 million units—a figure that would eclipse the launch of the iPhone, PlayStation, and most religions. OpenAI is reportedly in talks with manufacturers to scale up production, because nothing says “we’re serious” like signing contracts before the public knows what the product is.

Innovative Products Emerging from the Sam Altman-Jony Ive Collaboration: A New Era of Ambient AI

The partnership between Sam Altman’s OpenAI and Jony Ive’s design collective LoveFrom, formalized through the $6.5 billion acquisition of io, represents a convergence of cutting-edge AI and minimalist industrial design. Their collaboration aims to redefine human-computer interaction by moving beyond screen-based interfaces toward ambient AI devices that integrate seamlessly into daily life. Below is an analysis of the innovative products likely to emerge from this union, informed by disclosed prototypes, strategic goals, and industry trends.

1. Ambient AI Companions: The “Third Core” Device

This device is shaping up to be the centerpiece of the collaboration’s ambitions. Roughly the size of a compact badge or small pendant, it is intended to be worn, pocketed, or placed unobtrusively nearby. Key features reportedly include an always-on microphone, a depth-sensing camera, and secure neural processing units for on-device inference. Unlike smartphones that demand constant attention, this device quietly observes, interprets, and assists. Imagine walking into your kitchen and having it suggest a lunch recipe based on what is in your fridge, or being gently reminded about a call after it overhears you mention it.

More than a voice assistant, it is designed to feel intuitive and deeply personal, responding to environmental triggers, your tone of voice, and even your posture. It integrates with your devices but stands apart from them—an intelligence that operates without the tyranny of touchscreens.

2. AI-Native Creative Tools

Concept Art of Ambient AI Interface in Wearable Form

At the heart of these tools is the idea that creativity should be accessible, not gated by complex software. A next-generation desktop terminal, possibly shaped like a low-profile console or drawing slate, could allow users to brainstorm and iterate using speech, touch, and gestures. Sketch a chair and have it rendered in 3D. Describe a character and get draft illustrations or animations in real time.

Smart glasses, another rumored prototype, may project interactive design layers into the physical world, turning a designer’s entire studio into a collaborative canvas. Rather than a VR headset experience, this would be more lightweight, possibly using ultra-clear lenses and bone-conduction audio to allow for seamless mixed reality.

3. Health and Wellness Monitors

These aren’t your average fitness bands. Ive and Altman reportedly envision devices that detect respiratory patterns, stress levels, and behavioral anomalies by integrating biofeedback sensors with machine learning models trained on vast health data sets. Think of a pendant that subtly alerts you to dehydration, or a wearable that tracks sleep apnea through audio and vibration analysis.

The goal is proactive, preventative care—not just numbers, but insights. These devices would connect directly to digital health services, offering digestible summaries or even initiating clinical support if a concerning trend is detected.

4. Educational and Productivity Assistants

The education-focused concepts include a small, orb-like tabletop assistant that could function as a tutor, study buddy, or meeting concierge. Equipped with voice interaction and light-based feedback (e.g., glowing different colors to indicate status), it could explain difficult concepts out loud or use computer vision to summarize articles placed next to it.

In professional environments, the assistant might handle your daily briefing, manage emails, and summarize meetings. One version even includes a spatial audio projector to simulate virtual presence in hybrid meetings.

5. Ethical and Accessibility-Centric Design

All devices are expected to include multiple input modes: voice, gesture, haptics, and even customizable touch surfaces. Accessibility is core to the design. Adaptive interfaces for neurodiverse users and multilingual support are planned, and physical modularity will allow wearers to clip, wear, or mount devices in ways that fit their specific needs.

An “accessibility-first” prototype even features braille-inspired tactile surfaces for the visually impaired and an AI assistant that translates visual surroundings into spatial audio narratives.

Challenges

Mass production, energy efficiency, and data privacy pose significant barriers. OpenAI must address these while maintaining the elegance and functionality expected from Ive’s team.

The Subscription Model: AI as a Utility

OpenAI plans to distribute the hardware via a subscription model, potentially bundled with ChatGPT access. “If you’re a subscriber to ChatGPT, we should just send you new computers,” Altman told staff. That’s either revolutionary thinking or an aggressive mail-order campaign.

By treating hardware as a service rather than a luxury purchase, OpenAI hopes to lower barriers to entry and create a long-term user base. The model could also enable iterative hardware updates and tighter integration with AI services, assuming users are cool with their new “computer” arriving like a magazine.

Challenges: From Competition to Carbon

Of course, there’s no shortage of potholes on the road to ambient AI nirvana. The consumer hardware market is a brutal, capital-intensive battlefield littered with failures—especially AI devices that promise companionship and end up as overpriced paperweights. Just ask Humane’s AI Pin or Rabbit R1, both of which underwhelmed critics and baffled users. Ive didn’t mince words, calling them “very poor products.”

And then there’s the money. OpenAI is forecasting $44 billion in losses before profitability sometime after 2029. Not exactly a reassuring timeline for a company entering hardware manufacturing, where mistakes are expensive and scaling is chaotic.

Environmental concerns loom large, too. Ambient AI means constant processing, cloud access, and data storage, all of which burn water, energy, and attention spans. One estimate suggests that generating a single AI-written email may consume about as much water as a standard water bottle holds.

Finally, there’s the inconvenient question: do people even want this? A recent study found that 46% of Gen Z would prefer to live in a world without the internet. Selling them an always-on AI presence might feel more dystopian than dreamy.

The Verdict: Genius, Folly, or Both?

The Altman-Ive collaboration is audacious, strange, and packed with ambition—the kind of tech moonshot that usually only exists in TED Talks or pitch decks. They want to own the interface, redefine personal computing, and casually dethrone the smartphone—all in under two years.

If they succeed, the result could be the most significant leap in consumer technology since the iPhone. If they don’t, well, it’ll still make a fascinating postmortem for Wired.

The real question is not whether they can build it. It’s whether the rest of us will want to live with it.