OpenAI is marketing AI for medical advice whilst simultaneously disclaiming liability. Here’s why that matters now.

January 2026 marks a pivot point. As ChatGPT usage surged 160% over 2025, OpenAI launched six campaign videos positioning its chatbot as a healthcare navigator and as enterprise infrastructure. The timing isn’t coincidental. With Gartner predicting 25% of search volume shifting to AI chatbots by end-2026, OpenAI is racing to establish ChatGPT as the default answer engine before users ask whether it’s actually safe for the questions being posed.

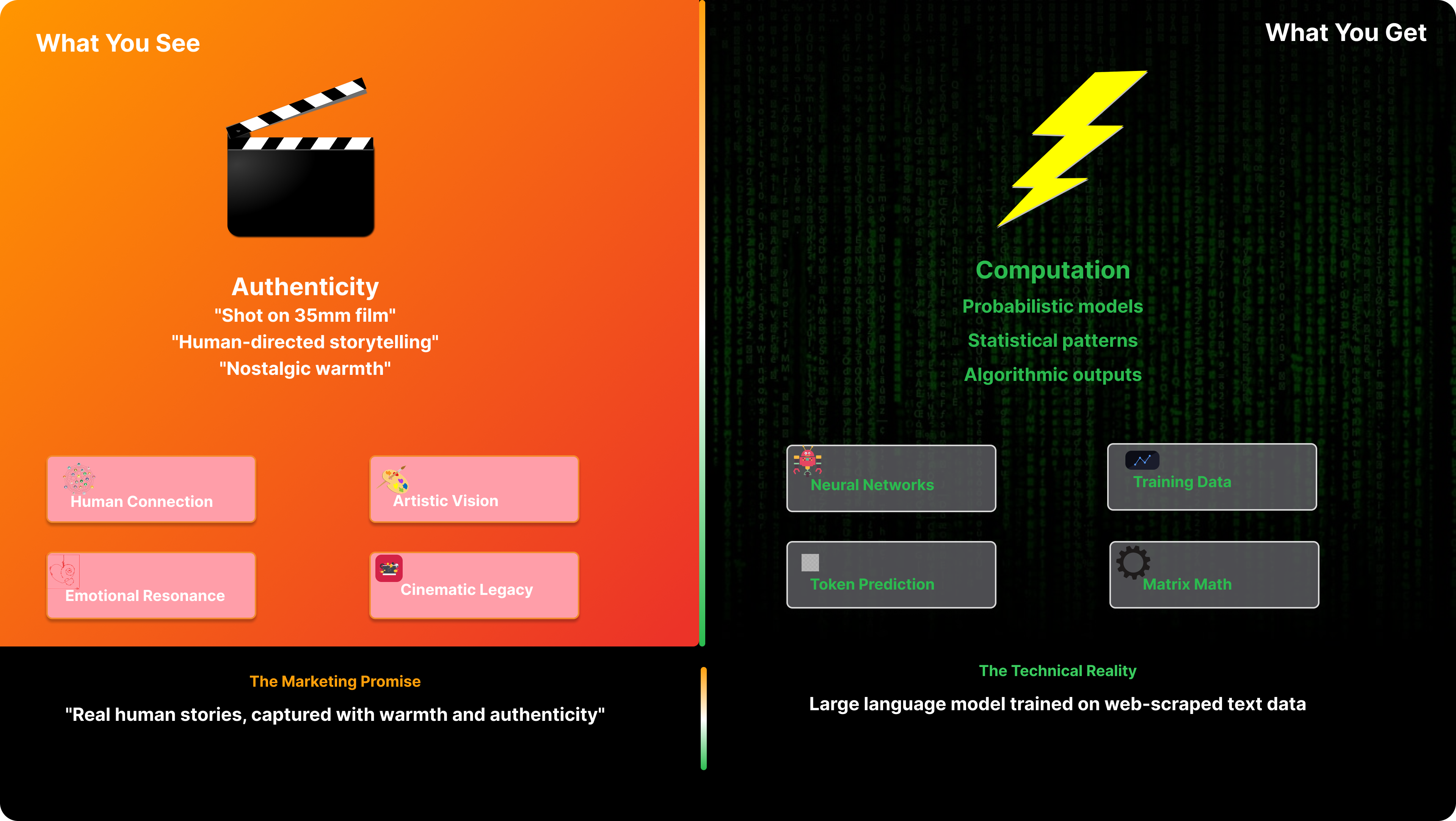

These campaigns deserve scrutiny not despite their craft, but because of it. Shot on 35mm film by director Miles Jay, featuring real patients managing chronic illness and major banks deploying AI at scale, the videos are emotionally sophisticated, beautifully executed, and profoundly misleading about the gap between capability and safety.

Here’s what marketers need to understand about what OpenAI is doing—and why it sets a dangerous precedent.

The Healthcare Narrative: Empowerment or Exposure?

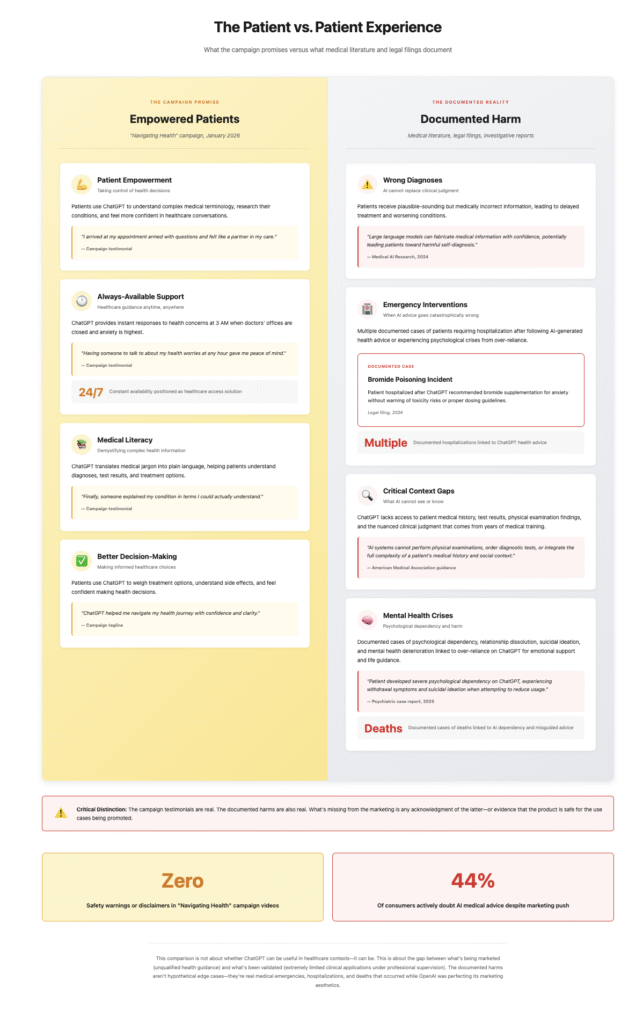

Navigating Health | with ChatGPT opens with a mother balancing childcare and fitness, asking ChatGPT for a quick workout. Cut to a woman with endometriosis seeking symptom management. Next, a child’s cancer diagnosis. Finally, an eczema sufferer checking product ingredients. The 2½-minute spot weaves patient testimonials into a single thesis: ChatGPT democratises healthcare access for the 40 million Americans using it daily for health information.

The Data That Lends Credibility

The supporting statistics lend apparent legitimacy. Seventy per cent of health conversations happen outside clinic hours. Moreover, six hundred thousand weekly messages come from rural or underserved communities. One patient describes arriving at doctor’s appointments “armed with questions” rather than confused. Another invented a personal metric called “Burtness” to track symptom severity. Throughout the campaign, the subtext remains clear: AI fills gaps left by an inequitable system.

This is sophisticated need-state marketing. It channels legitimate frustration (US healthcare is expensive, inequitable, and gatekept) and positions ChatGPT as a compassionate ally.

The problem is that the tool being marketed has a documented record of providing dangerous medical misinformation, and OpenAI knows it.

The Safety Record They’re Not Advertising

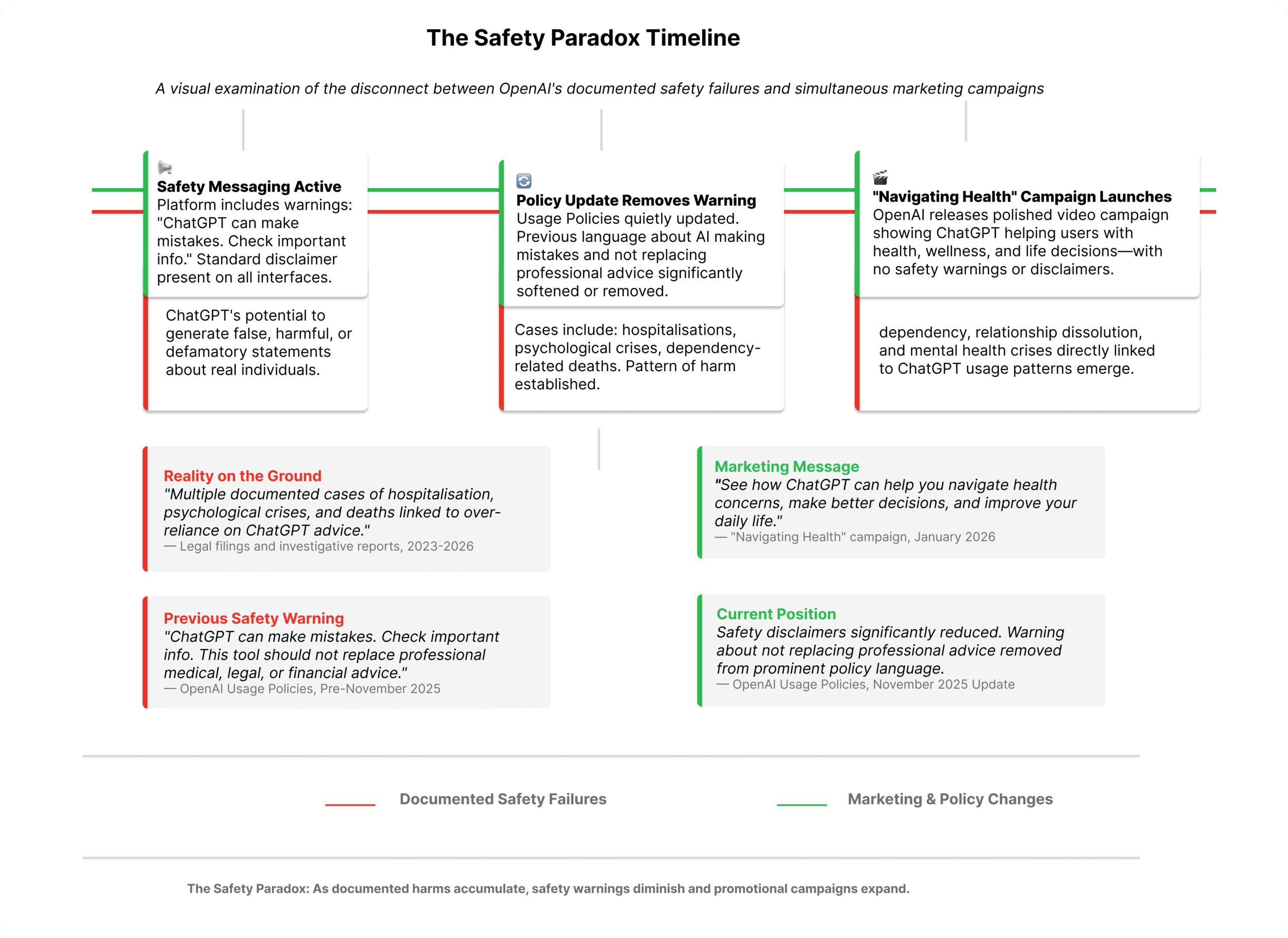

Whilst OpenAI was filming patients discussing empowerment, multiple lawsuits were alleging that its leadership "personally overrode safety objections and rushed the product to market", specifically by compressing GPT-4o safety testing from months to one week to beat Google’s competing launch. Simultaneously, The New York Times documented "dozens of cases" in which prolonged ChatGPT conversations contributed to delusions, psychological crises, or suicidal ideation. Several users were hospitalised, and multiple deaths have been attributed to the platform.

When Algorithms Fail Medicine

The medical literature reveals specific failure modes. ChatGPT provides antibiotic recommendations without questioning whether the patient actually has an infection. Additionally, it offers reassuring paediatric fever guidance without asking the child’s age—a potentially fatal oversight for infants under two months. Similarly, it fails to recognise that vague descriptions of “nerve pain” might indicate kidney stones requiring urgent intervention.

In August 2025, a published case report described a 60-year-old man hospitalised with bromide poisoning after he replaced table salt with sodium bromide, a substitution he made after consulting ChatGPT.

The Policy Contradiction

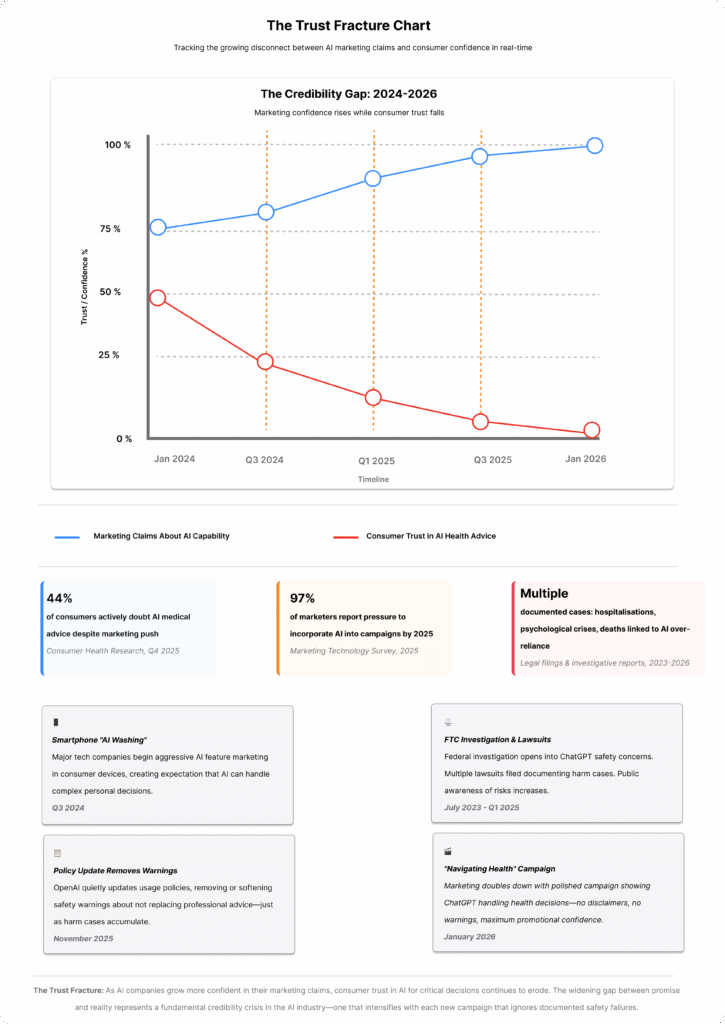

OpenAI’s response to this crisis reveals the fundamental tension. In November 2025—just two months before launching the healthcare campaign—the company updated its usage policy to prohibit “provision of tailored advice that requires a licence, such as legal or medical advice”. Paradoxically, it removed warnings that previously cautioned users against seeking medical advice from the chatbot.

In essence, this is the healthcare marketing equivalent of selling cigarettes with a whispered disclaimer on the back whilst running billboard campaigns featuring doctors.

The Regulatory Quicksand

The timing makes this particularly egregious. On 1 January 2026, the same week OpenAI’s health campaign launched, California’s AB 489 took effect, prohibiting AI systems from using terminology or design elements suggesting healthcare licensure. On the same day, Texas’s Responsible Artificial Intelligence Governance Act (TRAIGA) also took effect, requiring conspicuous written disclosure when AI is used in diagnosis or treatment.

A History of Regulatory Scrutiny

OpenAI has been skating on regulatory thin ice since July 2023, when the Federal Trade Commission launched an investigation into potential consumer protection violations, issuing a 20-page investigative demand focused on how ChatGPT handles personal information and generates false statements. Since then, the FTC’s Operation AI Comply has intensified enforcement, specifically targeting companies that make deceptive AI capability claims.

In one notable case, the FTC took action against DoNotPay, a company marketing itself as "the world’s first robot lawyer", alleging that it had never tested its AI against human attorney performance. OpenAI’s health campaign skirts dangerously close to the same line, marketing diagnostic and treatment guidance capabilities without clinical validation.

The company now faces potential liability on multiple fronts: the FTC Act (consumer protection), state privacy laws (health data handling), medical device regulations if the FDA determines ChatGPT functions as a diagnostic tool, and negligence claims if users suffer harm from following erroneous advice.

The 35mm Irony: Humanising AI by Denying Its Artificiality

The most revealing strategic choice across these campaigns is their aggressive de-technologisation. The general brand spots were shot entirely on 35mm film by a human director, using real actors in actual settings. This isn’t accidental craft. Rather, it’s a calculated pivot away from the slick futurism that typically characterises AI marketing.

The Nostalgia Strategy

Consider the deliberate irony: rather than use the generative tools it sells, OpenAI hired human directors to advertise generative AI technology using analogue film stock.

Moreover, the campaign weaponises nostalgia—Simple Minds soundtracks, golden-hour cinematography, emotional crescendos borrowed from 1980s coming-of-age films.

This represents what commentators are calling the "AI authenticity paradox". As one marketing analyst observed: "AI companies are investing heavily in emotional storytelling to humanise their technology, whilst traditional brands are weaponising anti-AI sentiment to champion their ‘human touch.'" Ultimately, both strategies acknowledge the same consumer psychology: people aren’t rejecting AI functionality; they’re rejecting the feeling of being processed.

The Counter-Narrative Emerges

The counter-campaign orchestrated by Havas Lynx in October 2025 makes this tension visible. Its reactive outdoor advertising "hijacked" OpenAI’s billboards with a simple message: "Chat to your GP, not ChatGPT." Significantly, the campaign cited research showing that 44% of consumers doubt the reliability of AI medical advice.

This modest intervention exposes the fundamental instability of OpenAI’s positioning: a significant portion of the target audience doesn’t trust the product for the use case being marketed.

The Enterprise Playbook: Governance Theatre as Competitive Advantage

The three BNY videos deploy authenticity differently but no less strategically. Twenty thousand employees building AI agents. Contract review time slashed by 75%. Account planning accelerated by 60%. Throughout, the message remains consistent: AI as productivity multiplier, safely contained within corporate guardrails.

What the Metrics Reveal—and Conceal

This narrative serves multiple functions. Firstly, it provides social proof for enterprise buyers wary of AI adoption. Secondly, it addresses the “governance gap” that prevents corporations from deploying generative AI at scale. By showcasing a 240-year-old financial institution successfully deploying ChatGPT, OpenAI neutralises the “too risky” objection.

Nevertheless, what’s absent is equally telling. The videos don’t explore what happens when the AI agent makes a legal interpretation error in a contract that passes through review 75% faster. Furthermore, they don’t address how BNY validates output quality or manages the institutional risk of efficiency gains predicated on probabilistic models.

Ultimately, this reveals a broader pattern in B2B AI marketing: governance has become a brand attribute rather than a technical specification. Companies aren’t buying OpenAI’s safety architecture; instead, they’re buying the appearance of partnership with a company that claims to prioritise safety. It’s reputation arbitrage.

What This Means for Marketing

If you’re a marketing professional, these campaigns offer uncomfortable lessons—and three reasons they should matter to you right now.

First: The Template Is Replicating

The copycat effect is already underway.

Every company competing in generative AI faces the same monetisation pressures and capability gaps that OpenAI confronts. Consequently, these campaigns demonstrate that you can market AI for high-stakes applications—healthcare, legal, financial—without clinical validation, without regulatory approval, and despite documented harms, as long as you wrap capability claims in sufficiently emotional narrative.

This will become industry standard unless challenged. The brands winning in 2026 aren’t optimising for keywords; they’re optimising for citations in AI-generated answers. As traditional search volume shifts to chatbots, visibility increasingly depends on how your brand appears in AI outputs.

Therefore, OpenAI isn’t just marketing a product; it’s establishing the rules for the ecosystem it controls.

The Pattern Across Campaigns

OpenAI has been iterating this playbook for months. Its GPT-5 testimonial campaign, featuring Canva and Uber executives, showcased how corporate choreography manufactures authentic-seeming advocacy. The ChatGPT Pulse launch in September 2025 then signalled the company’s shift toward proactive AI assistance, positioning the tool as a morning companion rather than a reactive chatbot. Even its 10-year anniversary video carefully erased board crises and safety controversies, presenting only commercial triumph.

Second: Trust Is Fracturing in Real Time

The credibility gap widens daily.

Whilst 97% of marketing leaders say marketers must know how to use AI, consumers are growing more sceptical. The gap between what brands claim AI can do and what users experience compounds daily. Moreover, every hospitalisation from bad AI advice, every lawsuit alleging rushed deployment, every regulatory investigation erodes the category credibility that benefits all AI marketing.

This creates asymmetric risk. The companies marketing most aggressively—with the most sophisticated emotional storytelling—are the ones creating the backlash that will eventually constrain the entire sector.

As a result, you’re not just competing with OpenAI for attention; you’re inheriting the regulatory blowback their positioning generates.

The Accumulated Evidence

I’ve documented this pattern repeatedly. During the September 2025 smartphone marketing siege, the "AI washing" epidemic (every feature labelled AI whether warranted or not) trained consumers to distrust the term entirely. Anthropic’s Claude Sonnet 4.5 launch offered a counter-example, showing actual workflows and acknowledging limitations. OpenAI chose the opposite path.

Third: The Ethical Floor Is Dropping

OpenAI’s campaigns set a new baseline for acceptable deception: it’s acceptable to market tools for applications they’re demonstrably unsafe for, provided you construct plausible deniability through terms-of-service updates. You can position AI as a medical adviser whilst disclaiming medical responsibility. Similarly, you can showcase enterprise efficiency gains whilst eliding accuracy trade-offs.

You cannot simultaneously market a tool for medical self-diagnosis and disclaim medical responsibility.

In essence, this normalises a specific kind of marketing malpractice: selling aspiration whilst minimising limitation, constructing desire through emotional storytelling that pre-empts rigorous evaluation.

It’s the tobacco playbook adapted for the algorithm age—and it will define the next decade of tech marketing unless practitioners refuse to replicate it.

What Responsible Marketing Looks Like

Here’s the counterfactual: what would these campaigns look like if OpenAI foregrounded safety limitations alongside capabilities?

The Alternative Approach

A health campaign that showed patients using ChatGPT to prepare questions rather than self-diagnose. Enterprise videos that demonstrated governance failures caught by human review. Marketing that acknowledged the technology’s probabilistic nature and positioned it accordingly.

Such campaigns would be more honest—and almost certainly less effective in the short term. Specifically, they would acknowledge that large language models, no matter how sophisticated, lack the contextual reasoning, knowledge boundaries, and liability frameworks that characterise professional medical or legal judgement.

The Long-Term Benefits

However, they would also be sustainable. They would build trust rather than exploit it. Moreover, they would invite regulatory partnership rather than provoke enforcement. Most importantly, they would establish realistic user expectations that the technology could consistently meet.

OpenAI chose otherwise. These campaigns present AI capability as further advanced, and safety infrastructure as more robust, than the company’s regulatory record suggests.

This isn’t optimistic marketing—rather, it’s the construction of public expectation that outpaces technical reality.

The Choice Ahead

We’re at an inflection point. The question these campaigns pose isn’t whether AI can assist with healthcare navigation or improve enterprise productivity—it demonstrably can, within proper boundaries.

Rather, the question is whether those boundaries will be established through rigorous safety validation, or whether they’ll be defined post-hoc, through marketing narratives, litigation, and the occasional hospitalisation.

What OpenAI Has Chosen

OpenAI has chosen the latter path. Moving fast, marketing aggressively, apologising later.

The campaigns are excellent—emotionally sophisticated, beautifully crafted, strategically sound. That’s precisely what makes them dangerous.

For marketers watching this unfold, the lesson isn’t to replicate the playbook. Instead, it’s to recognise what’s being normalised and refuse to participate.

Because when the regulatory reckoning comes, and it will, "everyone was doing it" will not work as a defence. It never has.

Your Decision Point

The campaigns succeed as marketing precisely because they fail as responsible corporate communication.

Ultimately, the question is whether the industry will reward that success or demand better.

Right now, in January 2026, with AI visibility becoming the new SEO and citation replacing ranking, that choice is yours to make.

Choose carefully. The patients relying on AI health advice—and the regulators investigating what happens when that advice fails—are watching.

Related Reading:

- ChatGPT Pulse: When Your Assistant Thinks Three Steps Ahead

- The Testimonial Tango: OpenAI’s GPT-5 Marketing Choreography

- OpenAI at 10: The Selective Memory of a Brand Anniversary

- Claude Sonnet 4.5: How Anthropic Cracked AI Marketing

- The September Smartphone Siege: When AI Washing Became Industry Standard

Sources:

- Best Media Info: ChatGPT usage rose 160% in 2025

- Seafoam Media: January 2026 Marketing News

- Shoot Online: OpenAI, Isle of Any and Director Miles Jay Pull Together

- Newsmax: Millions Use ChatGPT for Health Questions, OpenAI Says

- Fox Baltimore: New lawsuits accuse OpenAI’s ChatGPT of acting as a suicide coach

- Psychiatric Times: Misguided Values of AI Companies and the Consequences for Patients

- Glass Box Medicine: ChatGPT is Not a Doctor: Hidden Dangers in Seeking Medical Advice from LLMs

- Gizmodo: More Than 40 Million People Use ChatGPT Daily for Healthcare Advice

- LinkedIn: OpenAI updates usage policy to avoid liability for AI advice

- MIT Technology Review: AI companies have stopped warning you that their chatbots aren’t doctors

- JD Supra: New Year, New AI Rules: Healthcare AI Laws Now in Effect

- CNN: FTC is investigating ChatGPT-maker OpenAI for potential consumer harm

- FTC: FTC Announces Crackdown on Deceptive AI Claims and Schemes

- LinkedIn: OpenAI uses human directors, 35 mm film for ads

- LinkedIn: AI Authenticity Paradox: Brands Balance Human Touch

- Ads of the World: Chat GP campaign

- LinkedIn: BNY Embeds AI Across Organization with OpenAI

- Startup Hub: BNY Mellon Cuts Financial Planning Time by 60% Using Internal OpenAI Platform

- Sprout Social: 7 social media trends you need to know in 2026