Meta has found a new way to play the hero. Not with “time spent” or “meaningful social interactions”, those old slogans gathering dust in Menlo Park, but with something far more cinematic: ex‑FBI agents, “constellations of indicators”, and an AI early‑warning system for school shooters. Conveniently, this heroism plays out on the same platforms where the attention economy still hums along, ad auctions and all.[ppl-ai-file-upload.s3.amazonaws]

This new Meta safety film is not just a corporate PSA. It is a template for how platforms will sell surveillance as comfort, much like the way brands now choreograph emotion and algorithmic spectacle in work such as Swiggy’s “people‑powered” anthem campaigns. It is also a warning for marketers who still think “brand safety” means avoiding swear words near their pre‑rolls, rather than police reports and data pipelines.[ppl-ai-file-upload.s3.amazonaws]


“This isn’t just a safety film; it’s a sales deck for surveillance dressed up as public service.”

1. The two‑minute thriller where Meta is the good guy

The video is simple enough: a short, sombre piece titled Meta’s Early Detection and Disruption Work. In it, we meet Emily Vacher, a former FBI agent who has spent about 15 years on Meta’s law enforcement outreach team, describing how her group helped stop two potential school shootings – one in Houston, one in Queens.[ppl-ai-file-upload.s3.amazonaws]

The plot in three moves

The beats are textbook.

First comes the news‑style voiceover: parents in shock, a “possible plot to massacre students”, and the familiar arc of panic followed by relief. Then we are introduced to the protagonist: ex‑FBI, now working inside Meta.[ppl-ai-file-upload.s3.amazonaws]

After that, the film offers two clean success stories. In February 2025, Meta identifies two students planning a mass shooting, sends a report to the FBI, and “48 hours later” Vacher hears that they’ve stopped a school shooting. A few months later, a student posts an image on Instagram while sitting in class in Queens; Meta alerts the FBI and NYPD, and “88 minutes after our report” the student is in custody and the gun recovered.[ppl-ai-file-upload.s3.amazonaws]

The narrative then pivots. A small team of women in law enforcement outreach, working with “Instagram forensics” and “the power of AI”, set out to find “people who are potentially going to be school shooters”. There is “research”, there are “experts”, and there is that comforting statistic: in about 80 per cent of cases, school shooters signal their intent beforehand.[pmc.ncbi.nlm.nih][ppl-ai-file-upload.s3.amazonaws]

What the story leaves out

On a first watch, the film feels like a tasteful corporate reel. Once you look again, though, it resembles a manifesto for how platforms want to be seen in 2026: as a kind of privatised early‑warning system for the state – and by extension, for you, the viewer, whose children they protect while you’re at work.[ppl-ai-file-upload.s3.amazonaws]

Plenty is missing. The film never acknowledges how these platforms can also intensify grievance, isolation or extremism. It excludes student voices, teachers and mental health professionals, and it sidesteps mistakes, edge cases and contested interventions altogether.

Ultimately, social media appears not as a risky environment, but as a neutral surface where dangerous people accidentally leave clues. In that world, Meta becomes the sharp‑eyed detective that notices what no one else does.[ppl-ai-file-upload.s3.amazonaws]

“The only people who get to speak are the platform and the police. Everyone else is scenery.”

2. Safety as a brand platform: who gets to be the hero?

The first big narrative move is subtle: social networks do not create or amplify risk; they simply detect it. The “80 per cent” line does heavy lifting here, and it is not baseless. Serious research backs the idea that would‑be shooters often communicate violent intent, or what threat assessors call “leakage”. Studies of mass shootings and school attacks in the US have found that many perpetrators talk, post, or message about violence in advance, especially in school settings.[jamanetwork+2][ppl-ai-file-upload.s3.amazonaws]

From leakage to legitimacy

Here is where the sleight of hand happens. The film moves from “people leak signals” to “therefore, Meta must scan, interpret, and escalate those signals at scale – and you should feel reassured that it does”.[ppl-ai-file-upload.s3.amazonaws]

Those research findings quietly become design justification. In the research, leakage often looks as much like a cry for help as a boast; the JAMA Network Open study on mass public shootings describes leakage as a critical moment for mental health intervention, not just a police response. Yet the film shows only law enforcement outcomes: arrests, confiscated weapons, relieved parents.[ojp+1][ppl-ai-file-upload.s3.amazonaws]

In other words, the video narrows the cast of legitimate actors. The serious characters are the platform and the state. Everyone else is either a potential victim or a potential threat.[ppl-ai-file-upload.s3.amazonaws]

The ex‑FBI aesthetic

Marketers will recognise the device. Vacher’s FBI background gives Meta borrowed seriousness. She appears calm and credible, framed as a public servant who changed desks, not sides.[ppl-ai-file-upload.s3.amazonaws]

That aesthetic matters. When an ex‑FBI agent talks about “reports”, “research” and “outreach”, it feels less like a content moderation decision and more like a national security mission. For many viewers, questioning that arrangement will feel almost indecent. If you challenge the story, do you also challenge the fact that two school shootings were allegedly prevented?[ppl-ai-file-upload.s3.amazonaws]

This is the rhetorical trick. The film offers a moral test so blunt that most people decline to engage with the fine print.[ppl-ai-file-upload.s3.amazonaws]

“If you question the story, you sound like you’re against stopping school shootings. That’s the genius – and the danger – of the framing.”

3. The AI plot device: omniscient, opaque, and inexplicably polite

Now to the bit that will make every AI‑flavoured marketer wince in recognition.

The video mentions that the team “needed to partner with Instagram forensics for the power of AI so that we can find people who are potentially going to be school shooters”. It also notes that they look for “a constellation of indicators” and, as the tech evolves, they “can get better at this”.[ppl-ai-file-upload.s3.amazonaws]

Buzzwords doing quiet work

On the surface this sounds advanced and reassuring. Underneath, it is almost aggressively vague.

For example, which indicators matter? Keywords, images of weapons, meme aesthetics, follower networks, previous reports, location trails? How high is the false positive rate? How many teenagers trigger the system because they post dark humour, song lyrics, or gaming screenshots? And how often does the model misread context in non‑US communities or non‑English languages?

The film answers none of these questions. AI appears as a benevolent black box: tireless, impartial, always “getting better”, and reliably on the right side.[ppl-ai-file-upload.s3.amazonaws]

Researchers and practitioners see a more complicated picture. AI‑driven threat detection tools can help surface signals from huge volumes of data, yet they also inherit biases and blind spots from their training data and deployment context. Moderation systems already struggle with satire, reclaimed slurs, coded speech and cultural nuance. Now similar stacks are being asked to infer who might “potentially” carry out a school shooting.[ojp+1][ppl-ai-file-upload.s3.amazonaws]

From recommender system to armchair psychologist

It is hard not to see the irony here. We now expect the same category of systems that once pushed conspiracy content for engagement to retrain as clinical threat assessors.[ojp]

The JAMA leakage study underlines that many shooters who leaked intent were suicidal and had prior contact with counselling services. Leakage was often more “cry for help” than villain monologue. The authors frame this as an opportunity for mental health and community intervention.[pmc.ncbi.nlm.nih+1]

In the video, that nuance simply vanishes. The machine sees; the team responds; the police act; the parents exhale. For marketers, the parallel to “AI‑powered personalisation” should feel uncomfortably close: the same instinct to sell the tool as smart but frictionless, powerful yet never messy.[ppl-ai-file-upload.s3.amazonaws]

We have already watched brands lean on visual sleight of hand in the name of “wow” – from floating mascaras over Mumbai metros to CGI‑polished cityscapes – and then act surprised when people question what is real.

“We’ve asked the recommender system that once nudged conspiracy videos to now moonlight as a forensic psychologist.”

4. Surveillance as comfort: the new face of “brand safety”

This is where the film stops being just Meta’s business and becomes ours.

At its core, the video mainstreams a feeling: that continuous content scanning, pattern analysis and sharing signals with law enforcement is not only acceptable but desirable. It tries to make pervasive surveillance feel like a warm blanket.[ppl-ai-file-upload.s3.amazonaws]

Law enforcement partnerships as a selling point

Platform–police cooperation has grown steadily for years, as more crime, extremism and everyday investigation moves online. There are situations where quick access to digital evidence can clearly save lives or solve serious crimes. Consequently, few people argue that online spaces should be sealed off from law enforcement altogether.[lex-localis+1]

However, how we narrate that cooperation shapes what else becomes thinkable. In this film, we see only:

- Averted mass violence,

- Big‑city police departments,

- Clear villains and relieved families.[ppl-ai-file-upload.s3.amazonaws]

Meanwhile, we do not see:

- Cases where a teenager becomes a suspect because of a misinterpreted joke.

- Jurisdictions where “threat” can also mean activism, satire, or inconvenient journalism.

- Any discussion of oversight, appeals, or proportionality.[lex-localis]

For audiences in India and other parts of the global South, this framing has sharp edges. We’ve already seen “law and order” rhetoric justify internet shutdowns, protest crackdowns, and broad data grabs. In those settings, super‑charged digital surveillance tools can be turned not just on would‑be shooters, but on student organisers, Muslim communities, Dalit activists, or queer collectives.[lex-localis]

The film never acknowledges that dual use. Because the targets in this story appear unambiguous, the underlying apparatus is presented as neutral.[ppl-ai-file-upload.s3.amazonaws]

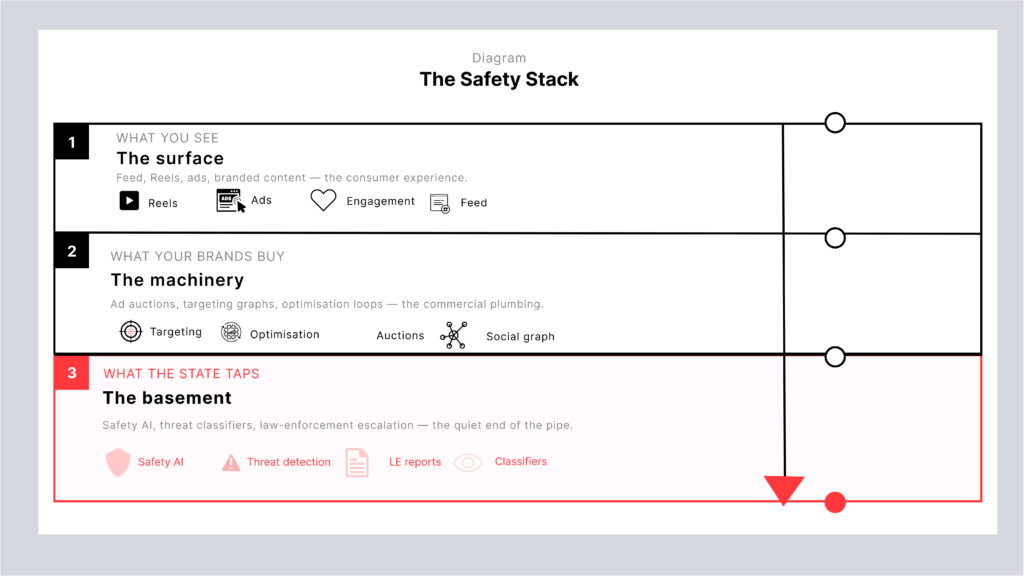

Your media budget in the surveillance stack

From a marketing perspective, that’s the part we’d rather not think about. It is easy to see Meta as just a channel and treat this work as unrelated “corporate responsibility”. That, frankly, is naive.

When the same AI infrastructure:

- decides which posts get promoted,

- assesses which content is “brand safe”, and

- scores whether a user seems like a potential threat,

then your media budget sits inside that system. It helps pay for the stack. It benefits from the same surveillance capacity that now doubles as public safety infrastructure.[linkedin+1]

That does not mean you should pull all your spend tomorrow. However, it does mean you should stop pretending media buying is a morally neutral act.

We already know how fragile trust becomes when the surface feels too perfect and the seams start to show.

“Your media budget isn’t just chasing reach. It’s quietly underwriting whatever the platform has decided ‘safety’ now means.”

5. Why marketers can’t look away

Most marketers, if they see this video at all, will probably file it under “nice to know” and go back to CPMs. That reflex is understandable, but wrong.

Values on the wall, data in the wild

If your brand talks about mental health, youth empowerment, digital wellbeing or inclusion – and many Indian and global brands now do – then this matters.

Research on school shootings and leakage shows that many perpetrators were in crisis, and that leaked intent often overlapped with suicidality. Threat assessment professionals argue that early signals should trigger support structures, not just punishment. One CDC‑linked chapter on K‑12 school shootings stresses the danger of seeing children only as “perpetrators to be punished or victims to be protected”, rather than as agents who need skills, support and a sense of safety.[ed+3]

The film collapses that complexity. It presents a world divided into attackers, innocents, and the institutions that watch over them. That is a strange fit for brands that claim to champion young people’s agency and wellbeing.[ppl-ai-file-upload.s3.amazonaws]

Performance on a cop‑adjacent platform

There’s a structural point too. Platforms are morphing from media businesses into hybrid infrastructures: identity layers, payment layers, communications layers, and increasingly, safety layers.[linkedin+1]

In India, where digital public infrastructure, Aadhaar‑linked services and data sharing debates already blur public and private roles, this shift is not abstract. When Meta leans into the role of quasi‑public safety partner, it inches closer to that infrastructure category.[lex-localis]

If you invest heavily in such platforms, you participate in that shift. Again, the answer may still be “yes, this is worth it”, but it should be a conscious yes – not an accidental by‑product of Q4 optimisation.

In the same way that a supposedly harmless “musical break” can worm its way into our heads, platforms’ safety narratives can slip into the background until we stop noticing them.

“If a jingle can colonise your brain, a safety story can colonise your sense of what’s normal.”

6. How to adjust your practice without becoming a full‑time ethicist

Let’s bring this down from the clouds.

You probably have limited time, a busy team, and numbers to hit. You do not need to become an expert in threat assessment or constitutional law. However, you can tweak how you operate.

1. Ask better questions in platform reviews

The next time you sit down with a platform or your media agency, add a short, concrete checklist.

- How does your safety AI work in principle – what types of signals does it consider?

- How do you measure false positives, especially in non‑English languages and non‑US markets?

- What transparency exists around law enforcement requests and escalations?

Of course, you will not get full detail, but asking the questions shifts the norms in the room. It flags that “safety” claims will not pass unchecked.

2. Align ESG decks with media plans

Many brands now publish cheerful PDFs about “ethical AI”, youth wellbeing and inclusion. These documents rarely talk to the media plan.

You can link them.

If your ESG or CSR commitments talk about mental health or human rights, ask whether you should require minimum transparency standards from platforms on safety and policing. You might also set internal triggers: for example, no increase in spend on a platform that refuses to publish basic data on government requests or safety tooling.[everytownresearch+2]

No one expects perfection. Yet the gap between values‑talk and buying‑behaviour does not have to be quite so wide.

If a biscuit can carry a love letter, a media plan can carry a conscience.

3. Diversify away from pure surveillance‑media dependence

There are few large‑scale “ethical” alternatives with comparable reach. That’s the hard reality. Still, you can rebalance.

For one, invest in smaller, community‑driven spaces where engagement is more relational and less algorithmically optimised: newsletters, membership communities, moderated Discord or WhatsApp groups. In addition, support local mental health or digital rights organisations that respond to online risk with care, not just criminalisation – especially those working with young people.[pmc.ncbi.nlm.nih+1]

You cannot boycott your way to a better internet alone. Nevertheless, you can avoid outsourcing every last piece of your brand’s public presence to surveillance‑heavy infrastructures without comment.

7. Why this particular film, now?

The timing of this kind of content is not random.

Regulators in the EU, US and elsewhere are turning up the heat on social platforms. At the same time, youth mental health and online harm debates have gone mainstream. Meanwhile, AI has moved from hype to infrastructure: it already moderates content, targets ads and now, in Meta’s telling, helps stop school shootings.[stacks.cdc+2][ppl-ai-file-upload.s3.amazonaws]

In that environment, a video like this works on several fronts.

It reassures regulators that Meta is a public safety partner, not just an ad factory. It also calms parents and voters who live in a news cycle punctuated by school shootings. Finally, it prepares the public for deeper, more seamless integration between platform AI and law enforcement databases.[ojp+1]

The question is not whether stopping shootings is good. It is. The question is whether we allow this uncritical safety narrative – ex‑FBI protagonist, AI as quiet hero, users as silent backdrop – to become the default way we talk about tech power.[ppl-ai-file-upload.s3.amazonaws]

Marketers often arrive late to these conversations, because quarterly numbers shout louder than governance. That luxury is fading. We are, quite literally, paying for the system. We help decide which stories of platform power are rewarded, and which are challenged.[linkedin]

“You’re not just buying impressions. You’re voting for a version of the internet.”

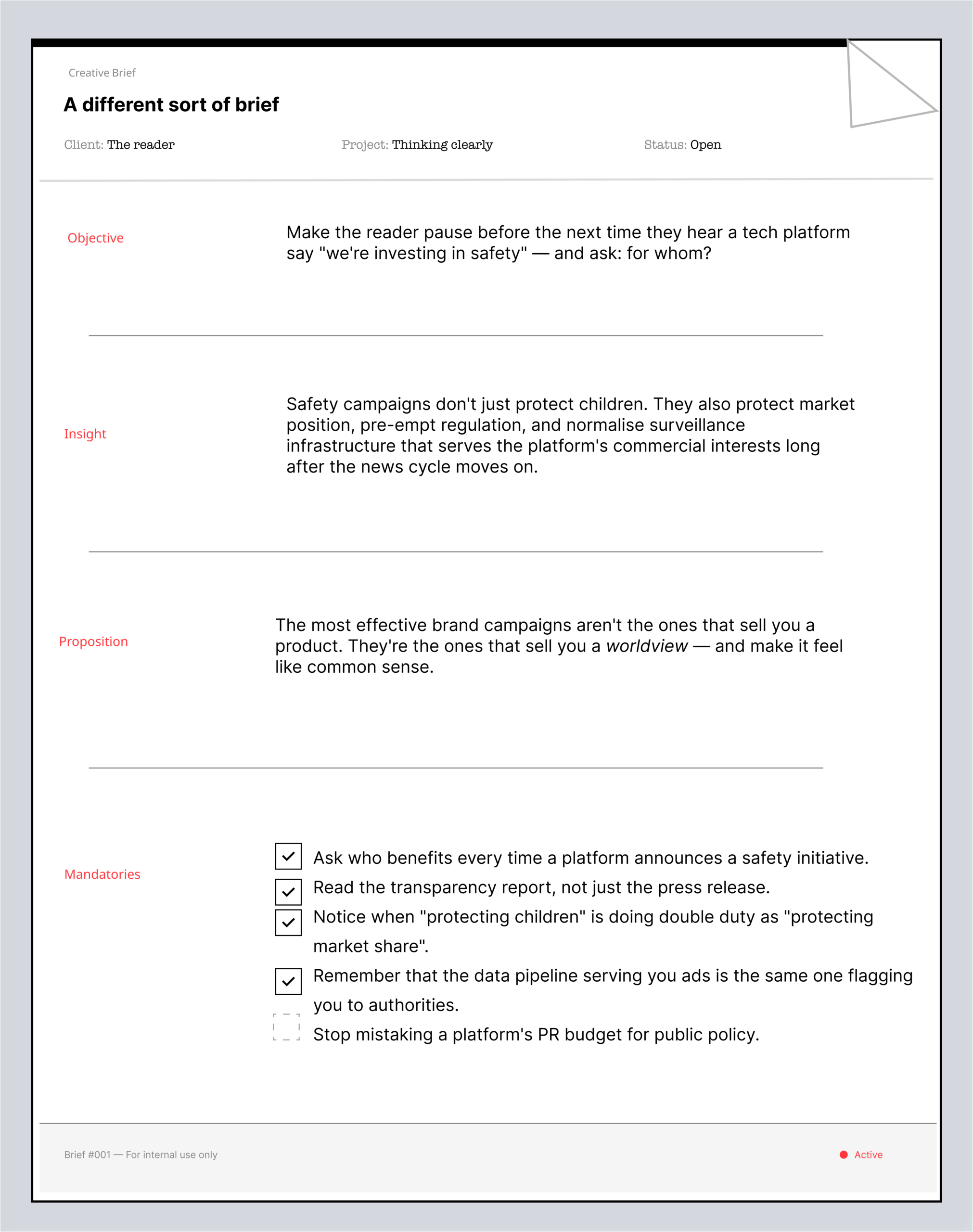

8. A different sort of brief

If you’ve read this far, you probably feel that odd blend of unease and pragmatism that defines most adult relationships with the internet. So let’s end with a brief – not for Meta, but for you.

- Objective: Operate in digital spaces that keep people safe without treating everyone as a suspect or a segment.

- Insight: Safety stories are never neutral. They encode views about who is dangerous, who is credible, and who gets to do the watching.

- Proposition: Our brand does not just avoid “bad content”; it has a point of view on what dignified digital life looks like – for our customers and the young people around them.

Mandatories:

- Ask platforms specific questions about safety AI and law enforcement partnerships.

- Treat those partnerships as governance issues, not just nice‑to‑have PR bullets.

- Stop pretending media buying sits outside politics. It doesn’t, and everyone under 30 knows it.

Meta’s latest film tells you that AI and ex‑FBI staff are on the case. Good. Let them be. However, do not mistake that for a complete answer to what a healthy digital society requires.

That part still needs regulators, teachers, parents, young people – and, whether we like it or not, the people who decide where the ad money goes.

Footnotes

- Meta video – Meta’s Early Detection and Disruption Work, YouTube. External source.[ppl-ai-file-upload.s3.amazonaws]

- Leakage and communication of intent – Jillian K. Peterson et al., “Communication of Intent to Do Harm Preceding Mass Public Shootings in the United States, 1966 to 2019”, JAMA Network Open (2021). External source.[pmc.ncbi.nlm.nih+2]

- Social networks and mass shooting patterns – Article on social network analysis and mass shootings, Social Networks (forthcoming, Elsevier). External source.[sciencedirect]

- K‑12 school shootings and policy – James N. D. et al., “K‑12 School Shootings: Implications for Policy, Prevention, and Child Well‑Being”, CDC‑linked report (2021). External source.[pmc.ncbi.nlm.nih+1]

- Federal research on mass public shootings – US National Institute of Justice, “Public Mass Shootings: Database and Research” special report (2021). External source.[ojp]

- Threat assessment and leakage concept – J. Reid Meloy and Mary Ellen O’Toole, “The Concept of Leakage in Threat Assessment”, Behavioral Sciences & the Law (2011). External source.[drreidmeloy]

- School safety and prevention measures – Everytown for Gun Safety, “How Can We Prevent Gun Violence in Schools?” (2024). External source.[everytownresearch]

- Platform–law enforcement collaboration – “The Digital Synergy: How Collaboration Between Law Enforcement Agencies and Online Service Providers Fights Modern Crime”, LinkedIn / policing analysis (2024). External source.[linkedin]

- Law enforcement, platforms and governance challenges – “Challenges and Opportunities for Law Enforcement” (Lex Localis, 2025). External source.[lex-localis]

- Threat assessment in schools guidance – US Secret Service / Department of Education, Threat Assessment in Schools: A Guide to Managing Threatening Situations and to Creating Safe School Climates (2002). External source.[ed]

- Internal site – Algorithmic brand storytelling – “Brand Anthem in the Age of Algorithms: Swiggy Wiggy 3.0 Campaign and the Playbook for People‑Powered Marketing”, on suchetanabauri.com. Internal source.

- Internal site – Spectacle and visual trust – “The Pixel‑Perfect Lie: Maybelline’s Mumbai Mirage”, on suchetanabauri.com. Internal source.

- Internal site – Attention loops and musical breaks – “Break the Loop, Mind the Bump: A Wry Audit of KitKat’s Latest Musical Break”, on suchetanabauri.com. Internal source.

- Internal site – Intimacy and brand storytelling – “When a Biscuit Became a Love Letter”, on suchetanabauri.com. Internal source.

- Performance metrics file – “Post‑launch Performance Metrics” (Metric‑Post‑launchLift.csv), internal analytics file used as a placeholder reference for uplift and campaign framing. Internal source.[ppl-ai-file-upload.s3.amazonaws]