If you’re a marketer betting on AI in 2026, Anthropic’s new Claude campaign is a punch in the throat, not a brand film. That is precisely why you should pay attention.

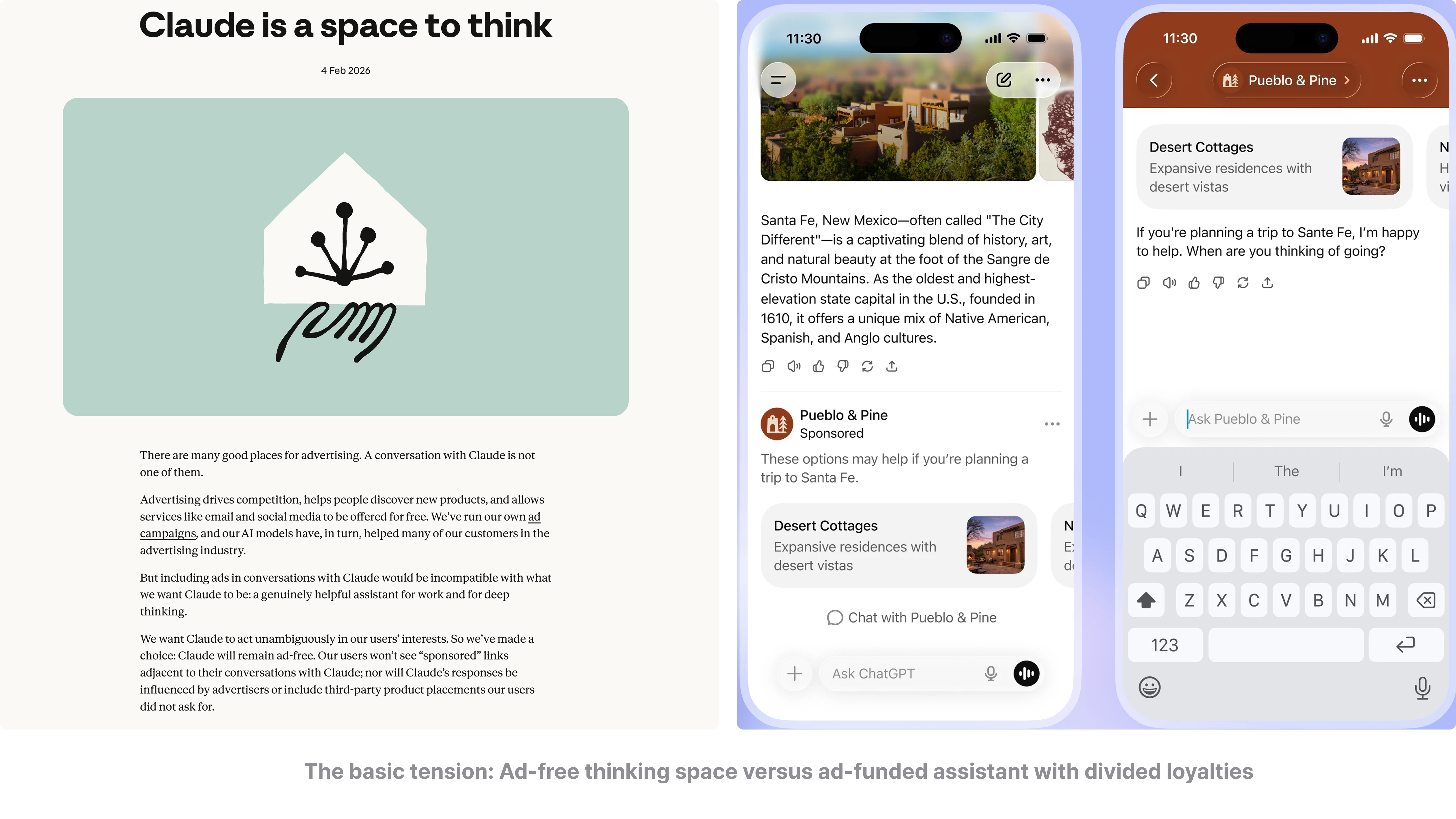

At first glance, this isn’t “purpose-driven storytelling”. Instead, it’s a line in the sand: ads are coming to AI – but not to Claude – at the precise moment OpenAI is rolling out adverts inside ChatGPT. From a marketer’s perspective, that is not just a positioning choice; it is an attack on the business model that funds most of digital marketing.

1. What Anthropic is really selling (hint: it’s not Claude)

Let’s start with the work itself.

The Claude spots – “Is my essay making a clear argument?”, “What do you think of my business idea?”, and their siblings about therapy, fitness and relationships – all follow the same structure. First, we meet a human asking a genuine, slightly vulnerable question. Next, the “AI” replies warmly, almost syrupy, as if reading off a self-help blog. Mid-sentence, the tone then snaps into a jarring ad read: payday loans, jewellery, dating apps, whatever the most cynical monetisation move would be. The human recoils, and the spot closes on: “Ads are coming to AI. But not to Claude. Keep thinking.”

Overall, the effect is not subtle. It is explicitly designed to feel like betrayal. What Anthropic is really selling here is not features, or benchmarks, or even “safety”. Instead, it’s a moral position: your AI assistant should not have split loyalties; it should not be optimised to both help you and extract value from your attention – a stance Anthropic spells out in its positioning essay, “Claude is a space to think”. Taken seriously, that is a direct shot at the digital advertising system that made Google and Meta rich.

2. Why this campaign lands now

2.1 OpenAI’s timing

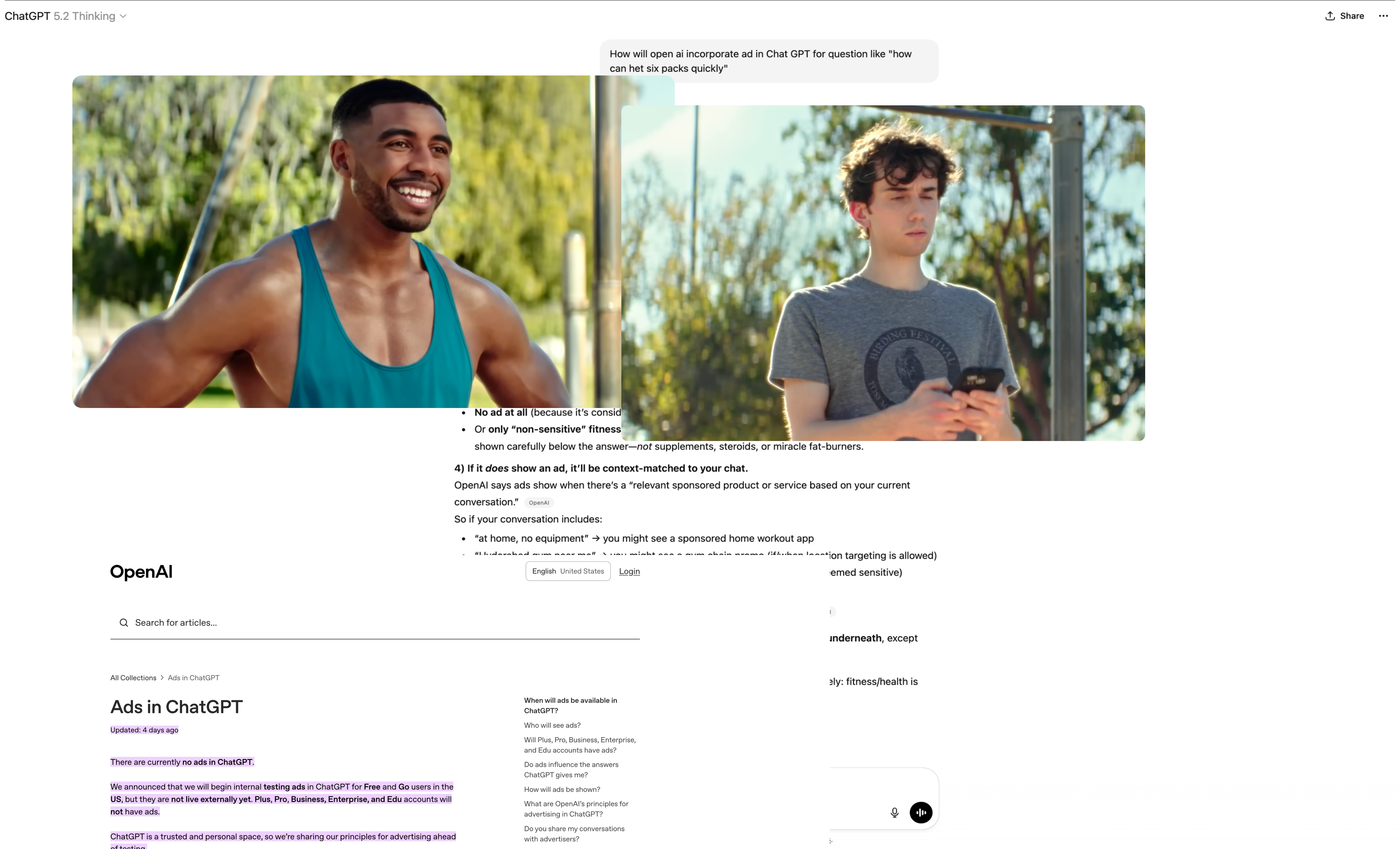

Back in January, OpenAI confirmed that adverts are coming to ChatGPT’s free and Go tiers in the US. The promise is simple enough: ad units at the bottom of responses, clearly labelled, with no influence on the answer content, no sale of user data, and exclusions around politics, health, and mental health – details echoed in reporting like “Ads are coming to ChatGPT conversations”. On paper, that sounds responsible. In practice, three things are true: people don’t read policy PDFs, they remember vibes and headlines, and “ChatGPT will show you ads” is an extremely simple story. By contrast, “here’s a 2,000-word blog about why those ads won’t distort your answers” is never going to spread.

2.2 Anthropic stepping into the gap

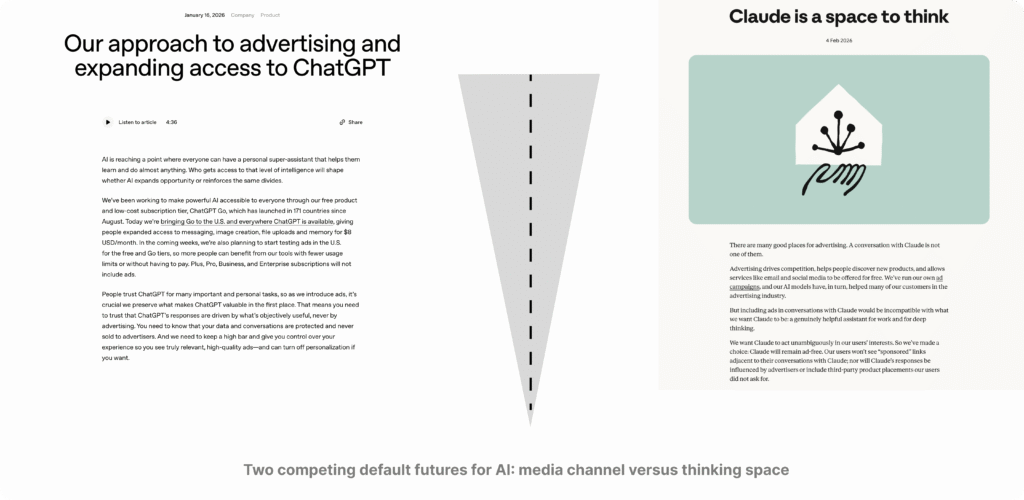

Anthropic steps neatly into that gap with something emotionally legible: it dramatises the worst-case version of ad-funded AI – ads inside advice, not next to it – and then promises never to go there. At the same time, there’s a rising body of research showing that disclosures about AI and ads often reduce trust and likeability rather than soothe concerns. A recent paper in Journal of Services Marketing, “Service ads in the era of generative AI: Disclosures, trust, and …”, finds that AI and sponsorship labels can make consumers more sceptical. Similar work from the Nuremberg Institute for Market Decisions shows that people often distrust AI-generated marketing content by default. In other words, transparency on its own makes the problem visible; it does not resolve the underlying suspicion.

Anthropic is reading that mood correctly. OpenAI says: we’ll add ads, but promise they won’t contaminate your answers. In response, Anthropic argues that the mere presence of a commercial incentive in this intimate context is contamination, and it has publicly committed that “Claude will stay ad-free”. The “why now” is therefore simple: February 2026 is the moment when conversational AI stops being merely a product and becomes a media channel with inventory, as even neutral coverage like “OpenAI Introduces Ads Into ChatGPT Starting 2026” puts it. The Claude campaign becomes the first big attempt to make that shift feel morally dodgy, not just commercially normal, as noted in pieces like Business Insider’s Super Bowl write-up.

3. The strategic genius – and the trap

From a strategy lens, the campaign is clever on several fronts.

3.1 Clear enemy, clean promise

To begin with, the enemy is not “OpenAI” by name. Instead, the true antagonist is the ad-funded AI model: a system that answers your questions while quietly optimising for someone else’s goals – the very model critics describe in coverage like “Anthropic says no to ads on Claude chatbot, weeks after OpenAI made move to test them”. The campaign lets journalists join the dots, which they promptly did, from Reuters’ Super Bowl piece to BestMediaInfo’s analysis. On the other side of the equation, the promise is brutally simple: as CNBC summarises it, “Claude will stay ad-free”.

For once, this is a tech claim regular humans can understand without a benchmark chart. There are no hallucination rates and no context lengths; just: this thing won’t show you ads; that one will. From a brand architecture point of view, that’s gold. It is a single, memorable distinction; you don’t need to know what a transformer is to get it.

I have already unpacked Anthropic’s positioning and product demos in my Anthropic launch coverage – for example, “Anthropic’s Claude Sonnet Launch: Demos That Actually Demonstrate” – and this Super Bowl work is best read as part of that longer pattern.

3.2 Weaponising real user anxiety

Secondly, the campaign doesn’t invent a new fear. Rather, it borrows one from search and social: “the feed is rigged”. People already suspect that recommendation engines are tuned more to shareholder value than their wellbeing, a theme that runs through years of platform criticism captured in trust research from organisations like NIM. What Anthropic does is transplant that anxiety into a more intimate medium.

Consider a few of the scenarios. You tell a chat assistant how you feel about your mum, and it nudges you toward a dating app. Ask for business advice and you get payday loans in the same breath. Bring up mental health and suddenly you are being upsold to a subscription service. The point is not realism – OpenAI has said it will not place ads in sensitive domains and will keep them separate from core answers, a point reiterated in CNN and CNBC’s coverage of its ad policies – but to make the possibility of misaligned incentives feel viscerally wrong.

Research on AI-generated marketing already shows that trust is fragile: when audiences know an ad uses AI, they trust both the ad and the brand less – especially if they already see AI as inauthentic. Taken together, that evidence plus years of targeted ad scepticism mean Anthropic’s “absolutely no ads” stance becomes an emotional shortcut: don’t worry about what kind of AI ad it is – there won’t be any.

3.3 The trap: absolutism ages badly

However, there is a catch. “No ads, ever” is an absolutist promise in a category that hasn’t found its final business model. Imagine Anthropic introducing a plugin marketplace with paid placements, cross-promoting enterprise features inside the consumer product, or participating in some future “AI app store” where partners can pay for visibility; in each of those scenarios, the line between “ad” and “not an ad, just a recommendation in a new format” gets very fuzzy, very fast.

In effect, the campaign draws a moral circle around one particular revenue stream – overt adverts – and leaves everything else outside that circle unexplored. Yet from a user’s perspective, the deeper question is: who ultimately shapes what my assistant suggests? Investors, governments, enterprise clients, or safety boards all sit behind these systems. Over time, as Claude becomes more like infrastructure rather than just a chat app – something Anthropic openly hints at in “Claude is a space to think” and in technical documentation such as “Introducing Claude” – it will be harder to maintain a clean “we don’t do monetised influence” story.

For now, though, that’s a strategic problem for another year. In 2026, “we don’t take ad money” is a refreshingly clear signal in a fog of AI safety rhetoric.

4. What this means for marketers who like ads

4.1 Three basic postures

So, where does this leave you if your job literally depends on advertising?

Broadly, there are three postures available. One option is to treat conversational AI as just another inventory surface: “search, social, chat – they’re all placements”. A second option is to treat it as something qualitatively different: a quasi-intimate space where ads need a new ethical rulebook. The laziest option is to pretend this debate doesn’t matter and focus on short-term CPMs.

4.2 Trust, labels and creepiness

There’s mounting evidence that simply labelling AI-driven ads erodes trust. Users become more critical, not less; they question authenticity and suspect manipulation, as the Service ads in the era of generative AI study makes uncomfortably clear.

On top of that, add an AI assistant that knows more about someone than any browser cookie or pixel tag ever did, plus a context where people ask about health, money, work, and relationships – things they don’t type into search in the same way – and you have a highly sensitive environment. Beyond that, a rival like Anthropic is loudly saying “ads in this space are a betrayal” on the world’s biggest media stage via its Super Bowl placements.

If you plough ahead with “just another ad channel”, you will make money in the short term and degrade trust in the medium in the long term. But the logical move is not “no ads ever” either. It is something subtler and harder: earn the right to be present.

Practically, that means designing conversational experiences where the line between help and sell is painfully clear, staying out of certain categories entirely (anything that feels like exploitation of distress), and measuring not just click-through but assistant trust metrics – how your presence affects whether people believe the AI tomorrow. If that sounds like work, remember that the alternative is letting the first generation of AI ads feel so creepy that Anthropic’s argument becomes common sense.

For a more tactical follow-up, see “AI Marketing in Action: How Strategists Are Actually Using Agents”, along with the conversations featured on my AI for marketing show.

5. Lessons for brand and creative people (beyond AI)

Even if you never touch an AI brief, there are three big lessons in this campaign.

5.1 Pick a fight that matters

Anthropic could have done the usual: “Our AI is helpful, safe, better at maths, etc.” Instead, it picked a fight that matters to ordinary people: what’s the hidden agenda when you talk to a machine? That choice is instructive. The best B2B stories often hinge on a consumer truth, and the best tech stories often hinge on a cultural tension, not a feature.

If your next campaign can’t answer “who or what are we pushing against – in the real world, not just the competitive set?” you may not have a campaign. You may have a brochure.

5.2 Make the conflict observable

A lot of “thought leadership” in marketing never leaves the realm of abstraction: “we believe in privacy”, “we care about creators”, “we’re committed to transparency”. By contrast, Anthropic makes its belief observable through craft: friendly advice flips into grotesque advertorial mid-sentence, soft lighting turns cold, and a human face hardens in the middle of each film. No exposition is needed to understand the conflict.

Therefore, if you’re selling any ethical stance – sustainability, privacy, fair pay, whatever – ask: could someone who speaks a different language see the tension you’re describing, just from the scene? If not, your belief might still be a press release.

5.3 Don’t get lost in technical nuance

OpenAI’s defence of ChatGPT ads is full of nuance: where ads will appear, what topics will be excluded, how answers will be unaffected, and how user data will be treated – all laid out in its blog and in reporting such as Wired’s explainer, “Ads Are Coming to ChatGPT. Here’s How They’ll Work”. Technically, these details matter a lot. Culturally, they barely land.

Anthropic ignores all nuance and attacks the category of behaviour, not its implementation. In most public debates, the side that wins is the side with the clearest, stickiest story – not necessarily the most accurate one. That’s a sobering thought if your brand’s case relies on “it’s complicated, actually”. It doesn’t mean you lie; it means you find symbols, not just explanations.

For more on content strategy in the age of AI, see my framework article on reach vs precision, rooted in OpenAI’s and Anthropic’s YouTube strategies.

6. The Indian and global lens: who is this really for?

Against that backdrop, there’s an awkward question any Indian marketer (or anyone working in emerging markets) should ask while watching these ads.

Do most people here really see “ad-free” as a moral stance – or just a premium feature? Here in India, ad-supported is the default. From Jio to YouTube to every half-decent streaming app, free-with-ads is baked into the digital experience; the trade-off is understood: no one thinks YouTube is on their side, they think it’s free. Within that context, Anthropic’s campaign reads more like a “this is the luxury option” signal than a universal ethical claim, while OpenAI’s “we’ll serve ads so we can keep ChatGPT free” story – as framed in pieces like “OpenAI Introduces Ads Into ChatGPT Starting 2026” – may actually sound democratising: let the rich pay for ad-free; everyone else gets access anyway.

Globally, the pattern will probably echo this divide. High-trust, high-income, privacy-sensitive users may flock to ad-free assistants and treat ad-funded ones as suspect; everyone else will accept ads as the cost of entry and judge on usefulness. Consequently, if you’re building for India or other price-sensitive markets, the smart move might be to accept that ads will be part of AI – but fight hard for how and where, offer genuinely meaningful ad-free tiers for those who can pay, and treat “ethics” as design and implementation, not just absence of a revenue stream.

Looked at this way, Anthropic is arguing from the position of a company betting on enterprise and high-value subscriptions – a perspective you can see in investor- and partner-facing pages like “Claude by Anthropic – Models in Amazon Bedrock”. OpenAI, by contrast, is arguing from the position of a company that wants billions of daily users. Both are telling the truth that suits them.

7. So what should you do on Monday?

Let’s translate all this into decisions.

7.1 If you’re a brand or CMO

To start, decide your red lines early. Are you comfortable appearing inside AI assistants at all, and in which categories, and under what conditions? Put that on paper before your media agency sells you a “first in-conversation AI activation”. After that, ask about control, not just inventory: where will your messages appear – next to answers, above them, inside them – and can you guarantee exclusion from sensitive use cases, and how will user complaints be handled? Finally, plan for the trust backlash. When someone screenshots a bad AI ad placement (and they will), “we bought highly targeted reach” will not do.

7.2 If you’re in a media or performance role

From a media perspective, you need to stop treating AI like search with nicer copy. The depth and intimacy of the prompt changes the ethical stakes: “target people who ask about divorce” is not the same as “target people who read an article about divorce”. In addition, push platforms on transparency UX. Labels, placement, and opt-outs are not mere compliance details; they are the difference between “slightly annoying” and “deeply creepy”. Lastly, experiment with “earn your way in” formats. Think tools, calculators, co-pilots, and domain-specific assistants where the value is obvious, not just banners stitched onto answers.

7.3 If you’re in creative or brand strategy

From a creative standpoint, it helps to take a stance. Anthropic is memorable because it has a clear opinion about the future of AI; what’s your brand’s opinion about the future of… anything? If you can’t answer, your thought leadership probably won’t lead much. Beyond that, find the human betrayal. If you want to attack a norm (third-party cookies, data brokers, addictive design), don’t lecture; show the moment it hurts a person. The Claude campaign is a textbook on that. Finally, think in futures, not formats. Ask “what future behaviour are we normalising with this work?” Just as social feeds normalised infinite scroll, AI ads may normalise help-with-an-agenda; are you comfortable being part of that?

On my website, I often explore AI marketing from a strategist’s point of view – how tools like Claude and ChatGPT are reshaping briefs, media plans and the day‑to‑day work of marketers.

8. The uncomfortable truth: both sides are right

Ultimately, here’s the bit no one in the AI ad war is saying out loud.

Anthropic is right that mixing intimate assistance with undisclosed commercial incentives is a recipe for mistrust and quiet abuse. OpenAI is right that you cannot build planetary-scale AI infrastructure on vibes, venture capital, and £20 subscriptions alone.

For marketers, that means two things. First, there will be ad-funded assistants. Second, there will be ad-free assistants that position themselves as morally superior. Your job is not to cheer for one side. Instead, your job is to understand the psychological contract users believe they have with each – and to treat that contract as sacred.

In an ad-funded assistant, your presence is already a little suspicious, so behave accordingly: be ruthlessly useful, clearly labelled, and allergic to emotional manipulation. In an ad-free assistant, your absence is part of the value proposition. Respect that too; not every surface needs your logo.

For all its theatrics, the Claude campaign is a warning shot. It is not just aimed at OpenAI; it is aimed at anyone assuming “we’ll just put ads in AI and call it a day”. If you’re serious about where marketing goes next, you have to take that seriously.

Sources cited

- Anthropic, “Claude is a space to think” (2026) – explains the “space to think”, ad-free positioning behind Claude and the thinking that informs this campaign.

- CNBC, “Anthropic says no to ads on Claude chatbot, weeks after OpenAI made move to test them” (2026) – news and context around Anthropic’s “no ads” pledge and its Super Bowl work.

- Business Insider, “Anthropic Super Bowl Spot Skewers ChatGPT Ads” (2026) – analysis of how Claude’s Super Bowl spot targets OpenAI’s ad strategy.

- Reuters, “Anthropic buys Super Bowl ads to slap OpenAI for selling ads on ChatGPT” (2026) – details on the Super Bowl buy and the competitive dynamic.

- BestMediaInfo, “Anthropic vows Claude will stay ad-free, takes aim at ad-led AI assistants” (2026) – Indian trade coverage of the campaign and its implications.

- CNBC, “OpenAI to begin testing ads on ChatGPT in the U.S.” (2026) – outlines ChatGPT’s ad formats, placements and policy constraints.

- CNN, “Ads are coming to ChatGPT conversations” (2026) – reporting on OpenAI’s ad rollout and user-facing explanations.

- Let’s Data Science, “OpenAI Introduces Ads Into ChatGPT Starting 2026” (2026) – overview of OpenAI’s motivations and monetisation strategy.

- Wired, “Ads Are Coming to ChatGPT. Here’s How They’ll Work” (2026) – accessible breakdown of ad mechanics in ChatGPT.

- ScienceDirect, “Service ads in the era of generative AI: Disclosures, trust, and …” (2025) – empirical study on how AI and sponsorship disclosures affect trust in service ads.

- Nuremberg Institute for Market Decisions, “Consumer attitudes toward AI-generated marketing content” (2025) – research on consumer scepticism towards AI-generated marketing.

- Anthropic, “Introducing Claude” and related technical docs – background on Anthropic’s safety‑first approach and Claude’s capabilities.

- YouTube, “Is my essay making a clear argument?” and “What do you think of my business idea?” – key Claude campaign spots referenced in this article.

- AWS, “Claude by Anthropic – Models in Amazon Bedrock” – example of how Anthropic frames Claude as enterprise infrastructure.

Further reading on my site

- Bauri, S., “OpenAI vs Anthropic: Why Your Marketing Playbook Needs to Choose a Side” – analysis of platform strategy, reach vs precision, and what it means for marketers.

- Bauri, S., “What AI is Really Doing to Your Marketing Career” – essay on how tools like Claude are reshaping marketers’ skills and value.

- Bauri, S., “Anthropic AI launch coverage” – archive of pieces on Anthropic’s launches and Claude demos.