Why the most inspiring AI success stories might be setting up beginners for failure

On 15 January 2026, Google for Developers uploaded a video that has since accumulated over 1,200 likes and is gaining momentum across developer communities. With thousands of views and dozens of glowing comments, the polished narrative is resonating—and potentially misleading aspiring developers at scale. It tells the story of Aniket Chandekar, a UX designer from Hyderabad who learned Android development, built a smart home app called “Pulse,” and won Google’s Home APIs Developer Challenge—all whilst discovering the “magic” of Gemini AI as his teacher.

The production is immaculate: sweeping B-roll of Hyderabad and San Francisco, emotional music, an arc from struggle to triumph. The message lands with force: anyone can be a game changer. You just need your passion to create.

There’s only one problem. The timeline doesn’t add up—and the gap between what the video shows and what the research reveals could leave thousands of aspiring developers frustrated, disillusioned, and blaming themselves for failing to replicate an impossible standard.

The Two-Week Miracle That Wasn’t

At timestamp 1:20 in the video, Aniket states something specific: “I had this time limit of two to three weeks where I had to learn this new skill sets.” He’s referring to learning Android development as a complete beginner: picking up Kotlin (Android’s primary programming language), understanding development fundamentals, integrating the Google Home APIs, and implementing emotional AI features for his “Pulse” application.

He underlines his starting point at timestamp 1:53: “Coming from a UX design background, I didn’t have any development knowledge on how to build an Android application.”

Yet later in the video (2:20-2:22), he admits: “I’m still working on it. I’m running into some errors.” He continues (2:27-2:32): “Maybe at the end of this hackathon I would be able to complete the project and hopefully people will like it.”

Two to three weeks for skill acquisition. Zero development experience. An app he was still debugging mid-challenge. Challenge victory.

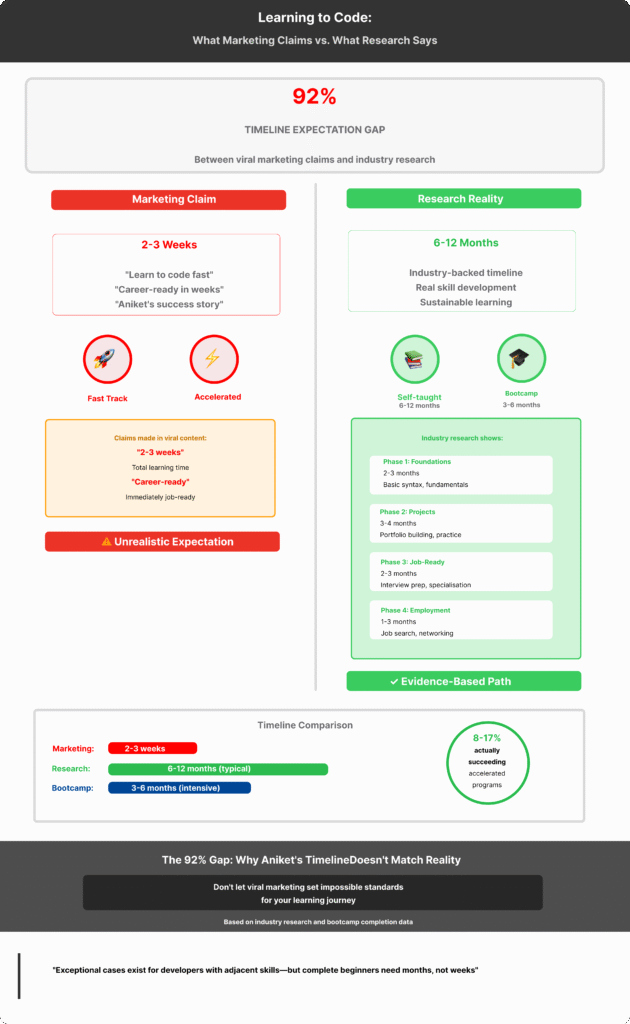

It’s the kind of timeline that makes coding bootcamps look glacial. It’s also, by every learning-timeline benchmark cited below, fundamentally unrealistic.

What the Research Actually Says

When you survey the landscape of coding education—from intensive bootcamps to self-taught developers to university programmes—a consistent timeline emerges:

- Coding bootcamps (structured, full-time, professionally guided): 3–6 months to job-ready competency

- Self-taught developers (part-time, using online resources): 6–12 months for basic proficiency

- Accelerated self-study (full-time focus without formal structure): 4–6 months for fundamental skills

Even these timelines assume you’re learning a single programming language with basic framework exposure. Android development adds further layers: architecture patterns like MVVM, API integration with Retrofit, database management (Firebase or SQLite), UI implementation in XML or Jetpack Compose, state management, and testing.

Kotlin also has a “steeper learning curve for complete beginners”, according to JetBrains’ comprehensive learning guide, and JetBrains’ own beginner pathway assumes months of consistent study.

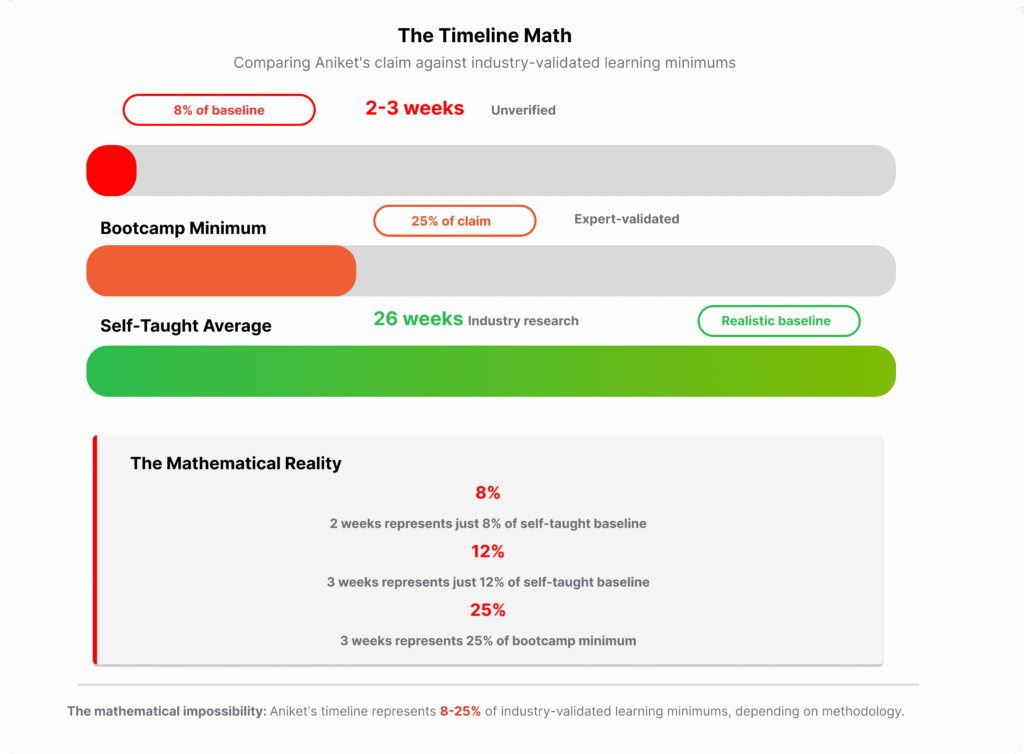

Put bluntly: even on the most generous reading (three weeks against the 12-week, three-month bootcamp minimum), Aniket’s learning window is 25% of the expert-validated minimum. Two weeks is about 17%. Against the more realistic six-month (roughly 24-week) self-taught baseline, it drops to roughly 8–13%.

That’s not “fast”. It isn’t even “accelerated learning”. By these benchmarks it is implausible, especially since the video itself shows him saying “I’m still working on it” mid-challenge.
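
For readers who want to check these percentages, here is a minimal Python sketch of the arithmetic. It assumes four weeks per month, the same convention as the 12-week bootcamp figure, and takes the lower bound of each range in the list above. The names `MINIMUM_MONTHS`, `share_of_minimum` and `share_ranges` are illustrative only, not taken from any source.

```python
# Back-of-envelope check: what share of each benchmark minimum do
# two to three weeks of study represent?
# Assumption: 4 weeks per month, matching the 12-week bootcamp figure.
WEEKS_PER_MONTH = 4

# Lower bound (in months) of each range cited in the research list.
MINIMUM_MONTHS = {
    "bootcamp": 3,
    "accelerated self-study": 4,
    "self-taught": 6,
}

CLAIMED_WEEKS = (2, 3)  # the "two to three weeks" stated in the video


def share_of_minimum(claimed_weeks: float, minimum_months: float) -> float:
    """Return claimed study time as a percentage of a benchmark minimum."""
    return 100 * claimed_weeks / (minimum_months * WEEKS_PER_MONTH)


def share_ranges() -> dict[str, tuple[float, float]]:
    """Map each benchmark to its (two-week, three-week) percentage pair."""
    low, high = CLAIMED_WEEKS
    return {
        name: (share_of_minimum(low, months), share_of_minimum(high, months))
        for name, months in MINIMUM_MONTHS.items()
    }


if __name__ == "__main__":
    for name, (low_pct, high_pct) in share_ranges().items():
        print(f"{name}: {low_pct:.1f}% to {high_pct:.1f}%")
```

Running it prints one percentage range per benchmark. Using a more precise 52/12 weeks per month shifts each figure down by a point or two, but it does not change the conclusion.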

Could someone with adjacent technical skills (a web developer learning mobile, or a UX designer with HTML/CSS/JavaScript experience) build a functional prototype in 2–3 weeks of intensive study? Possibly. But the video positions Aniket as having “no development knowledge”, which either misrepresents his background or conflates “basic functional prototype” with “production-ready application”. Either way, the narrative creates unrealistic expectations for the genuinely code-inexperienced audience it’s marketed toward.

The AI Learning Myth

The video positions Gemini AI as the primary enabler: “I just went to Gemini, hey, can you teach me as a 10-year-old? And then sort of laid out this whole plan” (timestamp 1:58-2:01).

It’s a seductive proposition. If AI can truly teach complex technical skills in compressed timelines, it democratises opportunity. No expensive bootcamps. No CS degrees. Just you, curiosity, and a chatbot tutor.

But dig into discussions among the practitioners who use these tools daily, and a different picture emerges.

In Reddit threads such as “Gemini is terrible at actual coding tasks”, developers report “poor code quality” that “falls significantly short of claims made by Google’s marketing team”. Common complaints include hallucinations (confidently wrong information), persistent errors despite corrections, and outputs that experienced developers immediately identify as flawed.

The catch? Beginners can’t spot these flaws. They lack the domain knowledge to verify whether Gemini’s explanation is accurate, whether the code follows best practices, or whether the architectural approach will cause problems later.

AI tools can work brilliantly for developers with foundations: they accelerate, augment, and automate routine tasks. But as primary pedagogical resources for complete novices, they’re fundamentally unsuited to the role. Learning requires not just answers but the ability to evaluate answer quality. Without that evaluation capability, beginners absorb AI hallucinations as facts, building flawed mental models that compound over time.

The Psychological Toll of Impossible Standards

Here’s where marketing narrative meets real-world consequence.

When Google amplifies a story suggesting that two to three weeks of AI tutoring enables production-grade app development, it creates an aspirational benchmark. Aspiring developers see Aniket’s achievement and think: If he did it in weeks, why am I struggling after months?

The answer isn’t insufficient passion, inadequate curiosity, or personal failing. It’s that the portrayed timeline fundamentally misrepresents the effort actually required.

Research on aspirational marketing warns that when brands “stray too far into the realm of the unattainable, consumers will lose interest”. More damagingly, unrealistic portrayals can lead to “feelings of inadequacy, anxiety, and depression” when people inevitably fail to match impossible standards.

For aspiring developers—already facing impostor syndrome, technical overwhelm, and steep learning curves—timeline compression in viral content doesn’t inspire. Instead, it demoralises.

What Google Could Have Shown Instead

The frustrating part? The actual story is still impressive.

A UX designer developing an interest in development, investing serious learning time over the challenge’s eight-week duration, using AI tools as a supplement to systematic study, building a functional prototype, and winning recognition from Google: that’s genuinely noteworthy. It demonstrates initiative, problem-solving, and strategic tool use.

But that story requires honesty about the timeline, acknowledgement of AI tool limitations, and transparency about the actual development process. It also requires showing code, discussing debugging challenges, and acknowledging the gap between “working prototype” and “production-grade application”.

Consider what authentic educational content might look like:

- Timeline transparency: “Over the challenge’s eight-week period, I dedicated intensive daily study to Android development, with the first two to three weeks focused exclusively on foundational skills through documentation and AI-assisted debugging…”

- Tool positioning: “Gemini helped when I got stuck on specific errors, but systematic learning came from Android documentation and structured tutorials…”

- Process visibility: “Here’s my GitHub repository showing the evolution from basic layouts to API integration, with all the dead ends and refactors along the way…”

- Limitation acknowledgement: “The submitted prototype demonstrated core concept feasibility. I’m still working through integration challenges, and full emotional detection features remain future development goals…”

This approach maintains the inspirational arc whilst providing the realistic scaffolding that helps others actually achieve similar outcomes. It also treats the audience as intelligent adults capable of handling complexity rather than as consumers needing oversimplified fairy tales.

The Comparison That Matters

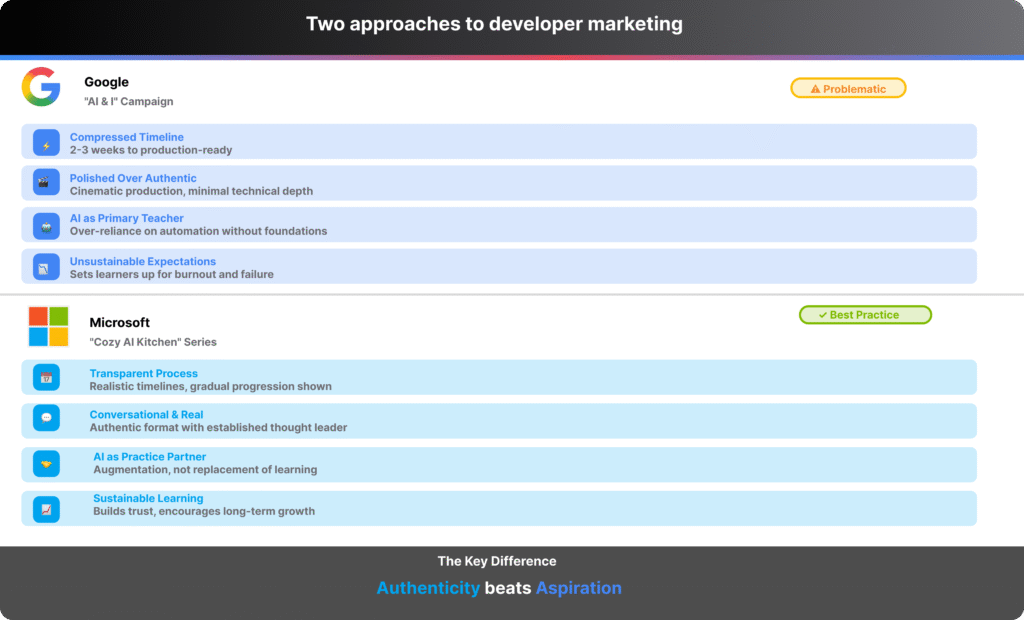

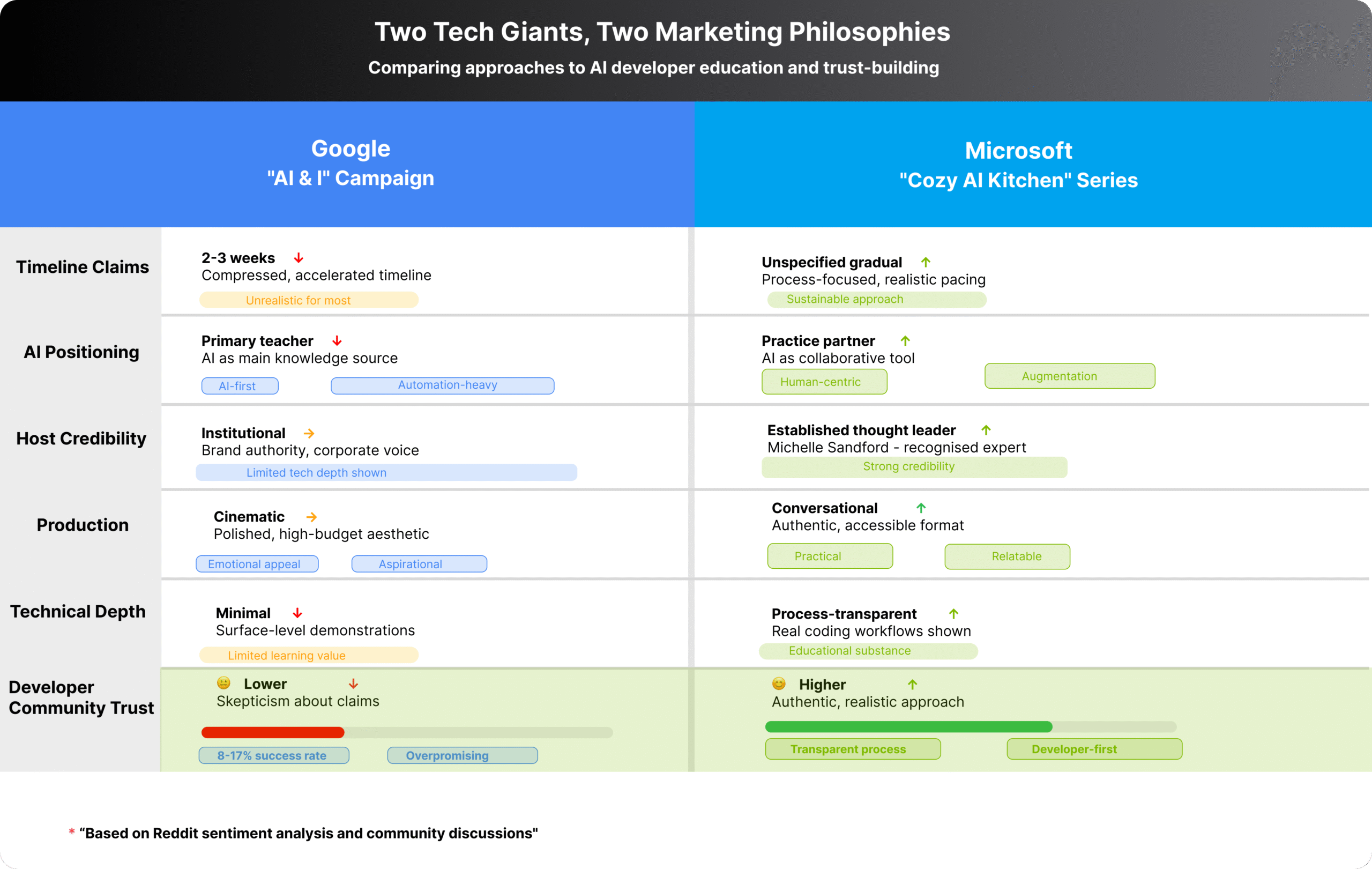

Just a couple of days before Google’s video dropped, Microsoft published an episode of their “Cozy AI Kitchen” series featuring Hans Obma, an actor known for Better Call Saul and WandaVision.

Hans discusses using ChatGPT to prepare for a Welsh-language TV interview. But here’s the key difference: he’s already multilingual (Spanish, French, German, Welsh, Russian). Crucially, he uses AI as a practice partner for rehearsal, not as a primary language instructor for a complete beginner.

The video explicitly states: “I created [my film project] purely without AI”—clear demarcation that core creative work remained human-driven, with AI used for distribution strategy and communication refinement.

Timeline? Unspecified gradual practice, not compressed weeks. Host credibility? John Maeda, Microsoft VP of Design & AI with established thought leadership. Production style? Informal 13-minute conversation prioritising substance over cinematic spectacle.

Microsoft’s approach demonstrates what developer marketing can look like when it “leads with code, education, and authenticity”. In contrast, Google’s approach demonstrates what happens when production values and aspirational narratives override realistic capability representation.

This contrast matters beyond marketing aesthetics. As I explored in my analysis of OpenAI’s documentary authenticity paradox, when tech companies prioritise emotional storytelling over technical transparency, they risk eroding the very community trust they seek to build.

What This Means for You

If you’re considering learning to code—whether with AI assistance or traditional methods—here’s what matters:

Expect 6–12 months for basic job-ready proficiency in most development domains. Full-time, intensive study can accelerate this. Conversely, part-time learning extends it. But weeks-to-competency timelines exist primarily in marketing content, not educational reality.

Use AI as a supplement, not a substitute. Tools like ChatGPT, Gemini, or GitHub Copilot are powerful aids for learners who already have foundations. However, they’re a poor replacement for systematic education that builds evaluation capabilities alongside coding skills.

Seek community validation over corporate testimonials. When assessing learning resources or success stories, prioritise peer reviews, forum discussions, and developers sharing actual code over polished promotional videos. The former builds realistic expectations; the latter sells products.

Measure progress against research-backed benchmarks, not viral content. If industry consensus says six months minimum and you’re at month three feeling inadequate, you’re not behind—you’re exactly on track.

The Responsibility Question

Google’s video has surpassed 1,200 likes and continues to grow. The comments glow with inspiration. As engagement accelerates, so does the reach of its compressed-timeline narrative. For every person it motivates, how many does it inadvertently mislead? As views accumulate, the scale of potential impact, both positive and harmful, multiplies daily.

The video isn’t technically false: Aniket genuinely won the challenge. But the framing choices (timeline emphasis, AI tool centrality, production polish prioritising emotional arc over technical transparency) create an aspirational benchmark divorced from replicable reality.

This matters because Google isn’t a random YouTuber sharing personal experience. It’s a trillion-dollar company marketing AI products at a time when the FTC is actively cracking down on deceptive AI claims. When Google says “anyone can be a game changer” whilst showcasing impossible timelines, it’s not just marketing: it’s potentially setting regulatory precedent for what constitutes misleading AI product representation.

The path forward isn’t eliminating inspirational content. Instead, it’s demanding authenticity within aspiration—timelines that acknowledge reality, tool positioning that admits limitations, and process transparency that helps audiences achieve comparable outcomes rather than mythologise unreplicable ones.

Google’s own DigiKavach campaign, which I examined previously, shows the alternative: it succeeded precisely because it balanced aspiration with actionable guidance rather than presenting impossible transformation narratives.

The Real Game Changers

Because when it comes to learning to code, the real game changers aren’t those who do it impossibly fast. They’re those who do it sustainably, build solid foundations, and help others navigate the actual (not imagined) path to competency.

And that story, told honestly, is worth far more than 1,200 likes.

For more critical analysis of AI marketing claims and authentic digital storytelling, explore my examination of when testimonials dress up as truth and how Claude Sonnet’s launch navigated the authenticity-hype balance.

Sources & Further Reading

Learning Timeline Research

- This is an IT Support Group, “Learn Programming: 3-12 Month Timeline Guide” (2025)

- Algocademy, “Learning to Code in 3 Months? Here’s Why That’s Unrealistic” (2024)

- Learn With Pride, “How Long Does It Take To Learn Coding? Detailed & Complete Timeline for Beginners” (2025)

- Reddit discussions: “Is it realistic to become proficient in coding within 3 months?” and “What is a realistic timeline to learn to code?”

Kotlin & Android Development

- JetBrains Education, “A Comprehensive Kotlin Learning Guide for All Levels” (April 2024)

- GeeksforGeeks, “Kotlin Android Tutorial”

- Android Developers, “Android Development with Kotlin – Lesson 1: Kotlin basics”

AI Tool Limitations & Developer Experience

- Reddit community discussions on Gemini’s coding capabilities: “Gemini is terrible at actual coding tasks” (July 2025) and “Gemini 3 is not as good as everyone is saying” (November 2025)

Marketing & Authenticity Research

- Forbes Communications Council, “Aspirational Marketing: Balancing Dreams And Reality In Brand Storytelling” (January 2025)

- Elite Business Magazine, “Marketing vs. delivery: Accurate representation & aspirational content”

- Salesforce Blog, “Honest — Not Aspirational — Marketing Is Key To Customer Satisfaction” (October 2023)

Developer Marketing Best Practices

- Daily.dev, “Developer Marketing Q&A: Lessons from Case Studies” (August 2025)

- EveryDeveloper, “How Tech Companies Undermine Their Content Marketing” (August 2025)

- RankRed, “Microsoft Marketing Strategy: 16 Proven Ideas in 2025” (September 2025)

FTC AI Compliance & Regulation

- Federal Trade Commission, “FTC Announces Crackdown on Deceptive AI Claims and Schemes” (September 2024)

- Lathrop GPM, “Transparency and AI: FTC Launches Enforcement Actions Against Businesses Promoting Deceptive AI Claims” (April 2025)

- Law of the Ledger, “You Don’t Need a Machine to Predict What the FTC Might Do About Your AI Product” (February 2023)

Narrative & Authenticity Research

- LinkedIn, “Why the Hero’s Journey Is a Poor Fit for Modern Tech Storytelling” (January 2026)

- MarTech Series, “We Forgot The Hero And It’s Killing Corporate Creativity”

- Trust.io, “Software Testimonials 101: Impact and Experiences Shared” (July 2024)

Primary Sources

- Google for Developers, “Aniket’s Story: AI & I” (January 2026)

- Microsoft Developer, “How Actors Can Use AI to Boost Their Careers, with Hans Obma | Cozy AI Kitchen” (January 2026)

- Microsoft Learn, “Mr. Maeda’s Cozy AI Kitchen” series

- Wales Online, “Hollywood actor learned the Welsh language and loves Wales”

Developer Ecosystem Analysis

- Educative, “Is Microsoft or Google the better ecosystem for developers?” (April 2025)

- VCI Institute, “Google vs. Microsoft: How Two Tech Giants Differ” (October 2024)

Related Articles

- Suchetana Bauri, “When Testimonials Dress Up As Truth: OpenAI Documentary Authenticity Paradox” (December 2025)

- Suchetana Bauri, “Google DigiKavach Campaign Analysis: When Television’s Detective Tropes Meet Digital Fraud Prevention” (August 2025)

- Suchetana Bauri, “Claude Sonnet 4.5 Marketing Analysis: How Anthropic Navigated the AI Launch Hype Cycle” (September 2025)