Welcome to January 2026. If you believe the glossy sizzle reels currently streaming out of Redmond, then the future of work has finally arrived. The experience promises to be effortless and seamless. To use Microsoft’s own rather excruciating marketing terminology, we have entered the era of “vibe working.”

The Seductive Pitch

The premise is undeniably seductive, particularly for the harried marketer or strategist staring down a blank Q1 deck. You simply type a vague intention into Excel (“analyse these sales trends”) and Agent Mode constructs a pivot table, identifies the outliers, and charts the growth.

Next, you open Word, mutter something about a “strategic realignment,” and the agent drafts a perfectly coherent, grammatically spotless three-page memo. Finally, you nudge PowerPoint, and it spins a narrative arc out of thin air, complete with on-brand visuals.

While it looks like magic, ultimately, it is theatre.

The Sleight of Hand

Indeed, we are witnessing a sophisticated sleight of hand. Essentially, Microsoft is selling us a vision of productivity that disguises a profound risk to the very thing that makes knowledge work valuable: the struggle of thinking.

Under the cover of “democratising expertise,” we are being invited to outsource our judgment to a machine that can simulate competence but cannot possess it.

“For the marketing industry, which relies entirely on insight, nuance, and the ability to distinguish a signal from the noise, this is an existential wager.”

Moreover, for the marketing industry, this is not just a new toolset. Because our work relies entirely on insight, nuance, and the ability to distinguish a signal from the noise, this is an existential wager. Furthermore, if you look past the curated demos to the actual data—some of it buried in Microsoft’s own research papers—the odds are not in our favour.

The Productivity Theatre

First, let’s strip away the marketing veneer. The core promise of the new Agent Mode in Microsoft 365—powered by OpenAI’s reasoning models and now, interestingly, Anthropic’s Claude—is that it handles the “busy work.” Ostensibly, it creates the first draft and builds the structure.

Yet, anyone who writes for a living knows that the “busy work” of drafting is where the thinking happens. In fact, it is in the wrestling with a sentence, the structuring of an argument, or the manual cleansing of a dataset that you discover what you actually believe.

The Illusion of ‘Vibe Coding’

Microsoft’s pitch relies heavily on the “Vibe Coding” narrative—the idea that coding has moved from syntax to intention. However, talk to actual software engineers in 2026, and they will tell you that vibe coding is a disaster for complex systems.

While it works for simple tasks, the AI often introduces subtle, structural rot when the codebase grows large. Critically, no human understands this mess well enough to fix it.

Now import that dynamic into marketing strategy. As I argued in Copilot Chat and the Authenticity Olympics, the pursuit of flawless, AI-polished communication often erodes the very authenticity that builds trust.

When Strategists Become Tourists

To illustrate, imagine a junior strategist using Word Agent to draft a competitor analysis. The agent scans the web, synthesises the data, and produces a report. It looks professional. Moreover, it sounds authoritative.

But the strategist hasn’t read the source material. They haven’t “inhabited” the data. Therefore, they become what Microsoft’s own research division calls “intellectual tourists”—visiting ideas without ever truly understanding the terrain.

“Microsoft’s own research warns: Workers risk becoming ‘intellectual tourists’—visiting ideas but never truly inhabiting them.”

Ultimately, when that strategist presents the findings, they are not an expert; they are a teleprompter reader for a robot. If a client asks a probing question (“Why did you prioritise this channel over that one?”), the strategist cannot answer.

In reality, the reasoning wasn’t theirs; it was a probabilistic determination made by a server farm in Virginia.

The “Ready for Review” Sleight of Hand

Crucially, Microsoft’s own demonstrations reveal the trap buried in plain sight. In the PowerPoint Agent demo, the voiceover states: “In moments, your idea becomes a clear, well-structured presentation, ready for review, editing, or further analysis. It’s easy to continue refining in the chat.”

On the surface, this sounds reasonable—even responsible. The AI creates the first draft; you refine it. Partnership, not abdication.

But here’s the problem: What does “review” mean when you didn’t build the underlying structure? Specifically, what does “refining” look like when you don’t understand why the agent chose this narrative arc over that one, or prioritised these data points over those?

“Microsoft calls it ‘ready for review.’ But how do you review what you didn’t reason through?”

The demos gloss over a fundamental asymmetry of knowledge. The agent has done the research. It has scanned your emails, read your SharePoint documents, pulled web sources, and applied its reasoning model to synthesise a narrative. You, the human, are presented with a polished output and asked to “refine” it.

Yet, you lack the context path—the intellectual journey the AI took to get there. You don’t know what it didn’t include, what sources it weighted more heavily, or which assumptions it baked into the structure. You are, once again, a tourist being handed a guidebook written in a language you can’t quite read.

Consequently, “refining” becomes aesthetic tinkering (changing fonts, tweaking bullet points, adjusting colours) rather than substantive editing. Substantive editing requires you to understand the why behind the structure, and that knowledge was never transferred to you. It lives in the probabilistic weights of a model you can’t interrogate.

This is, in essence, reviewing without reasoning. And the more you rely on this workflow, the less capable you become of building the structure yourself. The “first draft” becomes the only draft you know how to produce—with the AI. The muscle memory of structuring an argument from scratch atrophies.

The ROI Mirage

Corporates love a good ROI number, and Microsoft has provided a significant one: a 353% return on investment over three years for small businesses. Naturally, it is the kind of figure that gets budget approvals signed immediately.

However, one must read the fine print. Specifically, these figures come from “composite organisations”—fictional entities constructed from interviews, effectively modelled scenarios rather than hard, empirical audits. Therefore, they are advertisements disguised as econometrics.

The Reality of ‘Pilot Purgatory’

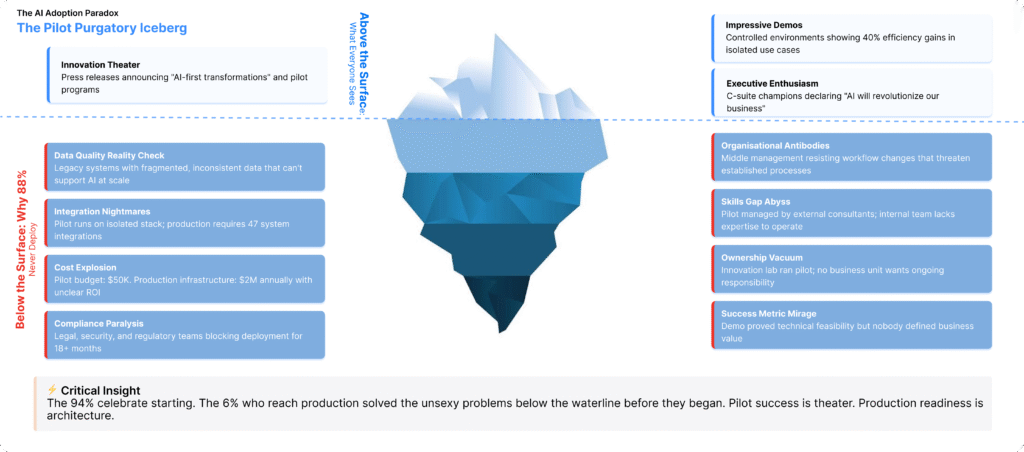

Meanwhile, the reality on the ground is far stickier. Despite the hype, Gartner’s 2025 survey data reveals a stark paradox: while 94% of IT leaders claim measurable benefits, only 6% have managed to roll Copilot out globally. The vast majority, 72%, are stuck in “pilot purgatory”.

“94% of IT leaders claim measurable benefits, yet only 6% have rolled Copilot out globally. The vast majority, 72%, are stuck in ‘pilot purgatory.’”

Why is this the case? Because in the real world, the “time saved” metric is a fallacy. For instance, if an agent saves you two hours drafting a report, but you spend three hours verifying its accuracy, correcting its hallucinations, and rewriting its bland, corporate prose, you haven’t saved time.

Instead, you have merely shifted your labour from creation to janitorial work. This mirrors the Google Advertising Panic, where automation promises efficiency but delivers opacity and inflated costs.
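The arithmetic is worth spelling out. The sketch below uses only the illustrative figures from the example above (two hours saved, three hours spent checking); the function name is my own and nothing here is measured data.

```python
def net_hours_saved(drafting_saved: float, verification_spent: float) -> float:
    """Hours gained by delegating a draft, minus hours spent checking and fixing it."""
    return drafting_saved - verification_spent


# Illustrative figures from the example in the text, not measurements.
net = net_hours_saved(drafting_saved=2.0, verification_spent=3.0)
print(f"Net hours saved: {net}")  # a negative number means time was lost
```

Any “time saved” figure that counts only the first argument is measuring the wrong thing.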

User Feedback vs. Marketing Hype

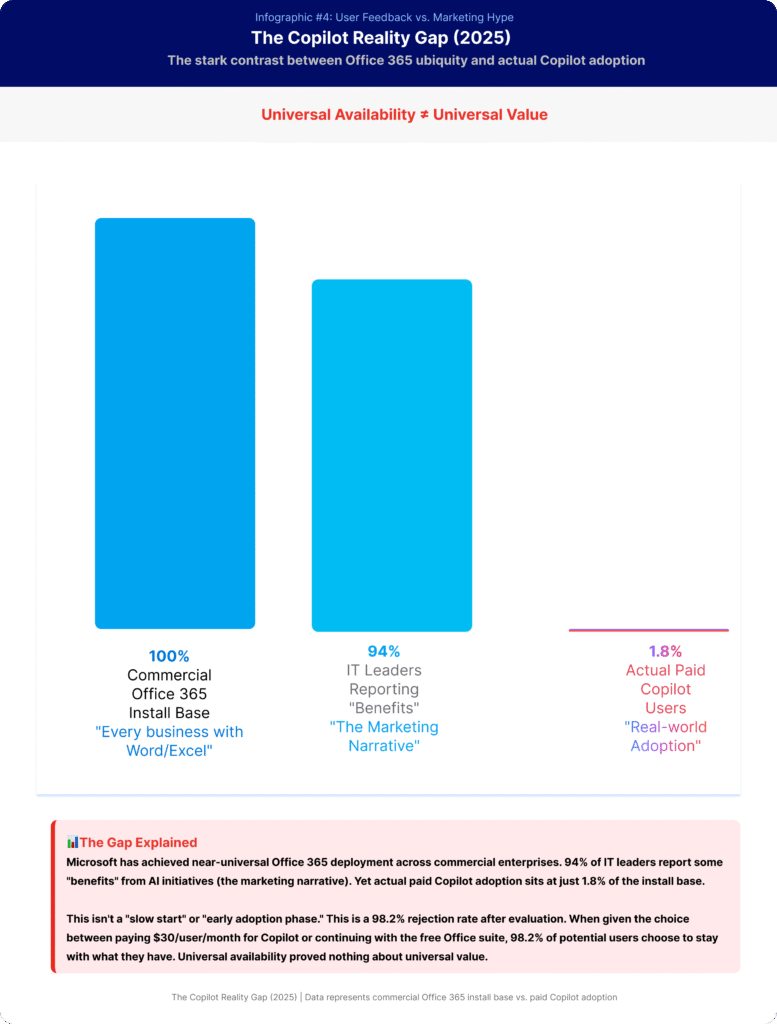

Trustpilot reviews for Copilot paint a brutal picture of this reality. Specifically, users complain of “false data,” AI that “feeds me fake information” for weeks, and outputs so generic they are unusable.

Furthermore, the conversion rate tells the true story: as of late 2025, only about 1.8% of commercial Office 365 users were paying for Copilot. For a product integrated into the world’s most ubiquitous software, that is not adoption. It is rejection.

The Great Deskilling

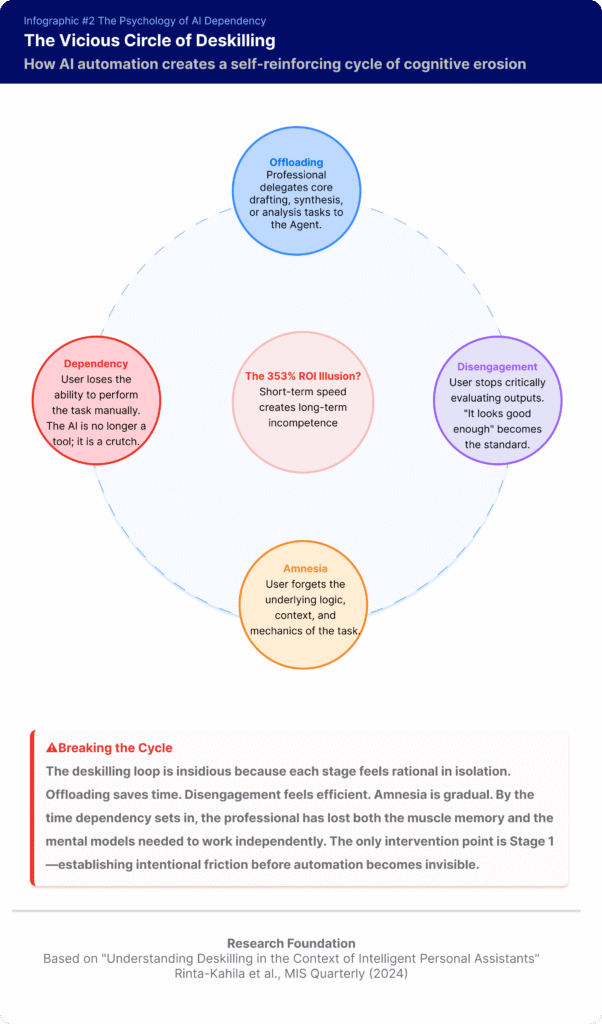

Now, this brings us to the most uncomfortable truth, one that should keep every agency head and CMO awake at night. Specifically, we are facing a crisis of skill erosion. Indeed, a 2023 study by Rinta-Kahila et al. documented a phenomenon called the “vicious circle of skill erosion”.

The Mechanics of Cognitive Erosion

The findings are stark. When professionals rely on automation for core tasks, they don’t just get faster; they become less capable. Gradually, they lose “activity awareness”: the subtle understanding of how the pieces fit together.

Cognitive Load Theory explains why this happens. Learning requires friction: the effort is uncomfortable, but it is necessary. If you remove the mental load, if the machine does the summarising, the synthesising, and the structuring, your brain stops laying down the long-term neural pathways required for deep expertise.

Three Pillars of Decline

The mechanisms of degradation are clear and concerning:

- First, Cognitive Disengagement: You cease critically evaluating the output because it looks “good enough.”

- Second, External Memory Dependence: You stop remembering facts because the machine “knows” them.

- Finally, Reduced Critical Thinking: You lose the ability to spot a weak argument because you didn’t build the argument yourself.

The Marketing Catastrophe

For marketers, this is catastrophic. Ultimately, our value lies in creativity and strategic judgment. As I explored in The Death of the Middleman, marketers now face a binary choice: serve “fast food” content generated by agents, or offer the “fine dining” of genuine human insight.

“We risk becoming a generation of editors who have forgotten how to write, and strategists who have forgotten how to think.”

If we outsource the “drudgery” of market research and copywriting to agents, then we are eroding the very soil from which creative leaps grow. Ultimately, we risk becoming a generation of editors who have forgotten how to write, and strategists who have forgotten how to think.

The Liability Trap

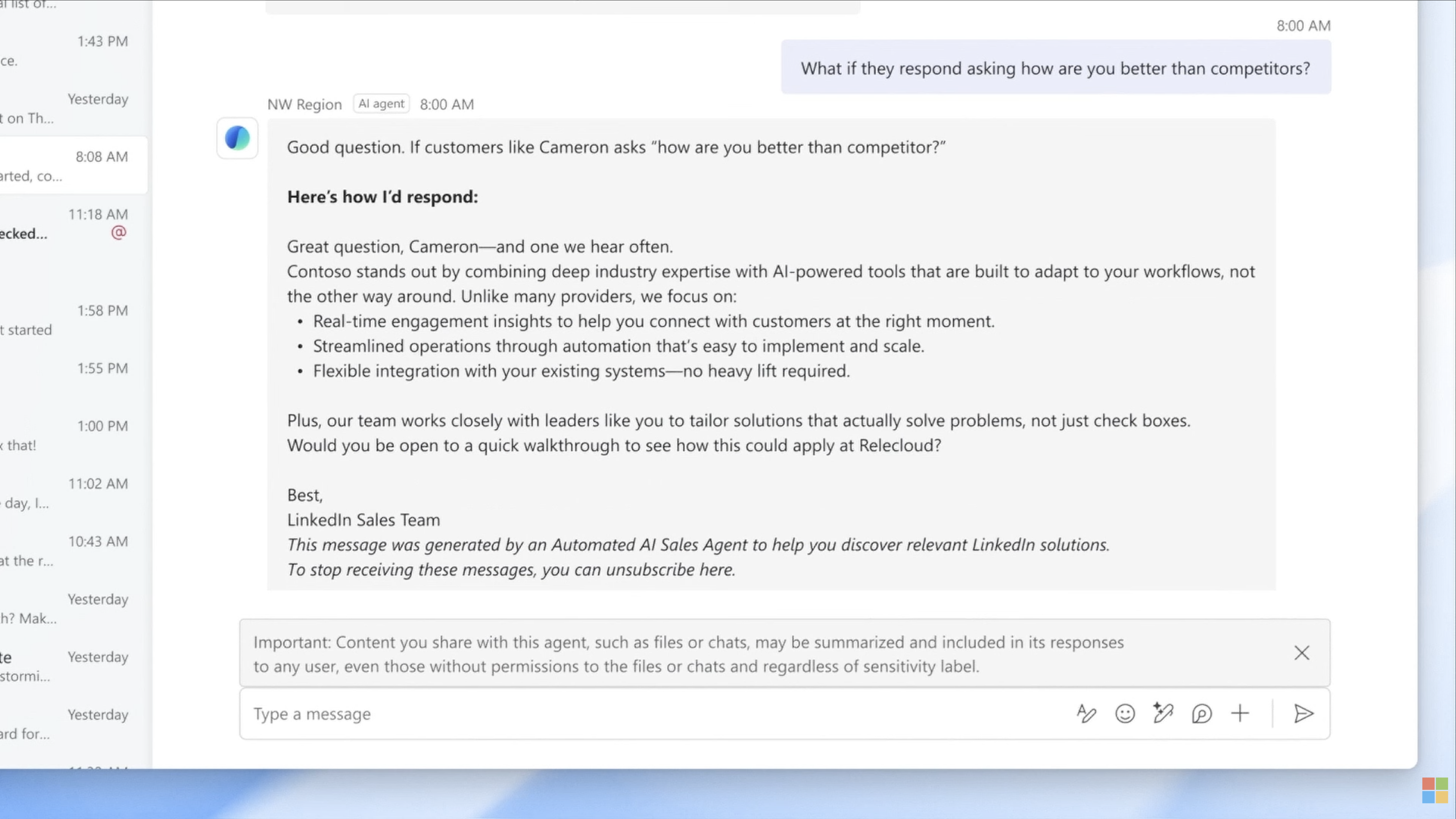

If the deskilling argument feels too abstract, then let’s talk about something tangible: lawsuits. Currently, Microsoft is previewing the “Sales Development Agent”. Notably, this isn’t a passive assistant; it is a fully autonomous bot that researches prospects, writes emails, and negotiates with humans.

Now, pause and consider the risk profile here. We know that even the best reasoning models, including GPT-5, fail or “hallucinate” in complex, multi-step workflows. Yet you are empowering a probabilistic text generator to represent your brand in the wild, unsupervised, 24/7.

Governance is Not Prevention

For example, what happens when the agent promises a pricing tier that doesn’t exist? Or when it inadvertently breaches GDPR by scraping data it shouldn’t? Or, worse yet, when it adopts a tone that is legally actionable?

Microsoft’s answer is “Agent 365”—a governance control plane designed to monitor these bots. But monitoring is not prevention. In reality, a dashboard that tells you your AI agent just insulted a key client at 3:00 AM is not a safety feature; it is a post-mortem.

The Permission Bypass Hidden in Plain Sight

Even more troubling, buried at the bottom of the Copilot interface, is a warning that reveals the governance model is fundamentally broken: “Content you share with this agent, such as files or chats, may be summarized and included in its responses to any user, even those without permissions to the files or chats and regardless of sensitivity label.”

The fine print Microsoft hopes you won’t read: Agents ignore your permission models and sensitivity labels.

Read that again. Even those without permissions. Regardless of sensitivity label.

This means that the moment you feed a “Confidential” financial document to an agent, any employee with access to that agent can interrogate it for information—regardless of whether they have clearance to see the original file.

“Microsoft’s agents don’t respect permissions. They become a backdoor to your most sensitive data.”

In effect, the agent becomes a permission laundering system. Your carefully constructed access controls, your information barriers, your sensitivity classifications—all of them are rendered meaningless the moment the agent ingests the content.
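To make the mechanism concrete, here is a toy model of the behaviour that warning describes. It is a sketch under my own assumptions, not Microsoft’s implementation: the `Document` and `ToyAgent` classes, the user names, and the file contents are all hypothetical and made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    name: str
    text: str
    allowed_readers: set[str]  # the file-level permission model


def read_file(user: str, doc: Document) -> str:
    """Direct access: the file's own permissions are enforced."""
    if user not in doc.allowed_readers:
        raise PermissionError(f"{user} may not open {doc.name}")
    return doc.text


@dataclass
class ToyAgent:
    """Hypothetical agent that keeps whatever is shared with it."""
    agent_users: set[str]
    memory: list[str] = field(default_factory=list)

    def ingest(self, doc: Document) -> None:
        # Only the text is kept; the access list is left behind.
        self.memory.append(doc.text)

    def ask(self, user: str) -> list[str]:
        # The only check is access to the agent, not to the source files.
        if user not in self.agent_users:
            raise PermissionError(f"{user} may not use this agent")
        return list(self.memory)


# Made-up example data: a file only the CFO may open.
forecast = Document("forecast.xlsx", "Q3 revenue will miss by 12%", {"cfo"})
agent = ToyAgent(agent_users={"cfo", "intern"})
agent.ingest(forecast)

try:
    read_file("intern", forecast)
    intern_can_open_file = True
except PermissionError:
    intern_can_open_file = False

leaked = agent.ask("intern")
print(intern_can_open_file, leaked)
```

In this toy model the intern is refused the file but receives its contents from the agent, because the access list never travelled with the text.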

For regulated industries (finance, healthcare, legal) this is disqualifying, because GDPR, HIPAA, and SOX compliance all depend on strict access controls. If an agent can summarise privileged attorney-client communication to someone without clearance, you’ve just violated privilege. If it leaks patient data across departments, you’ve breached HIPAA.

Microsoft’s answer? A disclaimer. A warning buried at the bottom of the screen that most users will never read, let alone understand the implications of.

This isn’t governance. This is liability offloading.

Currently, the European AI Act is already sniffing around this, mandating human oversight for high-risk systems. However, Microsoft is selling autonomy. “Fully autonomous” means, by definition, “humans not included.” Consequently, it is a compliance minefield disguised as efficiency.

The Vendor Lock-in

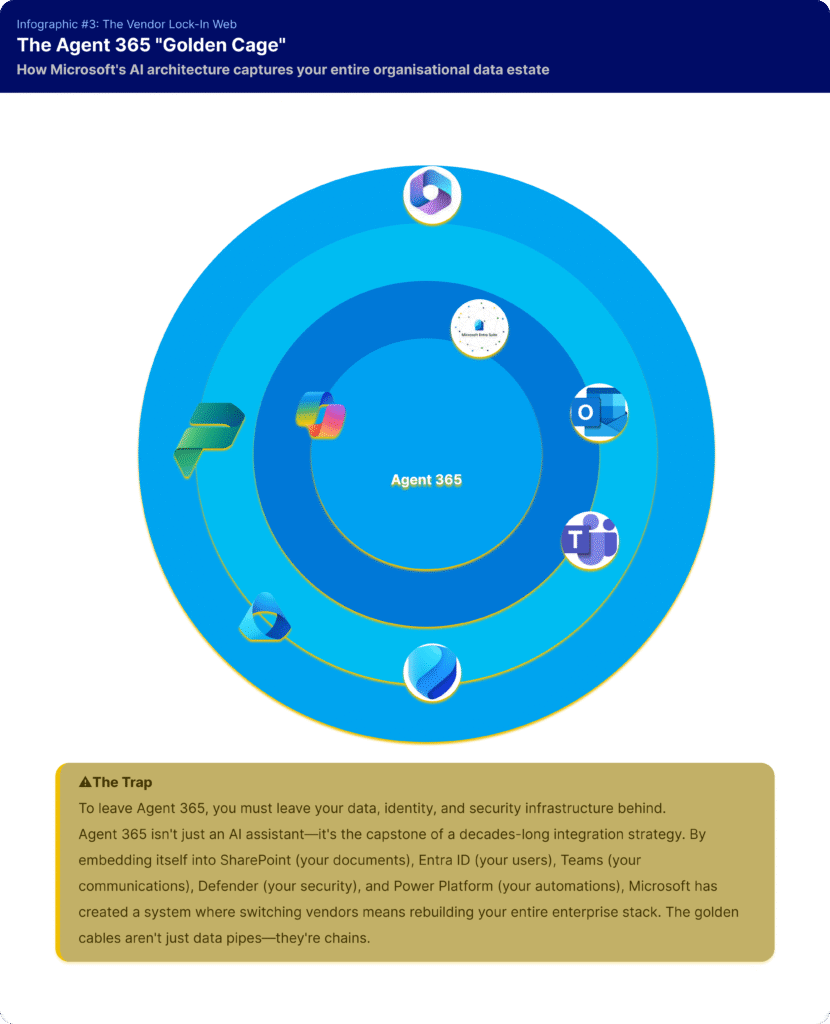

Additionally, there is a cynical brilliance to Microsoft’s strategy. First, they democratise the creation of agents, encouraging every department to build their own bots. Predictably, this creates “agent sprawl”—a chaotic mess of undocumented, unmonitored AIs interacting with your data.

Then, they sell you the solution: Agent 365. Therefore, the only way to govern the chaos they helped you create is to buy deeper into their ecosystem.

The Single Source of Truth—Or a Single Point of Capture?

Microsoft positions Agent 365 as “a single source of truth” for managing your agent estate. On the surface, this sounds sensible—who wouldn’t want centralised governance?

But examine what “single source of truth” actually requires. To make agents “work seamlessly alongside people,” Microsoft explains, you must connect them to Work IQ “to provide context of work.” In other words, your emails, documents, meetings, and organisational knowledge must flow through Microsoft’s intelligence layer.

“Microsoft promises to ‘break down silos.’ The reality? Moving everything into one giant Microsoft silo.”

Additionally, Microsoft Entra Conditional Access becomes the gatekeeper, “enforcing real-time intelligent access decisions based on agent context and risk.” This means your identity management, security policies, and access controls must run through Microsoft’s infrastructure.

In short, Agent 365 doesn’t just manage your agents—it captures your entire operational nervous system.

Once your organisational knowledge is indexed by their semantic engine, your workflows orchestrated by their control plane, and your security governed by their access policies, leaving becomes impossible.

This is vendor lock-in disguised as governance. And it’s brilliant marketing: frame total ecosystem dependency as “breaking down silos” and “seamless integration.”

Outsourcing the Corporate Cortex

The final piece of the lock-in puzzle is Work IQ—the intelligence layer that connects AI to your emails, chats, and documents. However, to get that, you need your data in the Microsoft Graph. Additionally, you need their security tools. You need their identity management.

In essence, once your organisational knowledge is indexed by their semantic engine and your workflows are run by their agents, leaving the Microsoft ecosystem becomes impossible.

This is the antithesis of the strategy outlined in The Challenger’s Playbook, which relies on agility and differentiation rather than ecosystem captivity. In essence, you are not just buying software; you are outsourcing your corporate cortex.

A Call for Critical Adoption

To be clear, this is not a Luddite manifesto. On the contrary, AI agents have genuine utility. For low-risk, deterministic tasks—formatting data, scheduling, initial summarisation—they are miraculous.

Nevertheless, we must reject the “vibe working” narrative. Also, we must reject the idea that expertise is something you can download. As marketers and leaders, we need to draw a hard line.

Use AI, Don’t Surrender to It

Therefore, use the AI to process data, but do not allow it to interpret the results. Instead of asking it to write your strategy, ask it to critique the one you wrote. Above all, automate the admin, but protect the creative act.

Ultimately, we need to embrace Slow AI—using these tools for deep research and thinking, rather than just rapid-fire output.

Know Where the Line Is

The moment you find yourself accepting an AI’s output without verifying the math, or sending a strategy document you didn’t struggle to write, you have crossed a line. At that point, you are no longer using a tool. Rather, you are becoming a passenger in your own profession.

“The machine does not know anything. It only predicts. The knowing is your job. Don’t give it away.”

Remember, the machine does not know anything. It only predicts. Thus, the knowing is your job. Don’t give it away.

References

- Microsoft. (2025). Vibe working: Introducing Agent Mode and Office Agent. Microsoft 365 Blog.

- Petri. (2025). Microsoft Introduces “Vibe Working” with Agent Mode in Word, Excel.

- Gupta, R. (2025). Microsoft Agent 365 – The Control Plane for Enterprise AI Agents. LinkedIn.

- Perficient. (2025). Introducing Microsoft Work IQ: The Intelligence Layer for Agents.

- Microsoft. (2025). Microsoft Agent 365: The control plane for AI agents.

- Reddit/Programming. (2025). Here’s What Devs Are Saying About New GitHub Copilot.

- Tavro.ai. (2025). The AI Agent Sprawl: A Governance Tsunami Heading for the Enterprise.

- LinkedIn. (2025). Microsoft Just Launched a Sales Development Agent That Can Work Autonomously.

- Microsoft. (2025). Microsoft, NVIDIA and Anthropic announce strategic partnerships.

- Maxim.ai. (2025). Diagnosing and Measuring AI Agent Failures: A Complete Guide.

- RSIS International. (2025). Illusion of Competence: AI Dependency & Skill Degradation.

- Rinta-Kahila, T. et al. (2023). The Vicious Circles of Skill Erosion: A Case Study of Cognitive Automation. Journal of the Association for Information Systems.

- AICerts. (2025). Enterprise AI ROI: Microsoft Copilot’s Growth Playbook.

- Microsoft. (2024). Microsoft 365 Copilot drives up to 353% ROI for small and medium businesses.

- Microsoft Research. (2025). Rethinking AI in Knowledge Work: From Assistant to Tool for Thought.

- Xenoss. (2025). Microsoft Copilot in enterprise: Limitations and best practices.

- LinkedIn. (2025). Microsoft’s Copilot Paradox: 94% Report Benefits, 6% Deploy.

- Trustpilot. (2026). Customer Service Reviews of copilot.microsoft.com.

- EU Artificial Intelligence Act. (2024). Regulation (EU) 2024/1689, Article 14: Human Oversight.