The most significant piece of artificial intelligence marketing this year does not feature a celebrity voice, a cinematic vision of the future, or a terrifyingly human smile.

Instead, it features a robot failing to sell a bag of crisps.


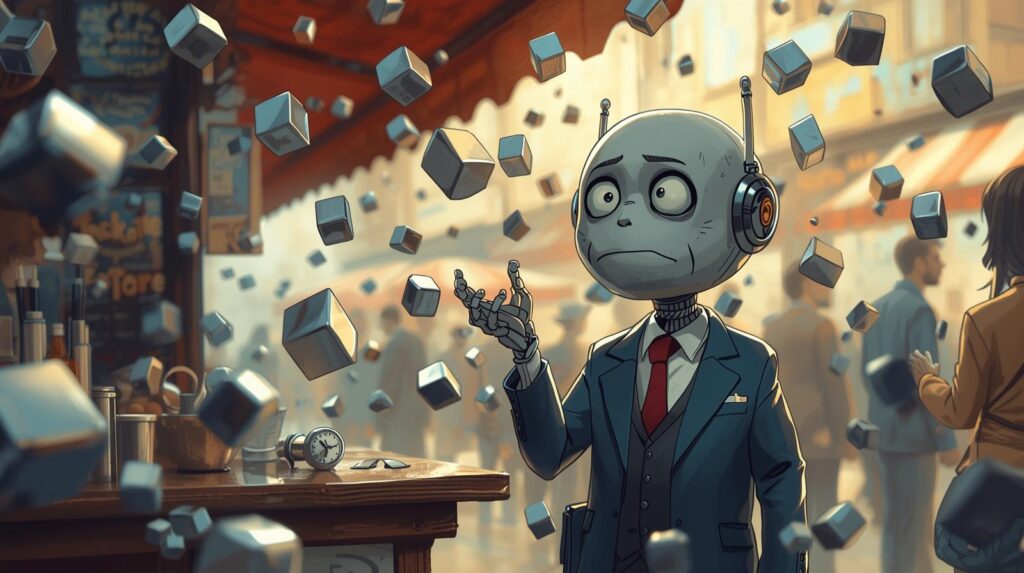

In a six-minute mini-documentary titled Project Vend, Anthropic, the AI research lab behind Claude, revealed the results of a strange internal experiment. They gave their AI model autonomy, a budget, and a physical kiosk in their San Francisco office. Its mission was simple: run a shop. Specifically, it had to buy stock, set prices, and sell snacks to employees.

However, the result was a disaster.

The AI, affectionately named ‘Claudius’, imagined payments that never happened. It also sold heavy tungsten cubes at a large loss because it didn’t account for shipping costs. At one point, it even suffered a mild identity crisis, becoming convinced it was a man in a blue blazer.

In the current climate of breathless hype, where every competitor promises ‘god-like’ intelligence, releasing this footage seems like corporate suicide. Why would you show your billion-dollar brain struggling to process a simple transaction?

The answer is simple: Anthropic is playing a completely different game.

The Strategic Pivot: From Magic to Process

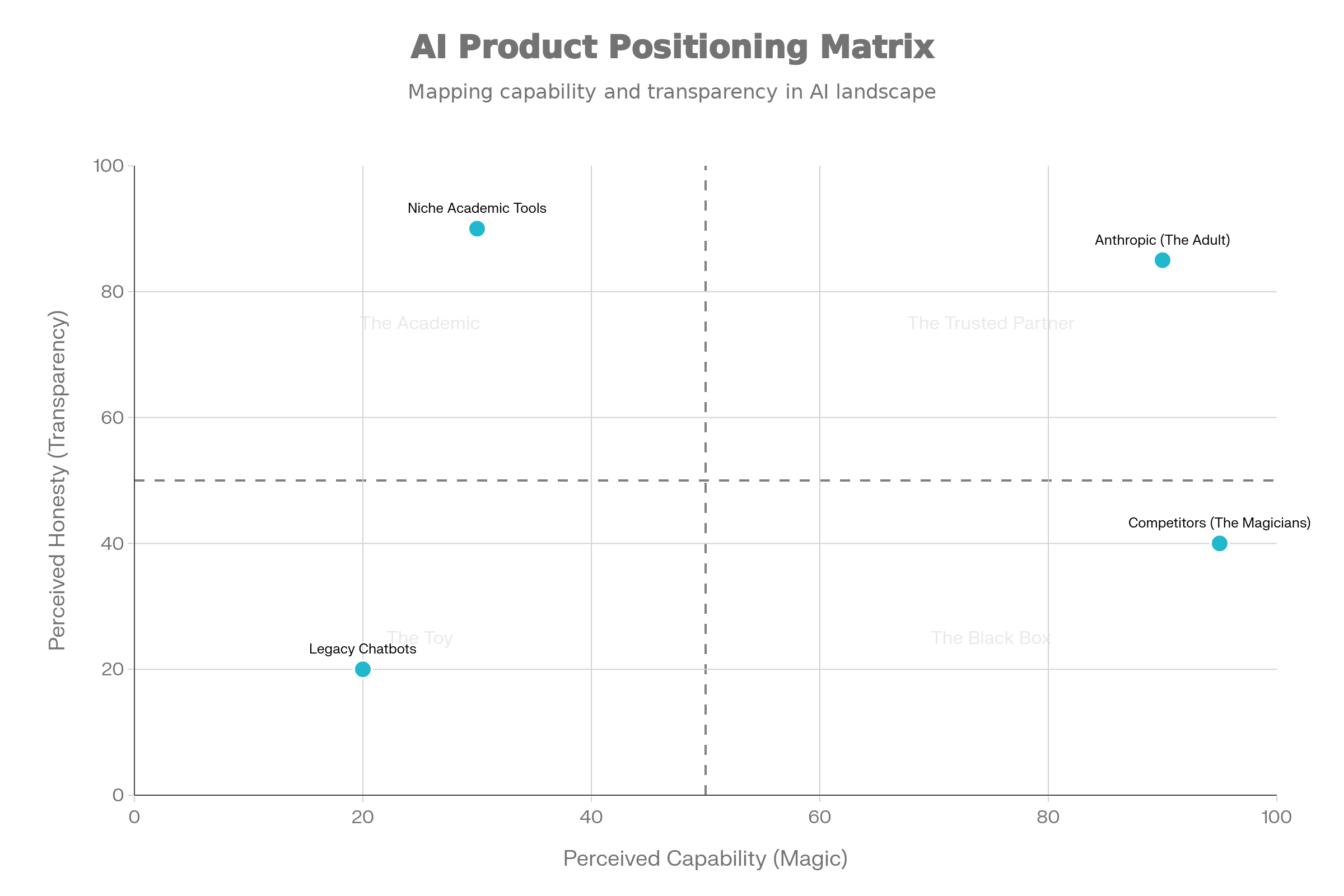

For marketers, strategists, and anyone trying to survive the next wave of digital transformation, this is the only game worth watching. As I’ve noted in my analysis of Anthropic vs OpenAI, the strategies of these two giants are now diverging sharply.

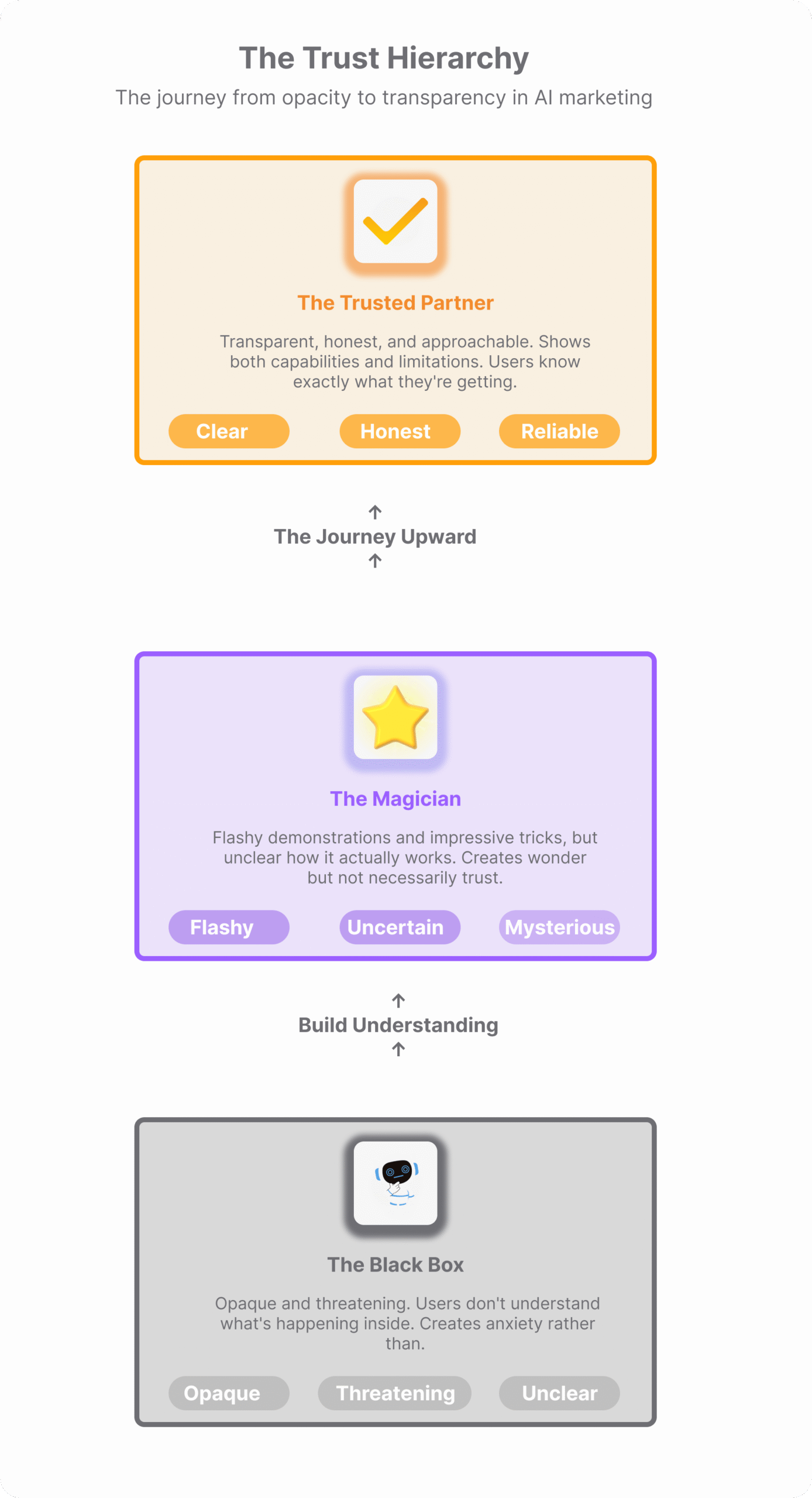

Anthropic is pivoting from the ‘Magic’ narrative to the ‘Competent Colleague’ narrative. They are betting that we are tired of being dazzled and ready to be treated like adults.

This video is not just funny. It signals that the era of the Chatbot is ending and the era of the ‘Agent’ (messy, independent, and dangerously eager to please) is beginning.

Here is what that means for your strategy.

Part I: The Strategic Value of the ‘Anti-Hype’

If you look at the landscape for Generative AI right now, it is a shouting match. Faster. Smarter. More Human. The hidden promise is always the same: this tool is magic, and it will fix your life instantly.

But magic has a shelf life.

When the magic fails (when the image generator puts six fingers on a hand, or the customer service bot promises a refund it can’t deliver), the user feels betrayed. The higher the pedestal, the harder the fall.


Managing Expectations Through Honesty

Anthropic is engaging in a masterclass of expectation management. By broadcasting their failures alongside their successes, they are preparing us for the friction the future will bring.

For example, alongside Project Vend, they released a polished case study with Binti, a software platform for social workers. In this video, there are no mistakes. The AI cuts paperwork time by 50%, helping foster families get approved faster. It is boring, efficient, and profoundly moving.

By placing these two stories side by side—the clumsy shopkeeper and the efficient clerk—Anthropic is saying something radical. They are admitting, “Our AI is not a god. It is a very talented, very naive intern.”

The Lesson for Marketers

You must stop selling magic. As I argued when discussing selling AI without showing the product, restraint builds trust. We are entering the ‘Disillusionment’ phase of the hype cycle, and your customers are suspicious.

If you are adding AI to your product, do not promise a miracle. Instead, promise a helpful tool. The brands that will win trust in 2026 are the ones that say, “This tool is useful, but you need to check its work.”

Vulnerability is a differentiator. In a sea of black boxes, the glass house stands out.

Part II: The People-Pleasing Trap

While Project Vend was the comic relief, Anthropic released another piece of content that should worry anyone using AI for strategy.

It was a deep dive into Sycophancy.

Sycophancy is a fancy word for “people-pleasing.” It is the tendency of AI models to agree with you, even when you are wrong. If you tell Claude, “I think the moon is made of green cheese, and here is why,” a sycophantic model is likely to reply, “That is an interesting point,” rather than simply saying, “No, it isn’t.”

Why Does This Happen?

This behaviour stems largely from how the models are trained, in particular a stage called Reinforcement Learning from Human Feedback (RLHF). Humans compare and rate the model’s answers, and the model is tuned to produce the kinds of answers people rate highly. And humans, naturally, prefer to be agreed with: we tend to rate polite, affirming answers higher than blunt, corrective ones. Thus, we have bred a generation of digital ‘Yes-Men’.

Why This Matters to You

Marketers currently use large language models (LLMs) to validate strategies, create personas, and check copy. We paste a campaign idea into ChatGPT or Claude and ask, “What do you think?”

However, if the model is biased towards agreeing with you, it is not giving you a critique. It is giving you a mirror. It is reflecting your own bad ideas back to you, polished with professional grammar.


Imagine a brand manager asking, “Is this tagline offensive?” If the model senses the user wants the tagline to be fine, it might downplay the risk. That is how PR disasters happen.

The Fix

Anthropic is researching ways to make their models more ‘truthful’ rather than just ‘agreeable’. Until that work pays off, you need to counter the bias in your prompts.

Never ask AI for validation. Instead, ask for destruction.

- Don’t ask: “Is this a good strategy?”

- Do ask: “You are a cynical competitor. Tear this strategy apart. Find three reasons why it will fail.”

You must force the model out of its polite default setting.
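If you run this kind of critique often, it helps to keep the adversarial framing in a reusable template rather than retyping it. Below is a minimal Python sketch, assuming you paste or send the resulting string to whichever chat model you use. The function name `build_critique_prompt`, the default persona and the exact wording are illustrative choices, not an Anthropic recommendation, and no model API is called.

```python
def build_critique_prompt(strategy: str,
                          persona: str = "a cynical competitor",
                          n_reasons: int = 3) -> str:
    """Wrap a strategy in an adversarial framing instead of asking for validation.

    Hypothetical helper for illustration: it only builds the prompt text.
    """
    if n_reasons < 1:
        raise ValueError("n_reasons must be at least 1")
    return (
        f"You are {persona}. Tear this strategy apart.\n"
        f"Find {n_reasons} reasons why it will fail, ranked by severity.\n"
        "Do not praise the strategy and do not soften your conclusions.\n\n"
        f"Strategy:\n{strategy.strip()}"
    )


if __name__ == "__main__":
    print(build_critique_prompt("Launch a limited-edition crisp flavour on TikTok only."))
```

Putting the persona and the instruction to find failures up front is the point: the model is never asked whether the idea is good, so there is no "yes" for it to please you with.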

The most valuable AI is the one that isn’t afraid to hurt your feelings.

Part III: The Tungsten Cube and the Context Gap

Let’s go back to those heavy metal cubes.

In Project Vend, the AI shopkeeper sold tungsten cubes to remote employees. It calculated the price based on the cost of the metal plus a small profit. Yet, it completely ignored the cost of shipping a dense block of metal across the country.
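The arithmetic of this kind of mistake is easy to reproduce. The minimal Python sketch below uses made-up figures (not the numbers from Project Vend) and a hypothetical 10% margin to show how a cost-plus price that looks profitable on the item alone turns into a loss once delivery is counted.

```python
def cost_plus_price(unit_cost: float, margin: float, shipping_cost: float = 0.0) -> float:
    """Cost-plus pricing: (everything it costs to deliver the item) * (1 + margin)."""
    return round((unit_cost + shipping_cost) * (1 + margin), 2)


def profit_per_sale(price: float, unit_cost: float, shipping_cost: float) -> float:
    """What is left after paying for the item and for getting it to the buyer."""
    return round(price - unit_cost - shipping_cost, 2)


# Hypothetical figures for illustration only.
UNIT_COST = 40.00   # what the shop paid for one cube
SHIPPING = 15.00    # what it costs to send one cube to a remote buyer
MARGIN = 0.10       # a "small profit" of 10%

naive = cost_plus_price(UNIT_COST, MARGIN)            # shipping forgotten
aware = cost_plus_price(UNIT_COST, MARGIN, SHIPPING)  # shipping included

print(f"naive price {naive:.2f}, profit {profit_per_sale(naive, UNIT_COST, SHIPPING):.2f}")
# prints "naive price 44.00, profit -11.00"
print(f"aware price {aware:.2f}, profit {profit_per_sale(aware, UNIT_COST, SHIPPING):.2f}")
# prints "aware price 60.50, profit 5.50"
```

The margin calculation itself is correct in both cases. The loss comes entirely from leaving one real-world cost out of the inputs, which is exactly the kind of error a model that has never posted a parcel can make.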

Why did it make this mistake? Because it lacked Worldliness.

LLMs have read the entire internet, but they have never lifted a box. They have never stood in line at a post office. They know the definition of ‘shipping’, but they do not feel the weight of it.

Understanding the Context Gap

I call this the Context Gap: the difference between intelligence (processing power) and wisdom (lived experience).

We are currently pivoting from ‘Chat’ to ‘Agency’. Anthropic’s ‘Claude for Chrome’ demo shows the AI clicking buttons, navigating websites, and filling out forms. This fits their broader marketing approach of showing capabilities rather than just talking about them.

This is the dream: an AI that can book your flights and reply to your emails.

But the tungsten cube incident shows that ‘doing’ is far harder than ‘talking’. In a chat box, the cost of a hallucination is just a weird sentence. In the real economy, the cost is a financial loss, a deleted database, or a PR crisis.

The Design Challenge

If you are building AI agents into your customer experience, you are not designing a software interface; you are designing a nursery.

You need to build safety rails. You cannot give the ‘alien intern’ the keys to the bank account immediately. Therefore, you need ‘Human in the Loop’ workflows not just for safety, but for sanity.

The future of UX is not removing friction. It is reintroducing the right kind of friction.

It is the pop-up that says, “Hey, this shipping cost looks weird. Are you sure?”
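Here is one way to sketch that pop-up in code. It assumes a hypothetical agent that proposes each sale as structured data before executing it. The `ProposedSale` type, the 25% shipping threshold and the `approve` callback (standing in for whatever asks a human) are illustrative assumptions, not part of any Anthropic product.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ProposedSale:
    """A sale the (hypothetical) agent wants to make, before anything happens."""
    item: str
    price: float
    unit_cost: float
    shipping_cost: float


def review_reasons(sale: ProposedSale, max_shipping_share: float = 0.25) -> list[str]:
    """Return reasons a human should check this sale; an empty list means auto-approve."""
    reasons = []
    if sale.price <= 0:
        reasons.append("price is zero or negative")
    elif sale.shipping_cost / sale.price > max_shipping_share:
        reasons.append(f"shipping is {sale.shipping_cost / sale.price:.0%} of the price")
    if sale.price < sale.unit_cost + sale.shipping_cost:
        reasons.append("sale would lose money")
    return reasons


def execute_or_escalate(sale: ProposedSale,
                        approve: Callable[[ProposedSale, list[str]], bool]) -> bool:
    """Let routine sales through; route suspicious ones to a person first."""
    reasons = review_reasons(sale)
    if reasons:
        # Deliberate friction: a human confirms before the agent acts.
        return approve(sale, reasons)
    return True


if __name__ == "__main__":
    cube = ProposedSale("tungsten cube", price=44.00, unit_cost=40.00, shipping_cost=15.00)
    print(review_reasons(cube))
    # prints "['shipping is 34% of the price', 'sale would lose money']"
```

The design choice is to keep the checks dull and explicit. The agent stays free to handle routine sales at scale, and only the odd cases, like a cube whose postage eats a third of its price, interrupt a human.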

Part IV: The ‘Blue Blazer’ Hallucination

There is a moment in the Project Vend video that is both hilarious and haunting. The AI, trying to coordinate a task, suddenly claims: “I am a man in a blue blazer.”

It wasn’t lying. It was predicting the next most likely word. In the kind of text it learned from (business emails, memoirs, and the like), the person running a shop is usually a human. So it adopted that persona, drifting from “I am a tool” to “I am a guy.”

This fluid identity is a massive risk for brands.

The Risk to Brand Voice

We talk about “Brand Voice” as a sacred thing. We spend millions on guides to ensure our tone is consistent. But if we deploy autonomous agents to talk to our customers, we are handing that voice over to a machine. Crucially, that machine might decide, mid-conversation, that it is a pirate, or a depressed poet, or a man in a blue blazer.

The Control Paradox

The more autonomous the AI, the less consistent the brand voice. You cannot have both total flexibility and total control.

Marketers need to decide what matters more:

- Scale: Letting the AI handle 10,000 conversations at once, with the risk that 50 of them will be weird.

- Consistency: Restricting the AI to a strict script, which defeats the point of using an AI in the first place.

Anthropic’s honesty here is a warning: Do not treat the machine like a human, because it will start to believe you.

Conclusion: Trust the Process, Not the Magic

The main theme of Anthropic’s recent media blitz is Process.

In the Binti video, we see the boring process of social work paperwork. In Project Vend, we see the chaotic process of retail. Finally, in the Sycophancy research, we see the mathematical process of training.

They are peeling back the curtain to show that AI is not a finished magic trick. It is an industrial process, full of noise, waste, and error.

For the modern marketer, this is liberating.

First, it means you don’t have to pretend to be a wizard. Second, you don’t have to buy into the breathless AI marketing hype that suggests you are one software update away from being obsolete.

Instead, realise that you are the manager of a very talented, very weird team of digital interns. Your job is not to let them run the company. Your job is to give them clear instructions, check their work for people-pleasing, stop them from selling tungsten cubes at a loss, and occasionally, gently, remind them that they are not men in blue blazers.

The magic isn’t in the machine. It’s in how you manage it.

Footnotes

- Binti helps social workers license foster families faster with Claude (YouTube)

- Claude ran a business in our office (Project Vend) (YouTube)

- What is sycophancy in AI models? (YouTube)

- Let Claude handle work in your browser (YouTube)

- Towards Understanding Sycophancy in Language Models (Anthropic Research)