Two years ago, Google got caught faking a demo. Not embellishing. Not selectively editing. Faking. The company’s December 2023 showcase for Gemini—showing a seemingly spontaneous multimodal interaction where the AI played rock-paper-scissors through gesture recognition—turned out to be a carefully choreographed sequence of text prompts fed into still images. TechCrunch called it what it was: Google’s best Gemini demo was faked.

When challenged, a DeepMind executive clarified that the video showed what the experience “could look like” and was designed “to inspire developers.” Translated from Silicon Valley speak: we showed you aspiration and called it capability.

This week, Google released five new promotional videos for Gemini 3 Flash, its latest model launch. They’re immaculately produced, and they showcase compelling use cases: golf swing analysis, small business automation, personalised tutoring. Yet for all the polish, they suggest Google has learned precisely nothing from that 2023 debacle.

For marketers navigating the AI gold rush of 2025, these videos aren’t just a case study in what not to do. Rather, they’re a diagnostic for a deeper pathology afflicting tech marketing right now: the growing, dangerous chasm between what we demonstrate and what we deliver.

Why This Matters Now

We’re at an inflection point. The consumer AI market has already been won. OpenAI has 800 million weekly users who’ve integrated ChatGPT into their actual workflows, warts and all. That’s more than Netflix, Spotify, and Disney+ combined. ChatGPT has become part of the infrastructure of how people work, study, create, and solve problems. It’s about to become a verb, just as Google did over the past 20 years.

Meanwhile, 75% of agentic AI tasks fail in production. User satisfaction data shows Gemini scoring 50% one-star ratings with zero five-star ratings.<sup>5</sup> The gap between demo theatre and operational reality has become so pronounced that developers have created frameworks<sup>6</sup> specifically to tell credible AI products from vaporware.

This isn’t 2023 anymore. The audience that once gasped at GPT-3 completing sentences now rolls its eyes at hallucinated citations. The consumers who once found any AI capability impressive now have two years of daily usage informing their expectations. They know what works. They know what fails. And they’ve developed habits around ChatGPT. The hype cycle hasn’t just matured; it’s curdled.

And yet here’s Google, serving up the same flavour of aspirational demonstration that audiences have learned to mistrust. That’s not just bad marketing. It’s strategically incoherent when you’re trying to displace an incumbent that already owns habitual usage.

Much like the Swiggy Wiggy 3.0 campaign that succeeded by centring real people over polished corporate messaging, effective tech marketing in 2025 requires authentic demonstration over aspirational theatre.

What the Videos Actually Show

The four use-case videos run between 27 seconds and just over a minute. Structurally, they’re identical: someone with a problem, Gemini’s intervention, instant transformation.

The Scenarios

In one, a golfer uploads swing footage and receives biomechanical analysis. In another, a small business owner transforms a spreadsheet of customer complaints into a drafted email and a coded, branded website. In a third, a student records themselves explaining quantum mechanics; Gemini identifies knowledge gaps and generates a bespoke quiz.

The scenarios are plausible enough to avoid immediate incredulity but specific enough to raise questions. The production values are corporate-glossy: professional lighting, seamless motion graphics, soundtracks that suggest effortless competence. What they all share is an absence: no visible friction, no iteration, no failure modes, no acknowledgement that these are aspirational demonstrations rather than typical user experiences.

More importantly, they share another absence: no explanation of why you’d switch from ChatGPT to do any of these things.

The Authenticity Problem

This is what researchers call the “UGC Illusion”: studio-made authenticity designed to feel spontaneous whilst maintaining total story control. Typically, brands cast “relatable” people, write scripts that sound conversational, and introduce deliberate imperfections like slightly wonky camera angles. The strategy works until audiences notice the artifice, at which point it breeds precisely the distrust it sought to dispel.

However, Google’s videos don’t even attempt this level of manufactured authenticity. They’re unapologetically polished corporate communications. Ordinarily this would be fine, since product launches warrant professional production, but AI in 2025 faces a unique credibility problem. Research shows that human voice-overs reduce cognitive load and increase purchase intentions compared to AI-generated voices, precisely because authenticity has become scarce and therefore valuable. As AI-generated content saturates the web, audiences increasingly hunger for markers of genuine human involvement.

These videos contain no such markers. No real users. No testimonials. No acknowledgement of limitations. They present AI as frictionless, infallible, instantaneous: precisely the claims that two years of operational reality have rendered suspect. This is reminiscent of the issues I explored in The Pixel-Perfect Lie: Maybelline’s Mumbai Mirage, where CGI perfection ultimately undermined brand credibility.

The Consumer Positioning Problem

Here’s what makes Google’s approach particularly baffling: these videos are clearly targeting individual consumers—golfers perfecting their swing, students mastering subjects, small business owners automating tasks. Crucially, they’re not targeting enterprises. Not developers. Regular people.

The Incumbent Advantage Nobody’s Addressing

However, OpenAI already owns this space. With 800 million weekly users, ChatGPT has become the default AI assistant for consumers. Indeed, it’s embedded in workflows, bookmarked in browsers, referenced in casual conversation. People say “I’ll ask ChatGPT” the way they once said “I’ll Google it.”

Moreover, those 800 million users have spent two years discovering what AI can and cannot do. Along the way, they’ve encountered hallucinations, experienced failures, learned to prompt carefully, and developed sophisticated expectations. Importantly, they’ve built habits. In practice, ChatGPT is already their study partner, their coding assistant, their writing coach, their research tool.

Yet Google’s videos don’t answer the consumer’s actual question: “Why should I switch from ChatGPT?”

What Consumers Actually Need to Know

When targeting individuals who already use AI regularly, the questions aren’t about capability demonstrations. Rather, they’re far more practical:

- Is it faster than ChatGPT? Show me timestamps, not claims. Put them side-by-side answering the same question and let me see the difference.

- Is it more accurate? Show me comparison tests. What does ChatGPT get wrong that Gemini gets right? Be specific.

- Does it work better on mobile? With 70% of consumer AI usage happening on phones, this matters. Yet all of Google’s videos show desktop interfaces. Show me the actual mobile app experience.

- What does it cost? Be transparent about free tier limits. Consumers are savvy about freemium models now. They know the catches exist.

- Can it do something ChatGPT genuinely can’t? Not “also does” but “uniquely does.” What’s the moat? What’s the killer feature that justifies switching costs?

- Will my data be private? ChatGPT has had two years to build (or erode) trust on this front. Where does Google stand?

- Can I migrate my conversation history? Switching costs are real. Address them.

Unfortunately, Google’s videos answer precisely none of these questions. Instead, they showcase aspirational scenarios—a golfer perfecting their swing, a student mastering quantum mechanics—without demonstrating why Gemini does this better, faster, or more reliably than what people are already using.
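For the “timestamps, not claims” point, a side-by-side test does not need much machinery. The sketch below is one possible shape for it, not a benchmark of any real product: `ask_assistant_a` and `ask_assistant_b` are hypothetical stand-ins that simply sleep, and you would swap in real client calls to compare actual assistants.

```python
import time
from typing import Callable, Dict, Tuple


def time_call(ask: Callable[[str], str], prompt: str) -> Tuple[str, float]:
    """Run one assistant call and return (answer, elapsed seconds)."""
    start = time.perf_counter()
    answer = ask(prompt)
    return answer, time.perf_counter() - start


def side_by_side(assistants: Dict[str, Callable[[str], str]], prompt: str) -> Dict[str, float]:
    """Send the same prompt to every assistant and record wall-clock latency."""
    return {name: time_call(ask, prompt)[1] for name, ask in assistants.items()}


# Hypothetical stand-ins (assumptions): replace with real client calls.
def ask_assistant_a(prompt: str) -> str:
    time.sleep(0.02)  # simulated response time, not a measurement
    return f"A: {prompt}"


def ask_assistant_b(prompt: str) -> str:
    time.sleep(0.01)  # simulated response time, not a measurement
    return f"B: {prompt}"


results = side_by_side(
    {"assistant_a": ask_assistant_a, "assistant_b": ask_assistant_b},
    "Quiz me on quantum mechanics.",
)
for name, seconds in sorted(results.items(), key=lambda item: item[1]):
    print(f"{name}: {seconds:.3f}s")
```

A single run proves little; repeating the same prompt several times and publishing the spread would be closer to the evidence a sceptical consumer is asking for.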

The Demo-Reality Chasm

Consider the small business video. A woman with customer feedback in a spreadsheet uploads it to Gemini. Within seconds: data analysis, drafted launch email, generated website code complete with branding. On the surface, the transformation is seamless. The interface is frictionless. The output is perfect.

The Questions Nobody’s Asking

Now consider what the video doesn’t show. First, how much pre-prompting occurred before this “instant” result? Second, how many iterations were needed? Third, does the generated code actually function across browsers and devices? Fourth, what happens when the data contains ambiguities, typos, or contradictory customer requests? Moreover, how many tokens did this operation consume—and at $0.50 per million input tokens and $3 per million output tokens, what does this workflow actually cost at scale?
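To make the cost question concrete, here is a minimal sketch of the arithmetic at the per-token prices quoted above. The token counts and monthly volume are illustrative assumptions, not measurements of the workflow in the video.

```python
# Prices quoted in the article (USD per million tokens).
INPUT_PRICE_PER_MILLION = 0.50
OUTPUT_PRICE_PER_MILLION = 3.00


def workflow_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one run at the quoted per-token prices."""
    return (
        input_tokens / 1_000_000 * INPUT_PRICE_PER_MILLION
        + output_tokens / 1_000_000 * OUTPUT_PRICE_PER_MILLION
    )


# Hypothetical token counts for one spreadsheet-to-website run
# (assumptions, not measurements): 20,000 tokens in, 8,000 tokens out.
per_run = workflow_cost(20_000, 8_000)

# Hypothetical volume: 1,000 runs a month.
per_month = per_run * 1_000

print(f"per run: ${per_run:.4f}")
print(f"per 1,000 runs: ${per_month:.2f}")
```

Under these assumed numbers a single run costs about 3.4 cents. The figure that matters is the multiplier: every extra iteration or retry the video leaves out is another run on the bill.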

Here’s the more important question: Can ChatGPT already do this? Because if it can—and for most of these use cases, it can—then the video isn’t demonstrating capability. Instead, it’s demonstrating parity. And parity doesn’t overcome switching costs.

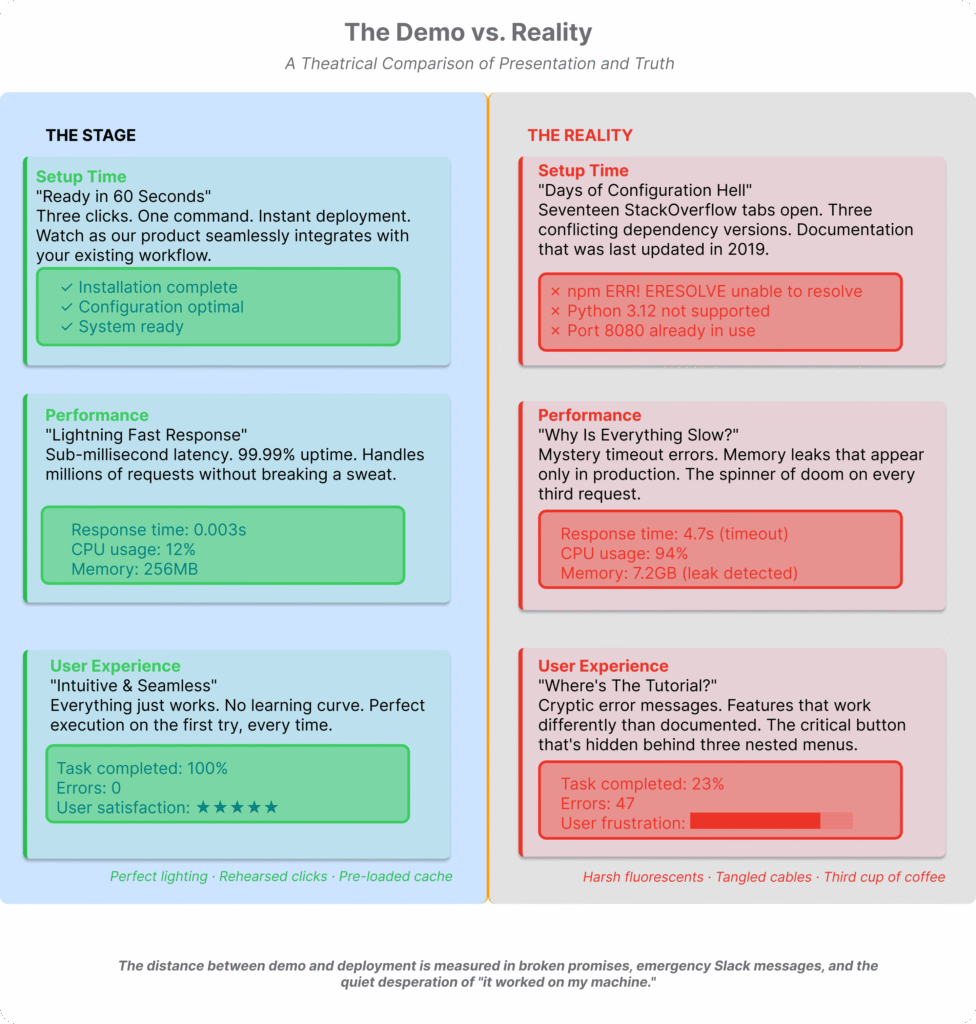

These aren’t pedantic technical objections. They’re the questions that separate marketing theatre from operational reality. The AI industry has developed a predictable rhythm: spectacular demonstration, followed by underwhelming deployment, followed by quiet acknowledgement of limitations.

The Eight-Question Framework

As one developer put it on LinkedIn: “AI based products have very wide gaps between demo and reality. It’s very easy to spin up a quick, impressive demo but really hard to have an operational product that handles real-world cases robustly.”

The same developer proposed eight questions for telling credible AI products from vaporware:

- Can it solve a real problem end-to-end? (Not staged sandbox flows)

- Does it execute actions or just generate outputs?

- How does it handle complexity and exceptions?

- Where is proof of performance? (Hard numbers, not vague claims)

- What is the user experience like? (Friction, robotic responses)

- How fast does it improve?

- How accurate is it really?

- What happens when it fails?

Notably, Google’s videos answer precisely none of these questions. Instead, they showcase the happy path—the scenario where everything works perfectly, where the user’s needs align exactly with the model’s capabilities, where there are no edge cases or ambiguities or errors. In other words, they showcase fiction.
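The eight questions can be treated as a simple checklist. The sketch below is one hypothetical way to record them, with the question wording taken from the list above and the `unanswered` helper invented purely for illustration. The all-`False` entry reflects this article’s reading of the videos, not an independent audit.

```python
from typing import Dict, List

# The eight questions, as quoted in the list above.
EIGHT_QUESTIONS: List[str] = [
    "Can it solve a real problem end-to-end?",
    "Does it execute actions or just generate outputs?",
    "How does it handle complexity and exceptions?",
    "Where is proof of performance?",
    "What is the user experience like?",
    "How fast does it improve?",
    "How accurate is it really?",
    "What happens when it fails?",
]


def unanswered(evidence: Dict[str, bool]) -> List[str]:
    """Return the questions a demo leaves unaddressed (missing counts as unaddressed)."""
    return [q for q in EIGHT_QUESTIONS if not evidence.get(q, False)]


# This article's reading of the promotional videos: none of the eight is addressed.
videos = {question: False for question in EIGHT_QUESTIONS}
gaps = unanswered(videos)

print(f"{len(gaps)} of {len(EIGHT_QUESTIONS)} questions unanswered")
for question in gaps:
    print(f"- {question}")
```

The value of writing it down this way is that a demo has to earn each `True` with something visible on screen: a number, an error case, a real user.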

They also ignore a ninth question, the one that matters most for consumer adoption in 2025: Why is this better than what I’m already using?

The Cost of Omission

User reports from Reddit and Hacker News paint a more complicated picture than Google’s videos suggest. Gemini “performs poorly in coding, only handling basic tasks.” It gives “incorrect answers to basic maths questions.” It experiences “slow response times” on higher thinking levels. And it generates inconsistent code.

Real-World Testing

One particularly telling experiment on Hacker News illustrates this: a user asked various models a niche but answerable question about sports history. Repeatedly, Gemini hallucinated entire matches, reported draws as wins, and contradicted its own generated facts within the same response. “Fancy Markov chains on steroids,” the user concluded.

Independent testing reveals that whilst Gemini 3 Flash achieves impressive scores on academic tests like GPQA Diamond (90.4%), it also demonstrates high hallucination rates and falls outside what researchers call the “most desirable quadrant” when plotting accuracy against knowledge coverage.

What’s Missing

None of this appears in Google’s promotional videos. There are no disclaimers about accuracy rates. No acknowledgement of error modes. No discussion of the iterative refinement that real-world use cases require. Additionally, there’s no mention that the seamless workflows depicted might require multiple attempts, careful prompt work, and human oversight to achieve.

This isn’t just incomplete marketing. It’s strategically self-defeating, because the audience these videos target (consumers who already use ChatGPT daily) is precisely the group that knows what’s been left out. They’ve spent two years discovering that AI routinely makes mistakes, requires iteration, and needs oversight. They’ve built sophisticated expectations about what’s realistic.

Showing them frictionless perfection doesn’t inspire confidence. It signals that Google doesn’t understand actual usage patterns, which makes them question whether the product was built for real users at all.

The “Good Enough” Problem

Here’s the reality: ChatGPT is already good enough for most consumer use cases. For instance, a student can already ask it to quiz them on quantum mechanics. Similarly, a business owner can already use it to analyse data and draft emails. Likewise, a golfer can already upload swing videos to various AI tools for analysis.

When Better Isn’t Enough

This is the strategic challenge Google’s videos completely ignore: incremental improvement doesn’t overcome entrenched habits. Behavioural economics has decades of research showing that switching costs are real and sticky. People don’t migrate from working solutions unless the alternative is demonstrably, dramatically superior, or addresses a pain point the incumbent doesn’t.

However, Google’s videos showcase neither. Instead, they show capabilities ChatGPT already offers, performed with similar (claimed) ease, without acknowledging that switching requires consumers to:

- Learn a new interface

- Rebuild their prompt libraries

- Lose their conversation history

- Change their bookmarks and workflows

- Trust a company that faked a demo two years ago

- Accept uncertainty about whether this new thing actually works better

That’s a significant cognitive and practical burden. The videos needed to justify it by showing clear, measurable superiority. They didn’t. Instead, they showed aspirational scenarios that could just as easily be ChatGPT demos with different branding.

The Amazon Alexa Comparison

Remember when Amazon released Alexa ads showing families seamlessly ordering groceries, controlling lights, and getting weather updates? Those ads worked because they showed something consumers couldn’t already do easily. In other words, voice-controlled home automation was novel and genuinely useful.

In contrast, Google’s Gemini videos show tasks consumers can already accomplish—and many have been doing so daily for two years via ChatGPT. So where’s the novelty? Where’s the step-change improvement? Where’s the feature that makes someone think, “I need to try this”?

Ultimately, the videos never answer this, which suggests Google itself doesn’t have a clear answer. That’s not a marketing problem. It’s a product positioning crisis.

What Comes Next?

Google’s Gemini videos fail to persuade consumers to switch because they don’t address the fundamental question: Why abandon an AI tool that already works? The answer requires an entirely different marketing playbook—one that acknowledges switching costs, demonstrates clear differentiation, and treats consumers as sophisticated evaluators rather than blank slates.

In the next piece, we’ll explore how successful challenger brands actually displace incumbents, and what Google should have done instead. Because the playbook exists. Google simply didn’t follow it.

References

1. Google’s best Gemini demo was faked

2. Gemini 3 Flash: frontier intelligence built for speed

3. OpenAI vs. Anthropic: Contrasting AI Business Models and Market Strategies

4. Why 75% of Agentic AI Tasks Fail in 2025 (And How to Fix It)

5. ChatGPT vs Claude vs Gemini (2025): A Comprehensive Comparison

6. AI product demos vs reality: the gap between

7. The UGC Illusion: How Brands Create ‘Authentic’ Videos in Professional Studios

8. The effectiveness of human vs. AI voice-over in short video advertising