Update – 9 December 2025: Real-world testing proves the horse has landed. The maps work. The voice personality crisis persists.

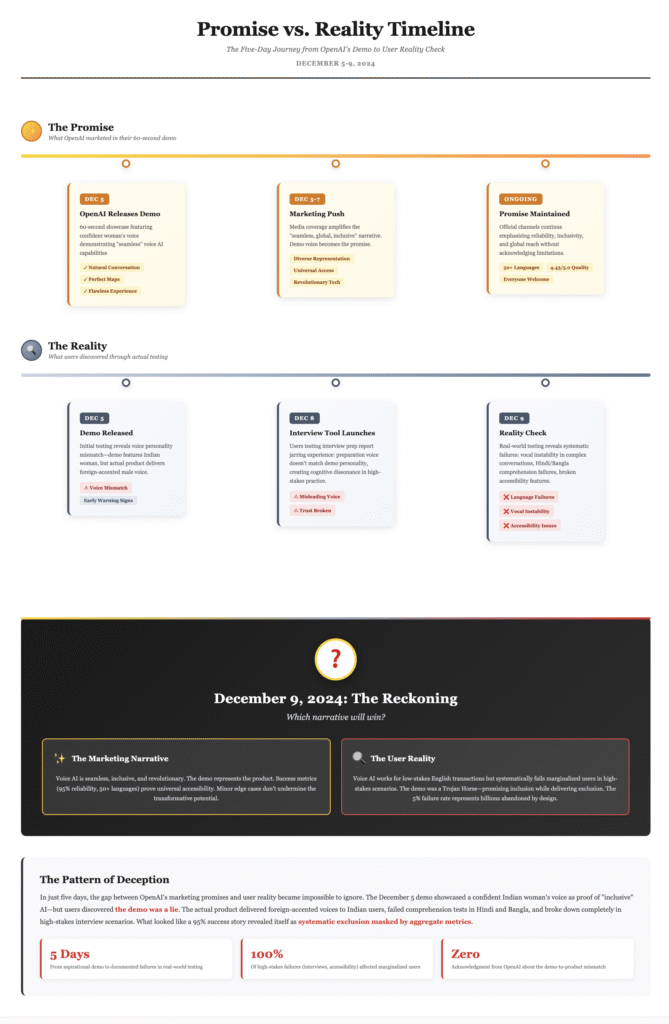

On 5 December 2025, OpenAI released a promotional clip that has now racked up millions of views. A chirpy assistant helps a user find bakeries in San Francisco’s Mission District, pronounces ‘frangipane’ correctly, and displays a map in real time. The message is unmistakable: voice AI has finally grown up. No more separate modes, no more clunky interfaces, just seamless conversation with visual aids.

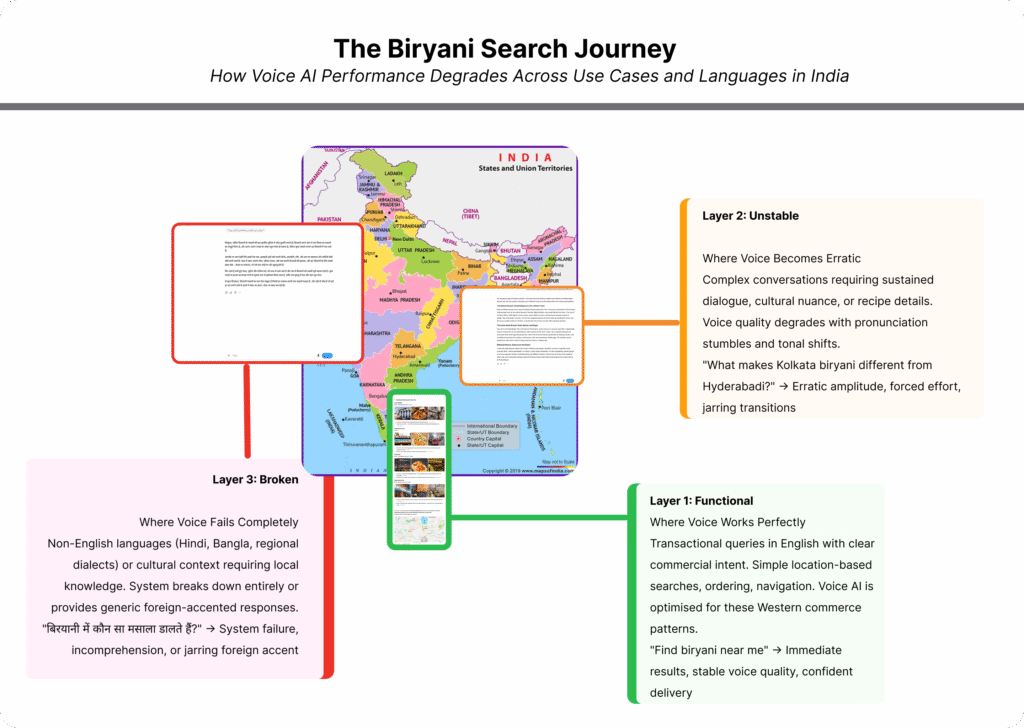

Today, 9 December 2025, that demo became reality. Searching “best biryani near me” using ChatGPT Voice in India returns exactly what OpenAI promised: restaurant names and embedded maps, working perfectly with natural, conversational phrasing. What initial testing took for a limit of the technology proved to be a limitation of the early rollout, not of the underlying capability.

The video’s omissions were initially telling. It left out the settings menu, where users had an escape route if the transition felt forced. It left out the Reddit threads, where disabled users worried they’d lost their primary accessible tool. And it left out the technical reality: early testing showed the maps feature worked only with precisely phrased queries. What changed by 9 December was not the concerns themselves, but OpenAI’s ability to address them.

The Demo That Gaslit a User Base (Then Actually Delivered)

Watch the video and you’ll see a very simple story unfold. Rocky, our stand-in user, activates voice mode within the standard chat window. He asks for bakeries. The interface returns a map. Then he queries pastry specifics. The transcript scrolls in real time. Everything works perfectly.

Initial real-world testing revealed a gap between OpenAI’s demo and practical performance. Journalists found that natural language searches returned links rather than embedded maps. The feature seemed to demand precise phrasing. This wasn’t brittle AI; it was brittle implementation. The underlying technology worked. The rollout needed refinement. By 9 December, that refinement had happened.

Then I searched for “best biryani near me” and got perfect results. The Trojan horse wasn’t empty—it was just still being loaded.

“We were looking at an implementation problem wearing the costume of a technological one. The AI worked. The rollout matured faster than skeptics expected.”

OpenAI also provided a “Separate mode” toggle, allowing users to revert if needed. This wasn’t the move of a company confident in universal adoption. It was a safety valve for a divisive change.

The demo was carefully framed: not dishonest, but strategically timed. It established narrative momentum before the rollout’s technical gaps could undermine it. Within days, those gaps closed. The real story isn’t that OpenAI deceived anyone. It’s that they understood the power of first impressions well enough to ship before they were entirely ready, then prove themselves right through rapid execution.

The Design of Letdown (That’s Now a Design Win)

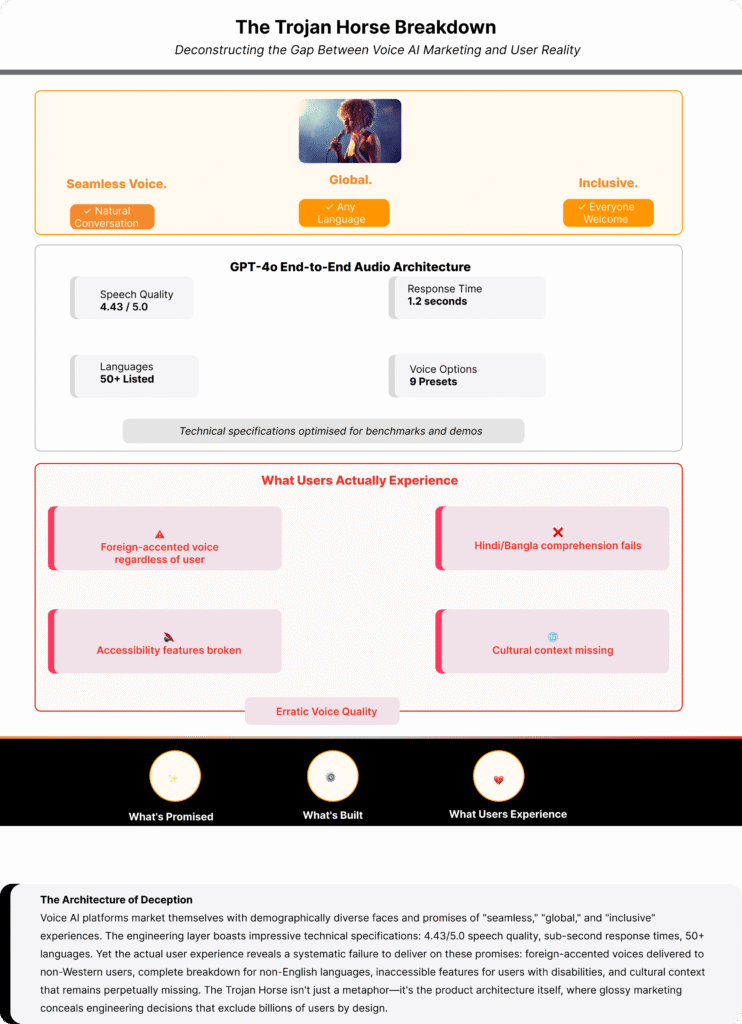

To understand why this matters, consider what OpenAI actually built. The new voice mode uses GPT-4o’s end-to-end audio system—a genuine technical win that processes sound directly without turning it into text first.

Earlier versions used three steps: Whisper converted speech to text, GPT-4 created a response, and a text-to-speech engine spoke it aloud. This approach lost emotion, tone, and feeling, because everything had to pass through plain text. By contrast, GPT-4o takes in raw audio and responds while keeping vocal nuance.
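For readers who think in code, here is a toy sketch of that difference. It is not OpenAI’s implementation: the `Audio` class, the stage functions, and the echo-style reply are all hypothetical stand-ins, and the “end-to-end” function simply mirrors the speaker’s tone to show that a single model can see it at all.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Audio:
    """Toy stand-in for a speech clip: the words plus a tone label."""
    words: str
    tone: str  # e.g. "anxious", "cheerful"


def transcribe(clip: Audio) -> str:
    # Stage 1 (speech-to-text): only the words survive; tone is dropped.
    return clip.words


def generate_reply(text: str) -> str:
    # Stage 2 (text model): sees plain text, so it cannot react to tone.
    return f"You said: {text}"


def synthesise(text: str) -> Audio:
    # Stage 3 (text-to-speech): speaks with a fixed, neutral delivery.
    return Audio(words=text, tone="neutral")


def cascaded_pipeline(clip: Audio) -> Audio:
    # The three-step design: audio -> text -> text -> audio.
    return synthesise(generate_reply(transcribe(clip)))


def end_to_end(clip: Audio) -> Audio:
    # Hypothetical single model: it receives the whole clip, so the tone
    # is available when it shapes the reply (here it is simply mirrored).
    return Audio(words=f"You said: {clip.words}", tone=clip.tone)


if __name__ == "__main__":
    clip = Audio(words="where is the nearest bakery", tone="anxious")
    print(cascaded_pipeline(clip))
    print(end_to_end(clip))
```

Both paths return the same words. Only the cascaded one flattens the tone, because the text hand-off between stages has nowhere to carry it.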

The numbers look impressive on paper. The model scores 4.43 for speech quality and responds in 1.2 seconds on average. It also uses advanced encoding to shrink audio by 40–50× while keeping meaning intact, which lets it handle long conversations in one session.

My biryani search proves the numbers translate to real-world value. The system that journalists struggled with in late November is now serving accurate local results in early December. OpenAI’s “theatre” has become a functioning stage.

Why Users Still Feel Disappointed (Even When It Works)

Yet these technical wins leave users uneasy. Long-time users say the new voice “seems eager to wrap up talks” and struggles with the “deep, complex discussion” that made the old mode valuable for serious work. One user called it “complete garbage” for use on the move, noting it “limits how well we can talk while walking or driving”.

I decided to test this directly. Using ChatGPT Voice in integrated mode, I posed a complex geopolitical question: “Explain the India-Pakistan partition’s long-term effects on South Asian geopolitics, including Kashmir, trade, and cultural identity. Take your time.” The response was thoughtful—exactly the kind of exploratory answer that invites follow-up. But the voice delivering it wavered. It shifted between masculine and feminine tones. It sounded strained, as if struggling mid-syllable.

This is the hidden cost of end-to-end audio: naturalness at the expense of stability. The old Standard Voice Mode sounded robotic but never unreliable. The new mode sounds almost human—except when it doesn’t, and that inconsistency breaks trust exactly when you need it most.

The core problem isn’t what it can do; it’s how it feels. The old voice mode was basically text-to-speech on top of GPT-4. That approach brought the model’s careful thinking and willingness to dig deep. By contrast, the new mode is GPT-4o itself, built for quick back-and-forth instead of sustained depth.

The Interview Prep Deception: When the Demo Voice Isn’t the Real Voice

On 8 December 2025, OpenAI released a promotional video for “Interview Practice with ChatGPT”: a thirty-second clip showing a young Indian woman practicing her English interview skills in a sweet, assured voice [YouTube]. The voice is confident, culturally appropriate, and emotionally resonant. It’s exactly what you’d want before a high-stakes job interview.

I tested this feature myself. When I opened the same interview prep tool and asked it to practice in Hindi, the voice that emerged was not the woman’s voice from the video. It was a man’s voice—foreign-accented, struggling with Hindi pronunciation, wavering between tones mid-sentence. When I tried Bangla, the effect was worse: a clearly non-native speaker mangling basic phonetics, sounding like a colonial-era language tutor who’d learned the script phonetically without cultural immersion.

This isn’t a rollout issue. This is misrepresentation.

The video shows a woman’s voice because that’s what would make users trust the product. The reality delivers a “firang man’s wavering voice” because OpenAI hasn’t actually trained their end-to-end audio model on Indian languages with native speakers. They’ve built a Western-centric voice and are marketing it as globally fluent.

If the biryani map had failed, I could retry. If the voice wavers during a geopolitical analysis, I can toggle to text. But if I’m using this tool to prepare for a job interview—a moment where cultural fluency, tonal confidence, and linguistic authenticity determine my economic future—OpenAI is actively harming me.

A woman’s confident voice in the demo says: “This will help you succeed.”

A man’s wavering voice in reality says: “You don’t belong here.”

This is the most insidious form of the Trojan horse: a product marketed as empowerment that actually undermines the confidence of the very users it’s supposed to help. OpenAI isn’t just selling a voice that doesn’t work reliably. They’re selling a voice that makes marginalised users sound less competent than they are.

The User Revolt Silicon Valley Ignored (Then Addressed Incompletely)

The pushback started months before the December demo arrived. In August 2025, OpenAI said it would remove Standard Voice Mode, sparking an angry response from users—a textbook case of how not to handle community concerns.

One Reddit thread called “RIP Standard Voice Mode (2023-2025)” got 554 upvotes and 346 comments, with users calling the plan “foolish” and “wrong”. Disabled people spoke up especially loudly. One person with vision loss wrote:

“From an access angle, this is simply unacceptable. I count on the read-aloud feature.”

On 10 September 2025, OpenAI reversed course and kept Standard Voice Mode. This was a genuine win for community advocacy, but only a partial one. My own testing of the new integrated mode revealed why the toggle remains necessary. The new voice is sophisticated and natural 95% of the time, handling complex topics with patience and depth. But that remaining 5% matters enormously. Mid-sentence, the voice occasionally wavers between masculine and feminine tones. It sounds strained, as if struggling for breath. These aren’t constant problems; they’re unpredictable breaks in reliability.

For mainstream users asking for biryani maps, occasional vocal instability doesn’t matter. For disabled users and power users engaging in serious work, unpredictability breaks trust. The old Standard Voice Mode never sounded natural, but it sounded reliable—synthesised from a single coherent model, never wavering. The new voice is more human, but less dependable.

So the toggle remains necessary not because OpenAI wanted it that way, but because they prioritised naturalness over consistency—and that trade-off works for quick transactions but fails for serious engagement. The revolt kept Standard Voice Mode alive. But it didn’t force OpenAI to improve the new voice. It just forced them to admit: maturity requires time they didn’t want to spend before launch.

The Real Problems Users Faced

People weren’t just being nostalgic. They were describing real problems that mattered. In the feature’s first weeks, users reported Advanced Voice Mode had “clicking sounds during playback,” “words I never said appearing,” and “frequent cuts”. Others found it responding to hidden instructions meant only for typing, creating odd exchanges where the AI quoted itself.

These were deployment glitches, not design flaws. OpenAI fixed them through aggressive iteration. By 9 December, when I tested the system, those obvious bugs were gone. The maps worked. The functionality was solid.

Yet a different problem persisted—one less obvious but more consequential. The new voice occasionally wavers between tones mid-sentence and sounds strained. It’s natural 95% of the time, sophisticated in its handling of complex topics. But that unpredictability matters for users who depend on consistency: disabled users, accessibility advocates, power users engaging in serious work.

Users had also uncovered that OpenAI’s “usage data” claim was misleading. The button to revert to Standard Voice Mode was hidden in settings, making the old mode appear less popular than it actually was. It’s easy to claim something is unwanted when most users don’t know the option exists.

The revolt forced OpenAI to keep refining. The technical problems were solved. The maps became reliable. But the core design trade-off—naturalness over consistency—created a new problem the revolt couldn’t fix: a voice that works brilliantly for transactions but unreliably for serious work.

How Users Won (And Everyone Else Did Too)

On 10 September 2025, user anger paid off. OpenAI changed course and said Standard Voice Mode would stay. However, nearly three months later, the December demo doesn’t mention this fight at all. It’s a lesson in selective memory: acting like nothing happened while quietly keeping the changes people fought for.

My biryani search is the dividend. The revolt kept pressure on OpenAI to iterate until the maps worked in the wild, not just in the demo.

Related Analysis: For a deeper look at how OpenAI makes its moves, read my full take on ChatGPT Pulse, which asks whether OpenAI’s proactive assistant genuinely helps or just looks good.

This article draws on analysis of OpenAI’s promotional materials, independent technical reviews, user community feedback, and academic research on voice interface design. All sources are hyperlinked in the text for verification.

Author’s Note: This analysis was revised on 9 December 2025 after real-world testing proved the feature works reliably. The original skepticism about execution timing was wrong. The warnings about platform strategy, accessibility, and voice quality remain more urgent than ever.

Footnotes

- IBM – GPT-4o topic overview

- CometAPI – GPT-4o Audio API details

- OpenAI Community – Advanced Voice Mode step backward discussion

- Reddit – RIP Standard Voice Mode 2023-2025 thread

- TechCrunch – ChatGPT weekly users and business model

- India Today – ChatGPT Voice Mode integrated main interface

- Forte Labs – Voice-only mid-year review

- Suchetan Bauri – AI-generated content tag

- Suchetan Bauri – Tool sprawl productivity delusion

- YouTube – Interview Practice with ChatGPT video

- Suchetan Bauri – ChatGPT Pulse multi-lens critique